Preparing for a machine learning (ML) interview at Anthropic? You’re in the right place. Anthropic, the AI research company behind groundbreaking work in natural language processing (NLP) and AI safety, is one of the most sought-after employers for ML engineers. But landing a job here isn’t easy. Their interview process is rigorous, and they’re looking for candidates who not only understand ML fundamentals but can also apply them creatively to solve real-world problems.

In this blog, we’ll break down the top 25 frequently asked questions in Anthropic ML interviews, complete with detailed answers and pro tips to help you stand out. Whether you’re a seasoned ML engineer or just starting out, this guide will give you the tools you need to ace your interview. Let’s get started!

1. Introduction

If you’re preparing for an ML interview at Anthropic, you’re probably feeling a mix of excitement and nervousness. That’s completely normal. Anthropic is known for pushing the boundaries of AI, and their interview process reflects that. They’re not just testing your knowledge—they’re evaluating how you think, solve problems, and align with their mission of building safe and beneficial AI systems.

This blog is designed to be your ultimate guide. We’ve done the research, talked to candidates who’ve been through the process, and compiled the top 25 questions you’re likely to face. Each question comes with a detailed answer, insights into why it’s asked, and tips to help you shine.

What is Anthropic?

Anthropic is an AI research company focused on developing AI systems that are safe, interpretable, and aligned with human values. Founded by former OpenAI researchers, Anthropic is known for its work on large language models (LLMs) and AI safety. If you’re interviewing here, you’re likely passionate about NLP, deep learning, and the ethical implications of AI.

Why ML Interviews at Anthropic Are Unique

Anthropic’s ML interviews are designed to test both your technical expertise and your ability to think critically about AI’s impact on society. You’ll face questions on everything from foundational ML concepts to cutting-edge NLP techniques. But don’t worry—we’ve got you covered.

2. Understanding Anthropic’s ML Interview Process

Stages of the Interview Process

Anthropic’s interview process typically includes:

Phone Screen: A quick chat with a recruiter to assess your background and fit.

Technical Rounds: Deep dives into ML fundamentals, coding, and system design.

Research Discussion: A conversation about your past projects and research.

Behavioral/Cultural Fit: Questions to assess your alignment with Anthropic’s mission and values.

What Anthropic Looks For

Anthropic is looking for candidates who:

Have a strong grasp of ML fundamentals.

Can apply ML techniques to solve real-world problems.

Are passionate about AI safety and ethics.

Can communicate complex ideas clearly.

How to Prepare

Brush up on ML basics (e.g., supervised learning, neural networks).

Practice coding in Python.

Read Anthropic’s research papers to understand their focus areas.

Prepare for behavioral questions by reflecting on your past experiences.

Top 25 Frequently Asked Questions in Anthropic ML Interviews with Detailed Answers

Category 1: Foundational ML Concepts

1. Explain the bias-variance tradeoff.

Why This Question is Asked: This is a core ML concept that tests your understanding of model performance.

Detailed Answer:

Bias refers to errors due to overly simplistic assumptions in the learning algorithm. High bias can cause underfitting.

Variance refers to errors due to the model’s sensitivity to small fluctuations in the training set. High variance can cause overfitting.

The goal is to find the right balance between bias and variance to minimize total error.Pro Tip: Use examples like linear regression (high bias) and complex neural networks (high variance) to illustrate your point.

2. What is overfitting, and how can you prevent it?

Why This Question is Asked: Overfitting is a common problem in ML, and Anthropic wants to see if you know how to address it.

Detailed Answer:

Overfitting occurs when a model learns the training data too well, including noise and outliers, and performs poorly on new data.

Prevention Techniques:

Use more training data.

Apply regularization (e.g., L1/L2 regularization).

Simplify the model.

Use cross-validation.Pro Tip: Mention how Anthropic’s focus on interpretability ties into avoiding overfitting.

3. What is the difference between supervised and unsupervised learning?

Why This Question is Asked: This tests your understanding of basic ML paradigms.

Detailed Answer:

Supervised Learning: The model is trained on labeled data (e.g., classification, regression).

Unsupervised Learning: The model is trained on unlabeled data to find patterns (e.g., clustering, dimensionality reduction).Pro Tip: Provide examples like spam detection (supervised) and customer segmentation (unsupervised).

4. How do you handle missing data in a dataset?

Why This Question is Asked: Missing data is a common issue in real-world datasets.

Detailed Answer:

Techniques:

Remove rows with missing data (if the dataset is large).

Impute missing values using mean, median, or mode.

Use advanced methods like KNN imputation or predictive modeling.Pro Tip: Discuss the trade-offs of each method.

5. What is cross-validation, and why is it important?

Why This Question is Asked: Cross-validation is a key technique for evaluating model performance.

Detailed Answer:

Cross-validation involves splitting the data into multiple folds, training the model on some folds, and validating it on others.

Importance: It provides a more robust estimate of model performance than a single train-test split.Pro Tip: Mention k-fold cross-validation as a common approach.

Category 2: Deep Learning and Neural Networks

6. How does backpropagation work?

Why This Question is Asked: Backpropagation is the backbone of training neural networks.

Detailed Answer:

Backpropagation is an algorithm used to calculate the gradient of the loss function with respect to each weight in the network.

It works by:

Forward pass: Compute the output.

Calculate the loss.

Backward pass: Compute gradients using the chain rule.

Update weights using gradient descent.Pro Tip: Use a simple neural network diagram to explain the process.

7. What is a transformer model, and how does it work?

Why This Question is Asked: Transformers are at the core of Anthropic’s work in NLP.

Detailed Answer:

A transformer is a model architecture that uses self-attention mechanisms to process input data in parallel.

Key components:

Self-Attention: Weighs the importance of different words in a sentence.

Positional Encoding: Adds information about the position of words.

Feedforward Layers: Process the output of the attention layers.Pro Tip: Discuss how transformers have revolutionized NLP and their role in Anthropic’s research.

8. What is the difference between CNNs and RNNs?

Why This Question is Asked: This tests your understanding of different neural network architectures.

Detailed Answer:

CNNs (Convolutional Neural Networks): Used for grid-like data (e.g., images). They use convolutional layers to extract spatial features.

RNNs (Recurrent Neural Networks): Used for sequential data (e.g., text, time series). They have loops to retain information over time.Pro Tip: Highlight how CNNs are used in computer vision and RNNs in NLP.

9. Explain the concept of attention mechanisms.

Why This Question is Asked: Attention mechanisms are critical in modern NLP models.

Detailed Answer:

Attention allows a model to focus on specific parts of the input when making predictions.

Example: In machine translation, the model pays attention to relevant words in the source sentence when generating each word in the target sentence.Pro Tip: Mention how attention improves model performance and interpretability.

10. What is batch normalization, and why is it used?

Why This Question is Asked: Batch normalization is a key technique for training deep neural networks.

Detailed Answer:

Batch normalization normalizes the inputs of each layer to have a mean of 0 and a standard deviation of 1.

Benefits: It stabilizes training, allows for higher learning rates, and reduces overfitting.Pro Tip: Explain how it works during training and inference.

Category 3: Natural Language Processing (NLP)

11. What is the difference between word2vec and BERT?

Why This Question is Asked: This tests your understanding of NLP model evolution.

Detailed Answer:

Word2Vec: A shallow model that learns word embeddings by predicting surrounding words (CBOW) or predicting a word given its context (Skip-Gram).

BERT: A deep transformer-based model that learns contextualized word embeddings by considering the entire sentence.Pro Tip: Highlight how BERT’s bidirectional context understanding makes it superior for tasks like question answering.

12. How does a language model like GPT generate text?

Why This Question is Asked: GPT models are central to Anthropic’s work.

Detailed Answer:

GPT (Generative Pre-trained Transformer) uses a transformer architecture to predict the next word in a sequence.

It is trained on large text corpora and fine-tuned for specific tasks.Pro Tip: Discuss how GPT’s autoregressive nature enables text generation.

13. What are embeddings, and why are they important in NLP?

Why This Question is Asked: Embeddings are foundational to NLP.

Detailed Answer:

Embeddings are dense vector representations of words or sentences.

Importance: They capture semantic relationships and reduce dimensionality.Pro Tip: Mention popular embedding techniques like word2vec, GloVe, and BERT.

14. Explain the concept of tokenization in NLP.

Why This Question is Asked: Tokenization is a key preprocessing step in NLP.

Detailed Answer:

Tokenization involves splitting text into individual tokens (e.g., words, subwords).

Example: “I love AI” → [“I”, “love”, “AI”].Pro Tip: Discuss challenges like handling punctuation and out-of-vocabulary words.

15. What is the role of positional encoding in transformers?

Why This Question is Asked: Positional encoding is critical for transformers to understand word order.

Detailed Answer:

Positional encoding adds information about the position of words in a sequence to the input embeddings.

Without it, transformers would treat input sequences as unordered sets.Pro Tip: Mention how sinusoidal functions are commonly used for positional encoding.

Category 4: Probability and Statistics

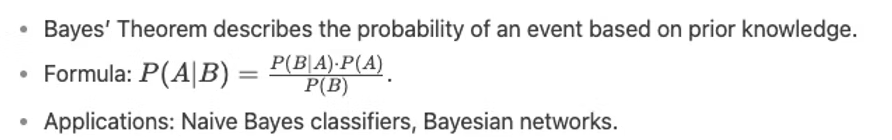

16. What is Bayes’ Theorem, and how is it used in ML?

Why This Question is Asked: Bayes’ Theorem is fundamental to probabilistic models.

Detailed Answer:

Pro Tip: Use a real-world example like spam detection to explain its application.

17. Explain the Central Limit Theorem.

Why This Question is Asked: This tests your understanding of statistical theory.

Detailed Answer:

The Central Limit Theorem states that the distribution of sample means approximates a normal distribution as the sample size increases, regardless of the population’s distribution.Pro Tip: Use an example like rolling dice to illustrate the concept.

18. What is the difference between correlation and causation?

Why This Question is Asked: This tests your ability to interpret data correctly.

Detailed Answer:

Correlation: A statistical relationship between two variables.

Causation: One variable directly affects another.

Example: Ice cream sales and drowning incidents are correlated (both increase in summer), but one does not cause the other.Pro Tip: Emphasize the importance of controlled experiments to establish causation.

19. How do you calculate the p-value, and what does it mean?

Why This Question is Asked: P-values are critical in hypothesis testing.

Detailed Answer:

The p-value is the probability of observing the data (or something more extreme) if the null hypothesis is true.

A low p-value (typically < 0.05) suggests that the null hypothesis can be rejected.Pro Tip: Explain how p-values are used in A/B testing.

20. What is the difference between parametric and non-parametric models?

Why This Question is Asked: This tests your understanding of model types.

Detailed Answer:

Parametric Models: Assume a fixed number of parameters (e.g., linear regression).

Non-Parametric Models: The number of parameters grows with the data (e.g., decision trees).Pro Tip: Discuss the trade-offs in terms of interpretability and flexibility.

Category 5: Coding and Algorithmic Challenges

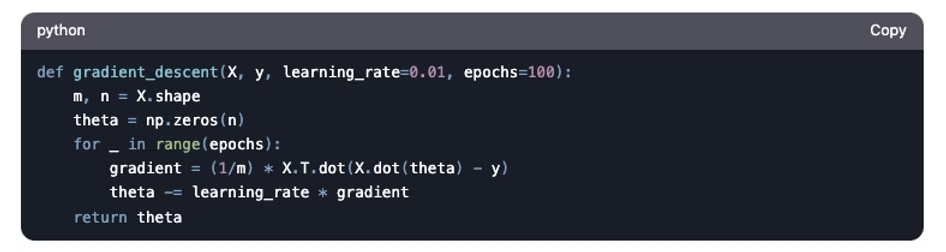

21. Write a Python function to implement gradient descent.

Why This Question is Asked: This tests your coding skills and understanding of optimization.

Detailed Answer:

Pro Tip: Explain how learning rate and epochs affect convergence.

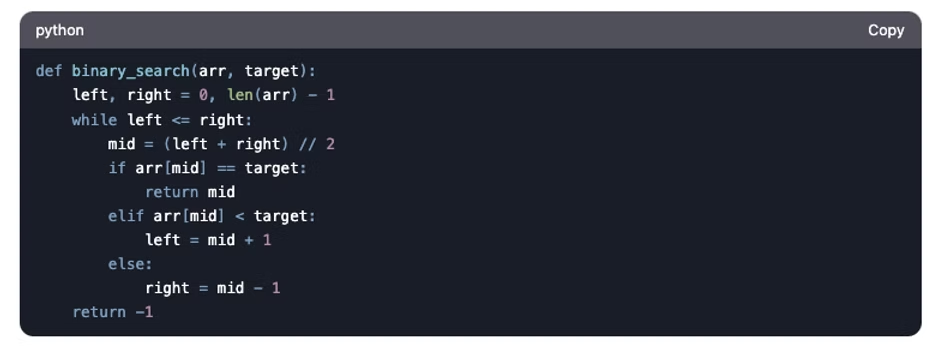

22. How would you implement a binary search algorithm?

Why This Question is Asked: Binary search is a classic algorithm.

Detailed Answer:

Pro Tip: Discuss the time complexity (O(log n)).

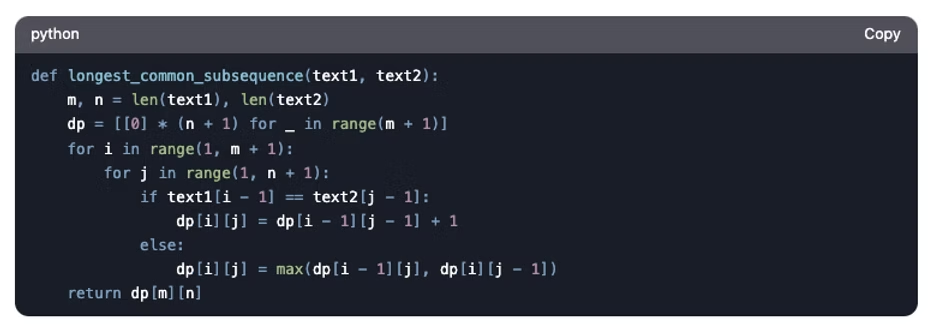

23. Write a function to find the longest common subsequence between two strings.

Why This Question is Asked: This tests your dynamic programming skills.

Detailed Answer:

Pro Tip: Explain the DP table and how it works.

24. How would you optimize a slow-running ML model?

Why This Question is Asked: This tests your problem-solving and optimization skills.

Detailed Answer:

Techniques:

Reduce dataset size (e.g., sampling).

Use feature selection to remove irrelevant features.

Optimize hyperparameters.

Use more efficient algorithms (e.g., gradient boosting instead of neural networks).Pro Tip: Discuss trade-offs between accuracy and speed.

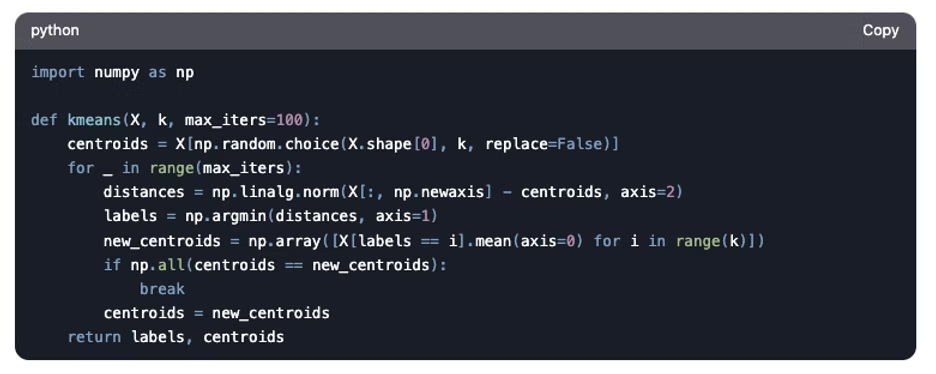

25. Write code to perform k-means clustering from scratch.

Why This Question is Asked: This tests your understanding of clustering algorithms.

Detailed Answer:

Pro Tip: Explain the steps (initialization, assignment, update) and convergence criteria.

4. How to Stand Out in Anthropic ML Interviews

Demonstrate Deep Understanding: Go beyond textbook answers. Show how you’ve applied concepts in real-world projects.

Ask Insightful Questions: For example, “How does Anthropic approach AI safety in its research?”

Show Passion for AI Ethics: Highlight your interest in building safe and beneficial AI systems.

5. Common Mistakes to Avoid

Technical Mistakes: Misapplying concepts or failing to communicate clearly.

Behavioral Mistakes: Not aligning with Anthropic’s values.

Logistical Mistakes: Poor time management during coding challenges.

6. Resources for Further Preparation

Books: “Deep Learning” by Ian Goodfellow.

Courses: Andrew Ng’s ML course on Coursera.

Practice Platforms: LeetCode, Kaggle, InterviewNode.

7. Conclusion

Preparing for an ML interview at Anthropic is challenging but rewarding. With the right preparation and mindset, you can stand out and land your dream job. Use this guide as your roadmap, and don’t forget to check out InterviewNode for personalized interview prep.

8. FAQs

How long should I prepare?: At least 2-3 months.

What if I don’t have a strong NLP background?: Focus on foundational ML concepts and practice coding.

How important is system design?: Very important—be ready to design scalable ML systems.

Good luck with your Anthropic ML interview! Register for our free webinar to know more about how Interview Node could help you succeed.