Landing a machine learning (ML) role at Meta (formerly Facebook) is a dream for many software engineers and data scientists. Meta is at the forefront of AI innovation, powering everything from Instagram’s recommendation systems to Facebook’s newsfeed algorithms. But with great innovation comes a challenging interview process. Meta’s ML interviews are designed to test not only your technical knowledge but also your problem-solving skills, coding abilities, and ability to apply ML concepts to real-world problems.

If you’re preparing for an ML interview at Meta, you’re in the right place. In this blog, we’ll break down the top 25 frequently asked questions in Meta ML interviews, complete with detailed answers and tips to help you ace your interview. At InterviewNode, we specialize in helping software engineers like you prepare for ML interviews at top companies. Let’s dive in!

Understanding Meta’s ML Interview Process

Before we jump into the questions, it’s important to understand Meta’s interview process. Meta’s ML interviews typically consist of the following stages:

Phone Screen: A 45-minute coding interview focusing on data structures and algorithms.

Technical Interviews: These include coding, ML fundamentals, and system design rounds.

Applied ML Interviews: You’ll be asked to solve real-world ML problems or case studies.

Behavioral Interviews: Meta places a strong emphasis on cultural fit, so expect questions about your past experiences and how you handle challenges.

Meta evaluates candidates on four key areas:

Coding Skills: Can you write clean, efficient code under pressure?

ML Fundamentals: Do you understand the core concepts of machine learning?

Problem-Solving: Can you apply ML techniques to solve real-world problems?

Cultural Fit: Are you aligned with Meta’s values and mission?

Now that you know what to expect, let’s explore the top 25 questions you’re likely to encounter in a Meta ML interview.

Category 1: Coding and Algorithms

1. Given an array of integers, find two numbers such that they add up to a specific target number.

Why it’s asked: This question tests your ability to write efficient code and use data structures like hash maps. It’s a common problem that evaluates your problem-solving skills and understanding of time complexity.

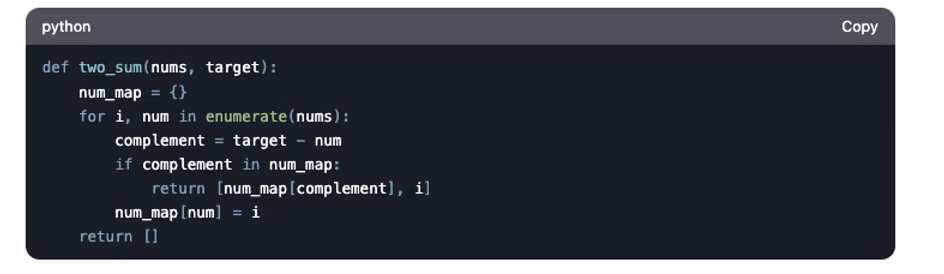

Detailed Answer: The brute-force approach checks every pair of numbers in the array to see whether they add up to the target. However, this has a time complexity of O(n²), which is inefficient for large inputs. A better approach is to use a hash map (or dictionary) that stores each element you have already seen along with its index, so the complement of the current element (target minus the element) can be looked up in constant time on average. This reduces the time complexity to O(n) at the cost of O(n) extra space.

Here’s how it works:

Initialize an empty hash map.

Iterate through the array. For each element, calculate the complement (target - current element).

Check if the complement exists in the hash map. If it does, return the indices of the current element and its complement.

If the complement doesn’t exist, add the current element and its index to the hash map.

Code Snippet:
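The steps above can be sketched in Python roughly as follows. This is a minimal illustration rather than a reference solution; the function name `two_sum` and the choice to return `None` when no pair exists are assumptions made for this example.

```python
def two_sum(nums, target):
    """Return a tuple of indices (i, j) with nums[i] + nums[j] == target, or None."""
    seen = {}  # maps each value visited so far to its index
    for i, value in enumerate(nums):
        complement = target - value
        if complement in seen:
            # The complement was seen earlier, so the pair is complete.
            return seen[complement], i
        seen[value] = i
    return None  # assumption: signal "no pair found" with None


print(two_sum([2, 7, 11, 15], 9))  # (0, 1)
```

Checking for the complement before inserting the current element keeps an element from being paired with itself.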

Tip: Practice similar problems on platforms like LeetCode to get comfortable with hash maps and their applications.

2. Implement a binary search algorithm.

Why it’s asked: Binary search is a fundamental algorithm that tests your understanding of divide-and-conquer strategies and efficient searching.

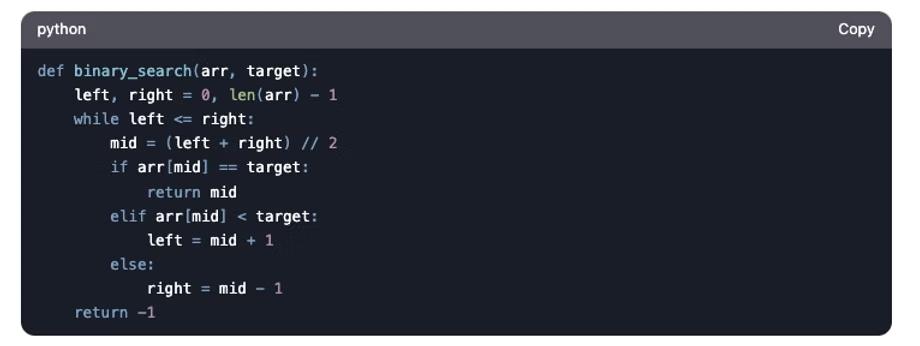

Detailed Answer: Binary search works on sorted arrays by repeatedly dividing the search interval in half. If the value of the search key is less than the item in the middle of the interval, narrow the interval to the lower half. Otherwise, narrow it to the upper half. This process continues until the value is found or the interval is empty.

Steps:

Initialize two pointers, left and right, to the start and end of the array.

Calculate the middle index as (left + right) // 2.

Compare the middle element with the target:

If they are equal, return the middle index.

If the middle element is less than the target, move the left pointer to mid + 1.

If the middle element is greater than the target, move the right pointer to mid - 1.

Repeat until left exceeds right.

Code Snippet:
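A minimal Python sketch of the iterative procedure described above. The function name `binary_search` and the convention of returning -1 for a missing target are assumptions for illustration.

```python
def binary_search(arr, target):
    """Return the index of target in the sorted list arr, or -1 if absent."""
    left, right = 0, len(arr) - 1
    while left <= right:
        mid = (left + right) // 2
        if arr[mid] == target:
            return mid
        if arr[mid] < target:
            left = mid + 1   # target can only be in the upper half
        else:
            right = mid - 1  # target can only be in the lower half
    return -1  # interval is empty: target not present


print(binary_search([1, 3, 5, 7, 9], 5))  # 2
```

An empty array is handled naturally: `right` starts at -1, so the loop body never runs.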

Example: For arr = [1, 3, 5, 7, 9] and target = 5, the function returns 2 because arr[2] = 5.

Tip: Be ready to explain the time complexity (O(log n)) and handle edge cases like empty arrays or targets not in the array.

3. Reverse a linked list.

Why it’s asked: This question tests your understanding of pointers and data structures, which are critical for working with dynamic data.

Detailed Answer: Reversing a linked list involves changing the direction of the pointers so that the last node becomes the first node. You can do this iteratively or recursively.

Iterative Approach:

Initialize three pointers: prev (to track the previous node), curr (to track the current node), and next_node (to temporarily store the next node).

Traverse the list, updating the next pointer of the current node to point to the previous node.

Move the prev and curr pointers one step forward.

Repeat until curr becomes None.

Code Snippet:
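The iterative approach can be sketched as below. The `ListNode` class and the `to_list` helper are hypothetical scaffolding added so the example runs on its own; only `reverse_list` corresponds to the steps above.

```python
class ListNode:
    """Minimal singly linked list node (assumed for this example)."""

    def __init__(self, val, next=None):
        self.val = val
        self.next = next


def reverse_list(head):
    """Reverse the list in place and return the new head."""
    prev, curr = None, head
    while curr is not None:
        next_node = curr.next  # remember the rest of the list
        curr.next = prev       # point the current node backward
        prev = curr            # advance prev
        curr = next_node       # advance curr
    return prev


def to_list(head):
    """Helper: collect node values into a Python list for inspection."""
    values = []
    while head is not None:
        values.append(head.val)
        head = head.next
    return values


head = ListNode(1, ListNode(2, ListNode(3, ListNode(4))))
print(to_list(reverse_list(head)))  # [4, 3, 2, 1]
```

An empty list (`head` is `None`) and a single-node list both fall out of the same loop without special cases.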

Example: For a linked list 1 -> 2 -> 3 -> 4, the reversed list is 4 -> 3 -> 2 -> 1.

Tip: Practice drawing diagrams to visualize pointer manipulation and handle edge cases like empty lists or single-node lists.

4. Find the longest substring without repeating characters.

Why it’s asked: This question evaluates your ability to solve string manipulation problems efficiently using techniques like sliding windows.

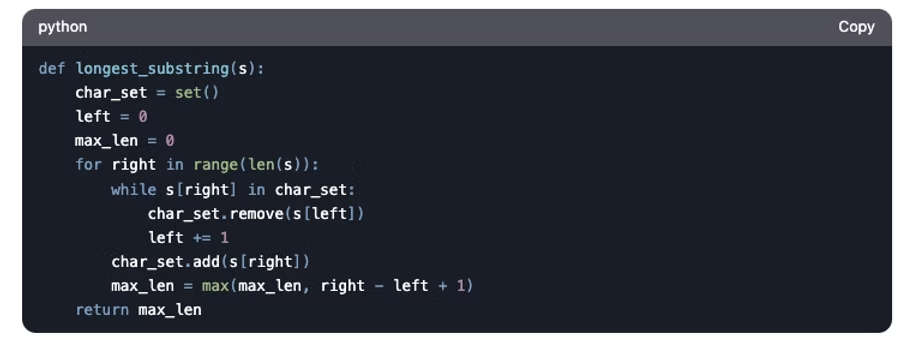

Detailed Answer: The sliding window technique involves maintaining a window of characters that haven’t been repeated. You use two pointers, left and right, to represent the current window. As you iterate through the string, you move the right pointer forward and update the left pointer if a repeating character is found.

Steps:

Initialize a hash set to track unique characters and two pointers, left and right, to represent the window.

Move the right pointer forward. If the character at right is not in the set, add it to the set and update the maximum length.

If the character is already in the set, move the left pointer forward and remove characters from the set until the repeating character is no longer in the set.

Repeat until the right pointer reaches the end of the string.

Code Snippet:
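One way to express the sliding-window steps above in Python. The function name `length_of_longest_substring` is an assumption for this sketch.

```python
def length_of_longest_substring(s):
    """Return the length of the longest substring of s with no repeated characters."""
    window = set()  # characters currently inside the window s[left:right + 1]
    left = 0
    best = 0
    for right, ch in enumerate(s):
        # Shrink the window from the left until ch is no longer a duplicate.
        while ch in window:
            window.remove(s[left])
            left += 1
        window.add(ch)
        best = max(best, right - left + 1)
    return best


print(length_of_longest_substring("abcabcbb"))  # 3
```

Each character is added to and removed from the set at most once, so the running time is O(n) even though there is a nested loop.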

Example: For s = "abcabcbb", the function returns 3 because the longest substring without repeating characters is "abc".

Tip: Practice sliding window problems to master this technique and handle edge cases like empty strings or strings with all unique characters.

5. Merge k sorted lists.

Why it’s asked: This question tests your ability to work with heaps and merge operations, which are common in real-world applications like merging logs or databases.

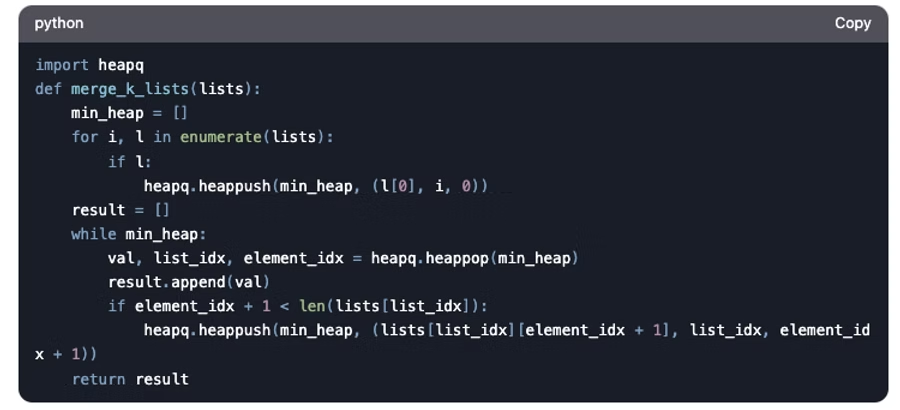

Detailed Answer: Merging k sorted lists efficiently requires using a min-heap (priority queue). The idea is to insert the first element of each list into the heap, then repeatedly extract the smallest element and add the next element from the same list to the heap.

Steps:

Initialize a min-heap and insert the first element of each list along with its list index and element index.

Extract the smallest element from the heap and add it to the result.

If there are more elements in the same list, insert the next element into the heap.

Repeat until the heap is empty.

Code Snippet:
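A minimal sketch of the heap-based merge using Python's standard `heapq` module. It assumes the inputs are plain Python lists (matching the list-index and element-index bookkeeping described above); the function name `merge_k_sorted` is an assumption.

```python
import heapq


def merge_k_sorted(lists):
    """Merge k sorted Python lists into one sorted list."""
    heap = []
    # Seed the heap with the first element of every non-empty list.
    for list_idx, lst in enumerate(lists):
        if lst:
            heapq.heappush(heap, (lst[0], list_idx, 0))

    result = []
    while heap:
        value, list_idx, elem_idx = heapq.heappop(heap)
        result.append(value)
        next_idx = elem_idx + 1
        if next_idx < len(lists[list_idx]):
            # Push the successor from the same list that value came from.
            heapq.heappush(heap, (lists[list_idx][next_idx], list_idx, next_idx))
    return result


print(merge_k_sorted([[1, 4, 5], [1, 3, 4], [2, 6]]))  # [1, 1, 2, 3, 4, 4, 5, 6]
```

The heap never holds more than k entries, which is where the log k factor in the running time comes from.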

Example: For lists = [[1, 4, 5], [1, 3, 4], [2, 6]], the function returns [1, 1, 2, 3, 4, 4, 5, 6].

Tip: Understand the time complexity (O(n log k), where n is the total number of elements across all lists and k is the number of lists) and practice implementing heaps.

Category 2: Machine Learning Fundamentals

6. What is the bias-variance tradeoff?

Why it’s asked: This question tests your understanding of model performance, overfitting, and underfitting, which are critical concepts in machine learning.

Detailed Answer: The bias-variance tradeoff is a fundamental concept in machine learning that describes the tension between two sources of error in predictive models:

Bias: This is the error due to overly simplistic assumptions in the learning algorithm. High bias can cause an algorithm to miss relevant relations between features and target outputs (underfitting).

Variance: This is the error due to the model’s sensitivity to small fluctuations in the training set. High variance can cause overfitting, where the model captures noise instead of the underlying pattern.

Example:

A linear regression model has high bias because it assumes a linear relationship between features and the target, which may be too simplistic for complex datasets.

A decision tree with no depth limit has high variance because it can grow overly complex and fit the training data too closely, including its noise.

How to Balance Bias and Variance:

Reduce Bias: Use more complex models, add features, or reduce regularization.

Reduce Variance: Use simpler models, apply regularization (e.g., L1/L2), or increase training data.

Tip: Always use cross-validation to evaluate your model’s performance and ensure it generalizes well to unseen data.

7. How does gradient descent work?

Why it’s asked: Gradient descent is the backbone of many machine learning algorithms, and this question evaluates your understanding of optimization techniques.

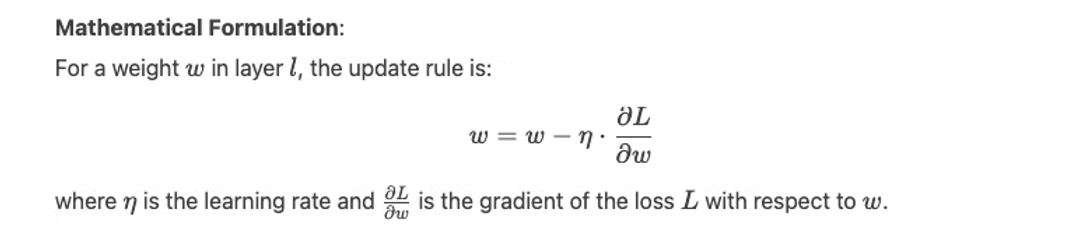

Detailed Answer: Gradient descent is an iterative optimization algorithm used to minimize a loss function by adjusting model parameters. Here’s how it works:

Initialize Parameters: Start with random values for the model parameters (e.g., weights in a neural network).

Compute Gradient: Calculate the gradient of the loss function with respect to each parameter. The gradient indicates the direction of the steepest ascent.

Update Parameters: Adjust the parameters in the opposite direction of the gradient to minimize the loss. The size of the step is controlled by the learning rate.

Repeat: Iterate until the loss converges to a minimum.

Types of Gradient Descent:

Batch Gradient Descent: Uses the entire dataset to compute the gradient. It’s accurate but computationally expensive.

Stochastic Gradient Descent (SGD): Uses a single data point to compute the gradient. It’s faster but noisier.

Mini-Batch Gradient Descent: Uses a small batch of data to compute the gradient. It balances speed and accuracy.

Example: In linear regression, gradient descent is used to minimize the mean squared error (MSE) by adjusting the slope and intercept of the line.

Tip: Be ready to discuss challenges like local minima, saddle points, and the importance of learning rate tuning.
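To make the linear regression example concrete, here is a small batch gradient descent sketch in plain Python. The function name `fit_line`, the learning rate, the number of epochs, and the toy data are all assumptions chosen for illustration. Parameters start at zero rather than at random values to keep the run deterministic.

```python
def fit_line(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = slope * x + intercept by batch gradient descent on the MSE."""
    slope, intercept = 0.0, 0.0  # assumption: zero init for reproducibility
    n = len(xs)
    for _ in range(epochs):
        # Prediction errors for the current parameters.
        errors = [slope * x + intercept - y for x, y in zip(xs, ys)]
        # Gradients of MSE = (1/n) * sum(error^2) w.r.t. each parameter.
        grad_slope = (2 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_intercept = (2 / n) * sum(errors)
        # Step against the gradient, scaled by the learning rate.
        slope -= learning_rate * grad_slope
        intercept -= learning_rate * grad_intercept
    return slope, intercept


# Toy data generated from y = 2x + 1 (no noise).
slope, intercept = fit_line([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])
print(round(slope, 3), round(intercept, 3))
```

With a learning rate that is too large for the data, the same loop diverges instead of converging, which is a useful point to raise when discussing learning rate tuning.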

8. What is regularization, and why is it important?

Why it’s asked: Regularization is a key technique to prevent overfitting, and this question evaluates your understanding of model generalization.

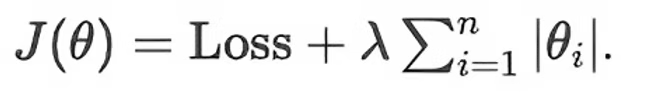

Detailed Answer: Regularization is a technique used to prevent overfitting by adding a penalty term to the loss function. This penalty discourages the model from fitting the noise in the training data.

Types of Regularization:

L1 Regularization (Lasso):

Adds the absolute value of the coefficients as a penalty term.

Encourages sparsity, meaning some coefficients can become exactly zero.

Formula:

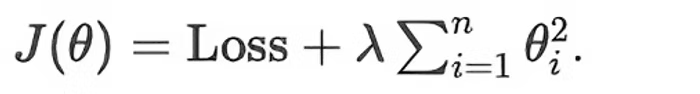

L2 Regularization (Ridge):

Adds the squared magnitude of the coefficients as a penalty term.

Shrinks coefficients but doesn’t set them to zero.

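Formula: $L_{\text{ridge}} = L_{\text{data}} + \lambda \sum_{i} w_i^2$, where $L_{\text{data}}$ is the unpenalized loss, $w_i$ are the model coefficients, and $\lambda \ge 0$ controls the strength of the penalty.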

Elastic Net:

Combines L1 and L2 regularization.

Useful when there are correlated features.

Why Regularization is Important:

Prevents overfitting by controlling model complexity.

Improves generalization to unseen data.

Helps handle multicollinearity in regression models.

Example: In a linear regression model, L2 regularization can shrink the coefficients of less important features, reducing their impact on predictions.
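The penalty terms described above can be written as small Python helpers. This is a sketch under assumptions: the function names, the `lam` parameter (penalty strength), and the `l1_ratio` mixing parameter for Elastic Net are illustrative, and Elastic Net is parameterized in different ways by different libraries.

```python
def l1_penalty(weights, lam):
    """Lasso penalty: lam times the sum of absolute coefficient values."""
    return lam * sum(abs(w) for w in weights)


def l2_penalty(weights, lam):
    """Ridge penalty: lam times the sum of squared coefficient values."""
    return lam * sum(w * w for w in weights)


def elastic_net_penalty(weights, lam, l1_ratio=0.5):
    """One possible mix of the two penalties (assumed parameterization)."""
    return (l1_ratio * l1_penalty(weights, lam)
            + (1 - l1_ratio) * l2_penalty(weights, lam))


def regularized_loss(data_loss, weights, lam, penalty=l2_penalty):
    """Total objective: the unpenalized loss plus the chosen penalty."""
    return data_loss + penalty(weights, lam)


weights = [1.0, -2.0, 0.5]
print(l1_penalty(weights, 0.1), l2_penalty(weights, 0.1))
```

Because the L2 penalty squares each coefficient, large coefficients are penalized much more heavily than small ones, whereas the L1 penalty grows linearly and is what drives some coefficients to exactly zero.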

9. What is cross-validation, and how does it work?

Why it’s asked: Cross-validation is a critical technique for model evaluation, and this question tests your understanding of how to assess model performance.

Detailed Answer: Cross-validation is a technique used to evaluate the performance of a model by partitioning the data into multiple subsets and training/testing the model on different combinations of these subsets.

Steps in k-Fold Cross-Validation:

Split the dataset into k equal-sized folds.

For each fold:

Use the fold as the validation set.

Use the remaining k−1 folds as the training set.

Train the model and evaluate its performance on the validation set.

Average the performance metrics across all folds to get the final evaluation.

Advantages:

Provides a more reliable estimate of model performance than a single train-test split.

Uses every sample for both training and validation across the folds, which reduces the risk of drawing conclusions from a single unrepresentative split.

Example: For a dataset with 1000 samples and k = 5, each fold contains 200 samples. The model is trained and validated 5 times, each time using a different fold as the validation set.

Tip: Use stratified k-fold cross-validation for imbalanced datasets to ensure each fold has a representative distribution of classes.
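The fold bookkeeping in k-fold cross-validation can be sketched without any libraries. The generator name `k_fold_indices` is an assumption, and this version does not shuffle or stratify, which you would normally want in practice.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, val_indices) pairs for each of the k folds."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, val
        start += size


for train, val in k_fold_indices(1000, 5):
    print(len(train), len(val))  # 800 200 on each of the 5 iterations
```

In a real pipeline you would train the model on `train`, score it on `val`, and average the k scores.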

10. What is the difference between bagging and boosting?

Why it’s asked: This question evaluates your understanding of ensemble methods, which are widely used in machine learning.

Detailed Answer: Bagging and boosting are ensemble techniques that combine multiple models to improve performance, but they work in different ways.

Bagging (Bootstrap Aggregating):

How it works: Trains multiple models independently on different subsets of the training data (sampled with replacement) and averages their predictions.

Goal: Reduces variance and prevents overfitting.

Example: Random Forest, which combines multiple decision trees.

Boosting:

How it works: Trains models sequentially, with each model focusing on the errors made by the previous one.

Goal: Reduces bias and improves accuracy.

Example: AdaBoost and Gradient Boosting Machines (GBM).

Key Differences:

Model Training: Bagging trains models in parallel, while boosting trains them sequentially.

Error Focus: Bagging reduces variance, while boosting reduces bias.

Performance: Boosting often achieves higher accuracy but is more prone to overfitting.

Example:

Bagging: Random Forest for classification tasks.

Boosting: XGBoost for winning Kaggle competitions.

Tip: Use bagging for high-variance models (e.g., deep decision trees) and boosting for high-bias models (e.g., shallow trees).

Category 3: Deep Learning and Neural Networks

11. What is backpropagation, and how does it work?

Why it’s asked: Backpropagation is the foundation of training neural networks, and this question tests your understanding of how neural networks learn.

Detailed Answer: Backpropagation is an algorithm used to train neural networks by minimizing the loss function. It works by propagating the error backward through the network and updating the weights using gradient descent.

Steps:

Forward Pass: Compute the output of the network for a given input.

Compute Loss: Calculate the difference between the predicted output and the actual target using a loss function (e.g., mean squared error).

Backward Pass: Compute the gradient of the loss with respect to each weight using the chain rule of calculus.

Update Weights: Adjust the weights in the opposite direction of the gradient to minimize the loss.

Example: In a simple neural network with one hidden layer, backpropagation computes the gradients for the weights between the input and hidden layers and between the hidden and output layers.

Tip: Be ready to discuss challenges like vanishing gradients and how techniques like ReLU activation functions address them.
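The four steps can be traced by hand on a deliberately tiny network: one input, one tanh hidden unit, and one linear output, trained on a single example with squared error. Everything here (the architecture, the function names, the learning rate, and the numbers) is an assumption chosen to keep the chain rule visible, not a realistic model.

```python
import math


def forward(x, w1, w2):
    """Forward pass: input -> tanh hidden unit -> linear output."""
    h = math.tanh(w1 * x)
    y_hat = w2 * h
    return h, y_hat


def loss(x, y, w1, w2):
    """Squared error for a single example."""
    _, y_hat = forward(x, w1, w2)
    return (y_hat - y) ** 2


def backward(x, y, w1, w2):
    """Backward pass: gradients of the loss w.r.t. w1 and w2 via the chain rule."""
    h, y_hat = forward(x, w1, w2)
    dloss_dyhat = 2 * (y_hat - y)             # d(loss)/d(y_hat)
    grad_w2 = dloss_dyhat * h                 # y_hat = w2 * h
    dloss_dh = dloss_dyhat * w2               # propagate back to the hidden unit
    grad_w1 = dloss_dh * (1 - h * h) * x      # tanh'(z) = 1 - tanh(z)^2
    return grad_w1, grad_w2


def train_step(x, y, w1, w2, learning_rate=0.1):
    """Update both weights by one gradient descent step."""
    grad_w1, grad_w2 = backward(x, y, w1, w2)
    return w1 - learning_rate * grad_w1, w2 - learning_rate * grad_w2


x, y, w1, w2 = 0.5, 1.0, 0.3, -0.7
new_w1, new_w2 = train_step(x, y, w1, w2)
print(loss(x, y, w1, w2), loss(x, y, new_w1, new_w2))  # loss before and after one step
```

A common sanity check is to compare these analytic gradients with finite-difference estimates of the loss; the two should agree to several decimal places.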

12. What is the difference between CNNs and RNNs?

Why it’s asked: This question evaluates your understanding of different neural network architectures and their applications.

Detailed Answer: Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) are two of the most widely used neural network architectures, each designed for specific types of data.

CNNs:

Purpose: Designed for spatial data, such as images.

Key Features:

Uses convolutional layers to extract spatial hierarchies of features (e.g., edges, textures).

Employs pooling layers to reduce dimensionality and computational complexity.

Typically followed by fully connected layers for classification or regression.

Applications: Image classification, object detection, and facial recognition.

RNNs:

Purpose: Designed for sequential data, such as time series or text.

Key Features:

Uses recurrent layers to capture temporal dependencies by maintaining a hidden state.

Can process variable-length sequences.

Variants like LSTMs and GRUs address the vanishing gradient problem.

Applications: Language modeling, machine translation, and speech recognition.

Example:

CNN: Classifying images of cats and dogs.

RNN: Predicting the next word in a sentence.

Tip: Be ready to discuss specific layers (e.g., convolutional, pooling, LSTM) and their roles in each architecture.

13. What is the attention mechanism in neural networks?

Why it’s asked: Attention mechanisms are a key advancement in deep learning, and this question tests your understanding of how they improve model performance.

Detailed Answer: Attention mechanisms allow neural networks to focus on specific parts of the input when making predictions, improving their ability to handle long-range dependencies and complex patterns.

How it Works:

Compute Attention Scores: For each element in the input sequence, compute a score that represents its importance relative to other elements.

Compute Attention Weights: Apply a softmax function to the scores to obtain weights that sum to 1.

Compute Context Vector: Multiply the input elements by their corresponding weights and sum the results to produce a context vector.

Use Context Vector: The context vector is used as input to the next layer or for making predictions.

Types of Attention:

Self-Attention: Used in transformer models, where the input sequence attends to itself.

Multi-Head Attention: Uses multiple attention mechanisms in parallel to capture different aspects of the input.

Example: In machine translation, an attention mechanism allows the model to focus on relevant words in the source sentence when generating each word in the target sentence.

Tip: Be ready to discuss the transformer architecture and how attention mechanisms have revolutionized NLP.
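The score, softmax, and weighted-sum steps can be sketched in plain Python for a single query. The text above does not fix a scoring function, so this example assumes scaled dot-product scoring (the variant used in transformers); the names `softmax` and `attend` and the toy vectors are illustrative.

```python
import math


def softmax(scores):
    """Convert raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Return (attention weights, context vector) for one query."""
    scale = math.sqrt(len(query))
    # 1. Attention scores: scaled dot product of the query with each key.
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    # 2. Attention weights via softmax.
    weights = softmax(scores)
    # 3. Context vector: weighted sum of the value vectors.
    dim = len(values[0])
    context = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return weights, context


weights, context = attend(
    query=[2.0, 0.0],
    keys=[[1.0, 0.0], [0.0, 1.0]],
    values=[[1.0, 0.0], [0.0, 1.0]],
)
print(weights, context)  # the first key matches the query, so it gets more weight
```

In self-attention, the queries, keys, and values are all derived from the same input sequence, and multi-head attention runs several such computations in parallel on different learned projections.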

14. What is transfer learning, and how is it used in deep learning?

Why it’s asked: Transfer learning is a powerful technique for leveraging pre-trained models, and this question evaluates your understanding of its applications.

Detailed Answer: Transfer learning involves using a pre-trained model as a starting point for a new task. Instead of training a model from scratch, you fine-tune the pre-trained model on your specific dataset.

Steps:

Choose a Pre-Trained Model: Select a model trained on a large dataset (e.g., ImageNet for images or BERT for text).

Freeze Layers: Freeze the early layers of the model to retain their learned features.

Replace Final Layers: Replace the final layers with new ones tailored to your task (e.g., a new classification layer).

Fine-Tune: Train the model on your dataset, updating only the new layers or a subset of the pre-trained layers.

Advantages:

Reduces training time and computational cost.

Improves performance, especially when you have limited data.

Leverages knowledge learned from large datasets.

Example:

Using a pre-trained ResNet model for image classification and fine-tuning it on a custom dataset of medical images.

Fine-tuning BERT for sentiment analysis on customer reviews.

Tip: Be ready to discuss when to freeze layers and how to choose a pre-trained model for your task.

15. What is the difference between supervised and unsupervised learning?

Why it’s asked: This question tests your understanding of fundamental machine learning paradigms.

Detailed Answer: Supervised and unsupervised learning are two main types of machine learning, each with distinct approaches and applications.

Supervised Learning:

Definition: The model learns from labeled data, where each input has a corresponding output.

Goal: Learn a mapping from inputs to outputs.

Examples:

Classification: Predicting whether an email is spam or not.

Regression: Predicting house prices based on features like size and location.

Algorithms: Linear regression, logistic regression, support vector machines (SVMs), and neural networks.

Unsupervised Learning:

Definition: The model learns from unlabeled data, where only inputs are provided.

Goal: Discover hidden patterns or structures in the data.

Examples:

Clustering: Grouping customers based on purchasing behavior.

Dimensionality Reduction: Reducing the number of features while preserving important information (e.g., PCA).

Algorithms: K-means clustering, hierarchical clustering, and autoencoders.

Key Differences:

Data: Supervised learning uses labeled data, while unsupervised learning uses unlabeled data.

Objective: Supervised learning focuses on prediction, while unsupervised learning focuses on discovery.

Evaluation: Supervised learning uses metrics like accuracy and F1-score, while unsupervised learning uses metrics like silhouette score and inertia.

Example:

Supervised: Predicting customer churn using historical data.

Unsupervised: Segmenting customers into groups based on their behavior.

Tip: Be ready to discuss semi-supervised learning, which combines both approaches.

Category 4: Applied Machine Learning and Case Studies

16. How would you build a recommendation system for Instagram?

Why it’s asked: This question evaluates your ability to apply machine learning to real-world problems, a key skill for roles at Meta.

Detailed Answer: Building a recommendation system for Instagram involves several steps, from data collection to model deployment.

Steps:

Data Collection:

Gather user interaction data (e.g., likes, comments, shares, and time spent on posts).

Collect metadata about posts (e.g., hashtags, captions, and image features).

Feature Engineering:

Extract features from images using CNNs (e.g., ResNet).

Use NLP techniques to analyze captions and hashtags.

Create user profiles based on their interaction history.

Model Selection:

Use collaborative filtering to recommend posts based on user similarity.

Implement matrix factorization techniques like Singular Value Decomposition (SVD).

Use deep learning models like neural collaborative filtering (NCF) or transformer-based models for more advanced recommendations.

Evaluation:

Use metrics like precision, recall, and mean average precision (MAP) to evaluate the model.

Conduct A/B testing to measure the impact of recommendations on user engagement.

Deployment:

Deploy the model in a scalable environment using tools like TensorFlow Serving or TorchServe.

Continuously monitor and update the model based on user feedback.

Example: A hybrid recommendation system that combines collaborative filtering (based on user interactions) and content-based filtering (based on post features) to recommend posts to users.

Tip: Be ready to discuss challenges like cold start (for new users or posts) and scalability.
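To illustrate the collaborative filtering idea from the model selection step, here is a toy user-based recommender in plain Python. The interaction matrix, the user names, and the function names are all hypothetical; a production system would work at a very different scale and with far richer signals.

```python
import math


def cosine(u, v):
    """Cosine similarity between two interaction vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def recommend(interactions, user, top_n=2):
    """Rank posts the user has not interacted with by similar users' activity.

    interactions maps a user id to a list of scores, one per post (0 = unseen).
    """
    target = interactions[user]
    scores = [0.0] * len(target)
    for other, vector in interactions.items():
        if other == user:
            continue
        similarity = cosine(target, vector)
        for post, score in enumerate(vector):
            if target[post] == 0:
                # Weight each neighbor's interaction by how similar they are.
                scores[post] += similarity * score
    unseen = [post for post in range(len(target)) if target[post] == 0]
    return sorted(unseen, key=lambda post: scores[post], reverse=True)[:top_n]


# Hypothetical data: 1 means the user liked the post at that index.
interactions = {
    "alice": [1, 1, 0, 0],
    "bob":   [1, 1, 1, 0],
    "carol": [0, 0, 0, 1],
}
print(recommend(interactions, "alice", top_n=1))  # [2]
```

A brand-new user has an all-zero vector, so every similarity is zero and the ranking carries no signal, which is the cold-start problem in miniature.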

17. How would you detect fake news on Facebook?

Why it’s asked: This question tests your problem-solving skills and ability to apply machine learning to real-world challenges.

Detailed Answer: Detecting fake news involves analyzing text, metadata, and user behavior to identify misleading content.

Steps:

Data Collection:

Gather news articles, social media posts, and user interactions (e.g., shares, comments).

Collect metadata like source credibility and author information.

Feature Engineering:

Use NLP techniques to extract features from text (e.g., sentiment analysis, topic modeling).

Analyze linguistic patterns (e.g., sensational language, excessive use of caps).

Use graph-based features to analyze the spread of information (e.g., how quickly a post is shared).

Model Selection:

Use supervised learning models like logistic regression or gradient boosting for classification.

Implement deep learning models like BERT for text analysis.

Use graph neural networks (GNNs) to analyze the spread of fake news.

Evaluation:

Use metrics like precision, recall, and F1-score to evaluate the model.

Conduct A/B testing to measure the impact of fake news detection on user engagement.

Deployment:

Deploy the model in a real-time system to flag potentially fake news.

Continuously update the model based on new data and user feedback.

Example: A system that uses BERT to analyze the content of news articles and a GNN to analyze how the article is shared across the platform.

Tip: Be ready to discuss ethical considerations, such as avoiding bias and ensuring transparency.

18. How would you optimize Facebook’s newsfeed algorithm?

Why it’s asked: This question evaluates your understanding of ranking algorithms and personalization, which are critical for Meta’s products.

Detailed Answer: Optimizing Facebook’s newsfeed algorithm involves balancing relevance, diversity, and user engagement.

Steps:

Data Collection:

Gather data on user interactions (e.g., likes, comments, shares, and time spent on posts).

Collect metadata about posts (e.g., type, source, and recency).

Feature Engineering:

Extract features from posts (e.g., text, images, and videos).

Create user profiles based on their interaction history and preferences.

Model Selection:

Use reinforcement learning to optimize for long-term user engagement.

Implement ranking models like Learning to Rank (LTR) to prioritize posts.

Use A/B testing to evaluate different ranking strategies.

Evaluation:

Use metrics like click-through rate (CTR), dwell time, and user satisfaction.

Conduct A/B testing to measure the impact of changes on user engagement.

Deployment:

Deploy the optimized algorithm in a scalable environment.

Continuously monitor and update the algorithm based on user feedback.

Example: A reinforcement learning model that balances showing relevant posts with introducing new content to keep users engaged.

Tip: Be ready to discuss tradeoffs between relevance and diversity.

19. How would you predict ad click-through rates (CTR) on Facebook?

Why it’s asked: This question evaluates your ability to work with large-scale data and build predictive models for real-world applications.

Detailed Answer: Predicting ad CTR involves analyzing user behavior, ad content, and contextual features to estimate the likelihood of a user clicking on an ad.

Steps:

Data Collection:

Gather historical data on ad impressions, clicks, and user interactions.

Collect metadata about ads (e.g., text, images, and target audience).

Include contextual features like time of day, device type, and user demographics.

Feature Engineering:

Extract features from ad content using NLP and computer vision techniques.

Create user profiles based on their interaction history and preferences.

Encode categorical features (e.g., ad category, user location) using techniques like one-hot encoding or embeddings.

Model Selection:

Use supervised learning models like logistic regression or gradient boosting for binary classification.

Implement deep learning models like neural networks for more complex patterns.

Use techniques like feature importance analysis to identify key predictors of CTR.

Evaluation:

Use metrics like AUC-ROC, log loss, and precision-recall to evaluate the model.

Conduct A/B testing to measure the impact of predicted CTR on ad performance.

Deployment:

Deploy the model in a real-time system to predict CTR for new ads.

Continuously update the model based on new data and user feedback.

Example: A gradient boosting model that predicts CTR based on ad content, user demographics, and contextual features like time of day.

Tip: Be ready to discuss challenges like class imbalance (low CTR) and how to handle them (e.g., oversampling, class weighting).

20. How would you handle imbalanced data in a classification problem?

Why it’s asked: This question tests your understanding of data preprocessing and model evaluation, which are critical for real-world ML applications.

Detailed Answer: Imbalanced data occurs when one class is significantly underrepresented, leading to biased models. Here’s how to handle it.

Techniques:

Resampling:

Oversampling: Increase the number of samples in the minority class (e.g., using SMOTE).

Undersampling: Reduce the number of samples in the majority class.

Class Weighting:

Assign higher weights to the minority class during model training to penalize misclassifications more heavily.

Data Augmentation:

Create additional minority-class samples by applying label-preserving transformations to existing ones (e.g., flipping images for computer vision tasks).

Algorithm Selection:

Consider tree-based algorithms such as decision trees, random forests, or gradient boosting, which often cope better with imbalanced data, especially when combined with class weights.

Evaluation Metrics:

Use metrics like precision, recall, F1-score, and AUC-PR instead of accuracy, which can be misleading for imbalanced datasets.

Example: In a fraud detection problem where fraudulent transactions are rare, you could use SMOTE to oversample the minority class and train a random forest model with class weighting.

Tip: Be ready to discuss the tradeoffs between different techniques (e.g., oversampling vs. undersampling).
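Two of the ideas above, class weighting and choosing metrics other than accuracy, can be shown with a few lines of plain Python. The weighting rule used here (total samples divided by the number of classes times the class count) is one common "balanced" heuristic and is an assumption for this sketch, as are the function names and the 95/5 toy labels.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class inversely to its frequency."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1 for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


# Toy fraud-style labels: 95 legitimate (0) and 5 fraudulent (1).
y_true = [0] * 95 + [1] * 5
always_legit = [0] * 100  # a model that never flags fraud

accuracy = sum(t == p for t, p in zip(y_true, always_legit)) / len(y_true)
print(accuracy)                                   # 0.95
print(precision_recall_f1(y_true, always_legit))  # (0.0, 0.0, 0.0)
print(balanced_class_weights(y_true)[1])          # 10.0
```

The do-nothing model scores 95% accuracy while catching no fraud at all, which is why recall and F1 on the minority class are the numbers to watch.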

Category 5: Behavioral and Meta-Specific Questions

21. Tell me about a time you faced a challenging technical problem and how you solved it.

Why it’s asked: This question evaluates your problem-solving skills, technical expertise, and ability to communicate effectively.

Detailed Answer: Use the STAR (Situation, Task, Action, Result) method to structure your response.

Situation:

Describe the context of the problem (e.g., a project, deadline, or team setting).

Task:

Explain your role and the specific challenge you faced.

Action:

Detail the steps you took to address the problem (e.g., research, collaboration, or experimentation).

Result:

Share the outcome and impact of your solution (e.g., improved performance, reduced costs, or met deadlines).

Example:

Situation: During a hackathon, our team was tasked with building a recommendation system in 48 hours.

Task: I was responsible for implementing the collaborative filtering algorithm, but we faced issues with scalability.

Action: I researched matrix factorization techniques and implemented an SVD-based approach, which significantly improved performance.

Result: Our solution won second place, and the judges praised its scalability and accuracy.

Tip: Focus on a problem that highlights your technical skills and ability to work under pressure.

22. How do you stay updated with the latest advancements in ML?

Why it’s asked: This question tests your passion for learning and staying current in a rapidly evolving field.

Detailed Answer: Staying updated in ML requires a combination of reading, experimentation, and networking.

Resources:

Research Papers: Read papers from top conferences like NeurIPS, ICML, and CVPR.

Blogs and Newsletters: Follow blogs like Towards Data Science, KDnuggets, and newsletters like The Batch by DeepLearning.AI.

Online Courses: Take courses on platforms like Coursera, edX, and fast.ai.

Open Source Projects: Contribute to or explore projects on GitHub.

Networking: Attend meetups, webinars, and conferences to connect with other professionals.

Example:

“I recently read a paper on transformer-based models and implemented a BERT model for a sentiment analysis project. I also attended a webinar on federated learning, which gave me new ideas for improving data privacy in our models.”

Tip: Be specific about recent advancements you’ve explored and how you’ve applied them.

23. Why do you want to work at Meta?

Why it’s asked: This question evaluates your alignment with Meta’s mission and culture.

Detailed Answer: Highlight Meta’s impact on AI and your desire to contribute to meaningful projects.

Points to Include:

Innovation: Mention Meta’s cutting-edge work in AI, AR/VR, and social media.

Impact: Discuss how Meta’s products (e.g., Facebook, Instagram) impact billions of users worldwide.

Culture: Emphasize Meta’s collaborative and fast-paced environment.

Personal Connection: Share how your skills and interests align with Meta’s goals.

Example:

“I’m inspired by Meta’s work on AI-driven products like Instagram’s recommendation system and Facebook’s newsfeed algorithm. I want to contribute to projects that leverage AI to connect people and improve their experiences. Meta’s culture of innovation and collaboration aligns perfectly with my values and career goals.”

Tip: Be genuine and show enthusiasm for Meta’s mission.

24. How do you handle disagreements within a team?

Why it’s asked: This question tests your teamwork and conflict resolution skills.

Detailed Answer: Use the STAR method to structure your response.

Situation:

Describe a situation where you faced a disagreement (e.g., a project decision or approach).

Task:

Explain your role and the nature of the disagreement.

Action:

Detail how you addressed the disagreement (e.g., active listening, compromise, or data-driven decision-making).

Result:

Share the outcome and how it strengthened the team.

Example:

Situation: During a team project, we disagreed on the choice of algorithm for a classification task.

Task: I advocated for a random forest model, while my teammate preferred a neural network.

Action: We conducted a small experiment to compare the performance of both models and presented the results to the team.

Result: The team agreed to use the random forest model, which performed better and was easier to interpret.

Tip: Emphasize collaboration and a focus on team success.

25. What is your approach to working on long-term projects?

Why it’s asked: This question evaluates your project management and perseverance.

Detailed Answer: Highlight your organizational skills and ability to stay motivated.

Steps:

Break Down the Project: Divide the project into smaller milestones with clear goals.

Set Priorities: Focus on high-impact tasks and manage dependencies.

Track Progress: Use tools like Jira or Trello to monitor progress and adjust plans as needed.

Stay Motivated: Celebrate small wins and maintain a growth mindset.

Example:

“In my last role, I worked on a year-long project to build a recommendation system. I broke the project into phases (data collection, model development, and deployment) and set quarterly goals. Regular check-ins with my team helped us stay on track, and we successfully launched the system ahead of schedule.”

Tip: Showcase your ability to deliver results over the long term.

Tips to Ace Meta’s ML Interviews

Practice Coding Daily: Use platforms like LeetCode and InterviewNode to sharpen your skills.

Master ML Fundamentals: Review key concepts like bias-variance tradeoff, regularization, and evaluation metrics.

Work on Real-World Projects: Build a portfolio of ML projects to showcase your skills.

Prepare for Behavioral Questions: Use the STAR method to structure your responses.

Leverage InterviewNode: Our platform offers personalized mock interviews, coding challenges, and ML case studies to help you prepare effectively.

Conclusion

Preparing for a machine learning interview at Meta can be daunting, but with the right approach and resources, you can crack it. By mastering the top 25 questions we’ve covered in this blog, you’ll be well on your way to acing your interview. Remember, consistent practice and a deep understanding of ML concepts are key to success.

At InterviewNode, we’re committed to helping you achieve your career goals. Sign up for our free webinar today to take the first step toward landing your dream job at Meta. Good luck!