1. Introduction to Ensemble Learning

Ensemble learning is one of the most consistently effective techniques for improving model performance in machine learning. Whether you're working on a classification problem, like identifying fraudulent transactions, or a regression problem, such as predicting house prices, ensemble methods can often deliver better accuracy and robustness than any single model. But what exactly is ensemble learning, and why is it so effective? In this guide, we’ll break down the key ensemble techniques that make these gains possible.

What is Ensemble Learning?

At its core, ensemble learning combines multiple machine learning models (called base learners, or weak learners when each one is only slightly better than random guessing) into a single, stronger predictor. The underlying idea is that while individual models may not perform perfectly on their own, aggregating their predictions often produces better results, because their individual errors partly cancel out. Ensembles work by reducing variance and bias, two of the three components of a model's expected prediction error; the third, irreducible noise in the data, cannot be removed by any model.

Let’s break down these key concepts:

Variance refers to how much a model’s predictions fluctuate with changes in the training data. Models like deep, unpruned decision trees are prone to high variance, which can lead to overfitting. By averaging multiple models, ensemble methods like bagging can reduce variance.

Bias is the error introduced when a model is too simplistic, leading to underfitting. Techniques like boosting work to reduce bias by sequentially improving weak models.

Ensemble learning is powerful because it addresses these errors, creating models that are more accurate, stable, and generalizable. As a result, it’s no surprise that ensemble methods are widely used in high-stakes applications like credit scoring, fraud detection, healthcare predictions, and more.

Why Use Ensemble Learning?

The primary reason to use ensemble learning is to boost predictive performance. While a single decision tree or neural network can work well on certain tasks, it may fall short on complex datasets, where any one model's assumptions leave some patterns uncaptured. Ensemble methods help by combining models whose strengths and weaknesses complement each other.

Ensemble models can also help with class imbalance, a common challenge in machine learning where one class is heavily overrepresented in the data (for example, in fraud detection, where the vast majority of transactions are legitimate). Boosting algorithms like AdaBoost and Gradient Boosting can be helpful here because they concentrate on hard-to-classify examples, which often include minority-class cases, although in practice they are usually combined with class weighting or resampling.

Overview of Bagging, Boosting, and Stacking

There are several types of ensemble techniques, but the three most widely used in practice are Bagging, Boosting, and Stacking. Each of these methods uses a different approach to model training and prediction:

Bagging trains multiple models independently (and therefore in parallel) on different bootstrap samples of the data, then averages their predictions (or takes a majority vote for classification). Its goal is to reduce variance, so it works best with high-variance base models such as deep decision trees.

Boosting trains models sequentially, with each model focusing on correcting the errors made by its predecessor. Boosting is designed to reduce bias by focusing on the hardest-to-predict data points.

Stacking combines different models, often of different types, and uses a meta-learner to blend their outputs for improved accuracy.

In the following sections, we’ll dive deeper into how each of these methods works and when to use them to maximize the performance of your machine learning models.

2. What is Bagging?

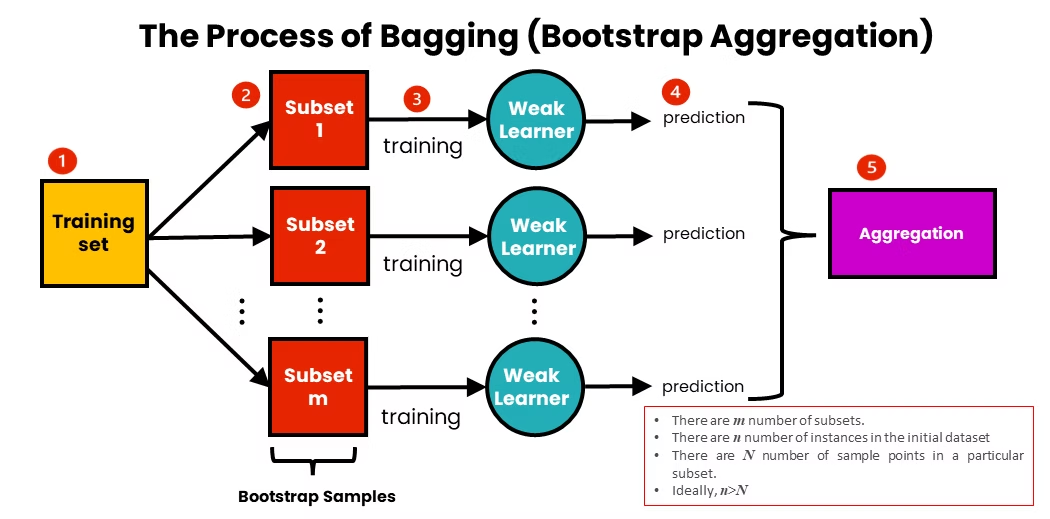

Bagging, short for Bootstrap Aggregating, is one of the most popular ensemble learning techniques used to reduce the variance of machine learning models. Developed by Leo Breiman in the 1990s, Bagging is particularly useful for models that tend to overfit the data, such as decision trees. Its primary goal is to create more robust and generalized models by averaging predictions from multiple base learners.

How Bagging Works

Bagging works by generating multiple versions of a dataset through a process called bootstrapping, and then training a model on each version. The key idea is to create slightly different training datasets by randomly sampling from the original data with replacement. This means that some data points will be used more than once, while others might be left out. By doing this, Bagging creates a diverse set of models, each trained on a different bootstrap sample of the data, which helps reduce the risk of overfitting.
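To make the sampling step concrete, here is a minimal NumPy sketch (the helper name `bootstrap_sample` and the synthetic data are illustrative assumptions, not part of any library). It draws one bootstrap sample and measures what fraction of the original rows it contains; for large datasets this fraction approaches 1 - 1/e, roughly 63.2%, and the rows left out are the so-called out-of-bag samples.

```python
import numpy as np


def bootstrap_sample(X, y, rng):
    """Hypothetical helper: draw n row indices uniformly *with replacement*."""
    n = len(X)
    idx = rng.integers(0, n, size=n)  # duplicates allowed, some rows never drawn
    return X[idx], y[idx], idx


rng = np.random.default_rng(42)
X = np.arange(10_000).reshape(-1, 1)  # synthetic data: one feature, 10,000 rows
y = np.zeros(10_000)

_, _, idx = bootstrap_sample(X, y, rng)
unique_fraction = len(np.unique(idx)) / len(X)
print(f"Distinct original rows in the bootstrap sample: {unique_fraction:.3f}")
```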

Here's a step-by-step breakdown of the Bagging process:

Bootstrap Sampling: From the original training dataset, multiple random samples are created, each with the same size as the original dataset but generated by random sampling with replacement.

Training Multiple Models: A separate model is trained on each bootstrap sample. For example, if Bagging is used with decision trees, each model will be a decision tree trained on a different subset of the data.

Combining Predictions: Once the models are trained, their predictions are combined. For classification problems, the final prediction is usually determined by a majority vote (i.e., the class that most models predict). For regression tasks, the final prediction is the average of the individual model predictions.
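The three steps above can be sketched directly with scikit-learn decision trees. This is an illustrative implementation under simple assumptions (integer class labels 0..k-1, unpruned trees, and hypothetical helper names `fit_bagged_trees` and `predict_majority_vote`); in practice, scikit-learn's `BaggingClassifier` packages the same idea.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier


def fit_bagged_trees(X, y, n_estimators=50, seed=0):
    """Hypothetical helper: steps 1 and 2, one unpruned tree per bootstrap sample."""
    rng = np.random.default_rng(seed)
    n = len(X)
    models = []
    for i in range(n_estimators):
        idx = rng.integers(0, n, size=n)  # bootstrap sample: n rows drawn with replacement
        tree = DecisionTreeClassifier(random_state=i)
        tree.fit(X[idx], y[idx])
        models.append(tree)
    return models


def predict_majority_vote(models, X):
    """Hypothetical helper: step 3, majority vote across trees (labels assumed 0..k-1)."""
    all_preds = np.stack([m.predict(X) for m in models]).astype(int)  # (n_models, n_samples)
    return np.array([np.bincount(column).argmax() for column in all_preds.T])


X, y = make_classification(n_samples=1000, n_features=20, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

models = fit_bagged_trees(X_train, y_train)
y_pred = predict_majority_vote(models, X_test)

single_tree_acc = DecisionTreeClassifier(random_state=0).fit(X_train, y_train).score(X_test, y_test)
bagged_acc = (y_pred == y_test).mean()
print(f"Single tree: {single_tree_acc:.3f}, bagged trees: {bagged_acc:.3f}")
```

Comparing `bagged_acc` with `single_tree_acc` on your own data is a quick sanity check: if the two are close, the base model may be limited more by bias than by variance.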

Averaging these models reduces variance, because the randomness introduced by bootstrapping makes the individual models' errors less correlated with one another, so they partly cancel out. Bagging excels at creating a stable and reliable model, especially when the base models have high variance, as decision trees do.
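The link between correlation and variance reduction can be made precise. Assuming $B$ base models $\hat f_1, \dots, \hat f_B$ whose predictions each have variance $\sigma^2$ and pairwise correlation $\rho$ (notation introduced here for illustration), the variance of their average is:

$$
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B} \hat f_b(x)\right) = \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2
$$

As $B$ grows, the second term vanishes but the first does not, so averaging alone cannot push variance below $\rho\sigma^2$. For example, with $\sigma^2 = 1$, $\rho = 0.3$, and $B = 100$, the variance drops from $1$ to $0.3 + 0.7/100 = 0.307$. Lowering $\rho$, which is what bootstrapping (and, in Random Forest, feature subsampling) aims to do, is what allows further reductions.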

Random Forest: A Bagging Example

One of the most famous applications of Bagging is the Random Forest algorithm, which is essentially an ensemble of decision trees. In a Random Forest, multiple decision trees are trained on different bootstrapped datasets, and each tree makes predictions independently. These predictions are then aggregated to form the final output.

What sets Random Forest apart is that, in addition to bootstrapping the data, it considers only a random subset of features at each split in each tree, further increasing the diversity among the trees and reducing the likelihood of overfitting.

Key steps of Random Forest:

Random Sampling of Data: Bootstrapped samples of the data are used to train each decision tree.

Random Feature Selection: Instead of considering all features at each split, Random Forest only looks at a random subset of features. This leads to a more diverse set of trees.

Majority Voting (Classification) or Averaging (Regression): The predictions from all the decision trees are combined by voting (for classification) or averaging (for regression) to make the final prediction.
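In scikit-learn, these steps map onto a handful of `RandomForestClassifier` parameters. The sketch below uses a synthetic dataset from `make_classification` purely for illustration, and the parameter values are reasonable starting points rather than tuned choices. One implementation detail worth knowing: scikit-learn combines trees by averaging their predicted class probabilities rather than taking a hard majority vote.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

X, y = make_classification(n_samples=1000, n_features=20, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

forest = RandomForestClassifier(
    n_estimators=200,     # number of trees, each fit on its own bootstrap sample
    max_features="sqrt",  # random feature subset considered at each split
    bootstrap=True,       # sample rows with replacement for each tree
    oob_score=True,       # score each row using only trees that never saw it
    n_jobs=-1,            # trees are independent, so train them in parallel
    random_state=0,
)
forest.fit(X_train, y_train)
test_accuracy = forest.score(X_test, y_test)
print(f"OOB accuracy: {forest.oob_score_:.3f}, test accuracy: {test_accuracy:.3f}")
```

The out-of-bag score reuses each tree's left-out rows as a built-in validation set, giving a rough generalization estimate without a separate holdout.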

Random Forest has become a go-to algorithm for many machine learning tasks, particularly on tabular data. Its ability to scale to large datasets, resist overfitting, and (in many implementations) handle missing values makes it incredibly versatile.

Advantages of Bagging

Reduction in Variance: By averaging predictions across multiple models, Bagging helps reduce the variance, making the final model more stable and less likely to overfit the training data.

Robustness: Because the ensemble is less sensitive to the quirks of any single training sample, it typically generalizes better to unseen data than a single high-variance model.

Parallelization: Bagging can train models independently, making it easy to parallelize the process and handle large datasets efficiently.

Limitations of Bagging

Less Effective for Bias Reduction: While Bagging is excellent for reducing variance, it doesn’t directly address bias. If the base model is highly biased, Bagging will not improve its performance significantly.

Computational Cost: Training multiple models, especially when the base learners are complex (e.g., deep decision trees), can be computationally expensive, though this can be mitigated by parallelization.

Real-World Applications of Bagging

Bagging, and especially Random Forest, has found widespread use in real-world applications where accuracy and stability are crucial:

Fraud Detection: In financial services, Bagging is often used to detect fraudulent transactions. By using Random Forests, companies can improve their ability to identify suspicious activities while reducing false positives.

Credit Scoring: Lenders use Bagging to predict the likelihood of loan defaults by analyzing historical loan data. Random Forest's robustness makes it ideal for handling noisy, complex datasets in this domain.

Healthcare: Bagging techniques are also applied in healthcare for disease prediction and outcome forecasting, where reducing overfitting is critical for making reliable predictions.

3. What is Boosting?

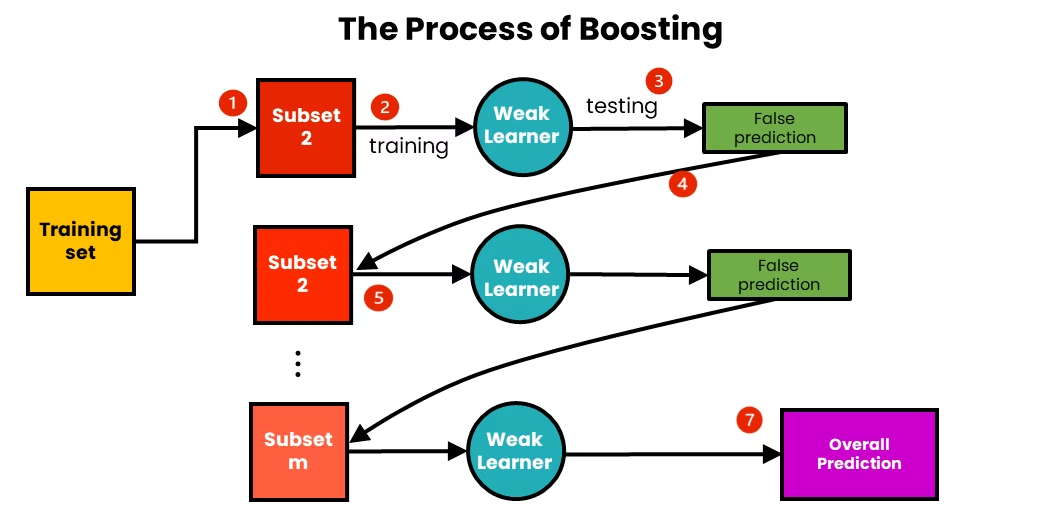

Boosting is another powerful ensemble learning technique, but it takes a fundamentally different approach from Bagging. While Bagging reduces variance by averaging multiple models trained in parallel, Boosting focuses on reducing bias. Boosting does this by sequentially training models, with each new model attempting to correct the errors made by the previous ones. This iterative process leads to the creation of a strong learner from many weak ones.

Boosting shines in scenarios where the base model is too simple to capture the underlying patterns in the data. By focusing more on the examples that are harder to classify correctly, boosting improves the performance of these weak models, making the ensemble much more accurate.

How Boosting Works

The main idea behind Boosting is to sequentially train weak learners, where each learner focuses on the mistakes of its predecessor. The general workflow of Boosting involves the following steps:

Initial Model Training: The process starts by training a weak model (e.g., a shallow decision tree) on the entire dataset. This model makes predictions, but since it’s a weak learner, it’s likely to misclassify some instances.

Error Weighting: In AdaBoost-style boosting, higher weights are assigned to the data points that the previous model misclassified, so the next model pays more attention to them and focuses on the "harder" examples in the dataset. (Gradient Boosting achieves a similar effect differently, by fitting each new model to the errors that remain.)

Sequential Model Training: A new weak learner is trained, this time on the reweighted data where misclassified examples carry more importance. The new model tries to correct the errors made by the models trained so far.

Final Prediction: Once all the weak learners are trained, their predictions are combined as a weighted sum. Unlike Bagging, where all models are weighted equally, AdaBoost gives higher weights to models that perform better, while Gradient Boosting scales every model's contribution by a learning rate.

This sequential and adaptive nature of Boosting helps it outperform other methods in many cases, especially in scenarios where high accuracy is critical, such as healthcare or finance.

Key Boosting Algorithms

There are several algorithms under the Boosting umbrella, each with unique advantages. Let's explore the most commonly used ones:

AdaBoost (Adaptive Boosting)

AdaBoost, or Adaptive Boosting, was one of the first practical Boosting algorithms and was designed primarily for binary classification problems. It works by adjusting the weights of misclassified examples after each round of learning. AdaBoost builds a series of models (often very shallow decision trees), each correcting the mistakes of the previous ones, and then combines them into a strong classifier.

How AdaBoost works:

Initially, all instances in the dataset are given equal weights.

After training the first model, AdaBoost increases the weights of the misclassified instances.

Subsequent models focus more on these difficult-to-classify instances, leading to improvements over time.

The final prediction is a weighted vote based on the performance of each model.
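These steps can be written out in a few lines. The following is a minimal sketch of discrete (binary) AdaBoost with decision stumps, assuming labels in {-1, +1}; the function names `adaboost_fit` and `adaboost_predict` are hypothetical, and the model weight `alpha = 0.5 * ln((1 - err) / err)` is the standard choice for this variant.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier


def adaboost_fit(X, y, n_rounds=50):
    """Hypothetical helper: discrete AdaBoost with stumps; y must be in {-1, +1}."""
    n = len(X)
    w = np.full(n, 1.0 / n)  # initially, every instance gets equal weight
    stumps, alphas = [], []
    for _ in range(n_rounds):
        stump = DecisionTreeClassifier(max_depth=1, random_state=0)
        stump.fit(X, y, sample_weight=w)  # train on the current weighting
        pred = stump.predict(X)
        # Weighted error rate (weights sum to 1); clipped to avoid log(0).
        err = np.clip(w[pred != y].sum(), 1e-10, 1 - 1e-10)
        alpha = 0.5 * np.log((1 - err) / err)  # more accurate stumps get a larger say
        w *= np.exp(-alpha * y * pred)  # up-weight mistakes, down-weight correct hits
        w /= w.sum()  # renormalize to a probability distribution
        stumps.append(stump)
        alphas.append(alpha)
    return stumps, np.array(alphas)


def adaboost_predict(stumps, alphas, X):
    """Hypothetical helper: sign of the alpha-weighted vote."""
    scores = sum(a * s.predict(X) for s, a in zip(stumps, alphas))
    return np.where(scores >= 0, 1, -1)


X, y01 = make_classification(n_samples=500, n_features=10, random_state=1)
y = np.where(y01 == 1, 1, -1)  # map {0, 1} labels to {-1, +1}
stumps, alphas = adaboost_fit(X, y)
train_accuracy = (adaboost_predict(stumps, alphas, X) == y).mean()
print(f"Training accuracy: {train_accuracy:.3f}")
```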

Advantages of AdaBoost:

Simple and effective for binary classification tasks.

Works well with weak learners, particularly decision trees with a single split (often referred to as decision stumps).

Easily implemented with scikit-learn's AdaBoostClassifier in Python.
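For everyday use, the library version is simpler. This sketch assumes a recent scikit-learn release; when no base estimator is passed, `AdaBoostClassifier` defaults to a depth-1 decision tree (a stump). The `learning_rate` value is an illustrative choice that shrinks each model's contribution.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import cross_val_score

X, y = make_classification(n_samples=500, n_features=10, random_state=1)

# No base estimator given, so scikit-learn boosts decision stumps by default.
ada = AdaBoostClassifier(n_estimators=100, learning_rate=0.5, random_state=0)
scores = cross_val_score(ada, X, y, cv=5)  # 5-fold cross-validated accuracy
print(f"Mean 5-fold accuracy: {scores.mean():.3f}")
```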

Limitations:

Sensitive to noisy data and outliers. Since misclassified instances are given more weight, AdaBoost can focus too much on outliers, which may degrade overall performance.

Gradient Boosting

Gradient Boosting generalizes the Boosting idea to arbitrary differentiable loss functions. Instead of reweighting examples like AdaBoost, it fits each new model to the negative gradient of the loss with respect to the current ensemble's predictions; for squared-error loss in regression, this negative gradient is simply the residual (the difference between the true values and the combined predictions of all previous models). Adding each new model therefore amounts to a step of gradient descent in function space.
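In symbols, assuming a differentiable loss $L$, a current ensemble $F_{m-1}$, and a learning rate $\nu$ (notation introduced here for illustration), round $m$ computes pseudo-residuals for each training point, fits a new model $h_m$ to the pairs $(x_i, r_{im})$, and takes a small step in that direction:

$$
r_{im} = -\left[\frac{\partial L\big(y_i, F(x_i)\big)}{\partial F(x_i)}\right]_{F = F_{m-1}}, \qquad F_m(x) = F_{m-1}(x) + \nu\, h_m(x)
$$

For squared-error loss $L = \tfrac{1}{2}(y - F)^2$, the pseudo-residual is exactly $r_{im} = y_i - F_{m-1}(x_i)$, the ordinary residual, which is why Gradient Boosting for regression is often described as "fitting the residuals".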

How Gradient Boosting works:

A weak learner is first trained on the dataset, and its residual errors (the difference between actual and predicted values) are computed.

The next model is trained to predict these residuals, effectively correcting the errors of the previous model.

This process is repeated, with each model focusing on reducing the residuals left by its predecessors.
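Here is a minimal from-scratch sketch of these steps for squared-error regression, assuming scikit-learn's `DecisionTreeRegressor` as the weak learner and a hypothetical helper pair `gradient_boost_fit` / `gradient_boost_predict`. It starts from a constant (mean) prediction, repeatedly fits a shallow tree to the current residuals, and adds a shrunken copy of that tree's output.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor


def gradient_boost_fit(X, y, n_rounds=100, learning_rate=0.1, max_depth=2):
    """Hypothetical helper: gradient boosting for squared-error regression."""
    f0 = y.mean()  # initial constant prediction
    pred = np.full(len(y), f0)
    trees = []
    for _ in range(n_rounds):
        residuals = y - pred  # negative gradient of 0.5 * (y - F)^2
        tree = DecisionTreeRegressor(max_depth=max_depth, random_state=0)
        tree.fit(X, residuals)  # the next model learns the remaining errors
        pred += learning_rate * tree.predict(X)  # shrunken step toward the residuals
        trees.append(tree)
    return {"f0": f0, "learning_rate": learning_rate, "trees": trees}


def gradient_boost_predict(model, X):
    """Hypothetical helper: initial constant plus the sum of shrunken tree outputs."""
    return model["f0"] + model["learning_rate"] * sum(t.predict(X) for t in model["trees"])


rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(300, 1))
y = np.sin(X[:, 0]) + rng.normal(scale=0.1, size=300)  # noisy synthetic target

model = gradient_boost_fit(X, y)
mse_initial = np.mean((y - model["f0"]) ** 2)
mse_final = np.mean((y - gradient_boost_predict(model, X)) ** 2)
print(f"Training MSE: {mse_initial:.3f} -> {mse_final:.3f}")
```

The small learning rate means each tree corrects only part of the remaining error, which usually requires more rounds but tends to generalize better than taking full steps.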

Gradient Boosting has given rise to many efficient implementations, with XGBoost and LightGBM being the most notable. These frameworks have become widely popular in data science competitions due to their ability to handle large datasets and provide top-tier performance.

XGBoost

XGBoost (Extreme Gradient Boosting) is a highly optimized version of Gradient Boosting designed for speed and performance. It offers features like regularization (to prevent overfitting), parallelization, and efficient handling of missing data, making it a top choice for competitive machine learning.

Advantages:

Handles large datasets and complex models efficiently.

Provides better control over overfitting with regularization techniques.

Parallelizes split finding within each tree (the trees themselves are still built sequentially), making it much faster than traditional Gradient Boosting implementations.

Advantages of Boosting

Reduces Bias: Boosting is excellent at reducing bias by turning weak learners into a strong ensemble. This makes it a great choice when your model struggles with underfitting.

Improves Accuracy: By focusing on misclassified data points and refining the model sequentially, Boosting often outperforms other methods in terms of accuracy.

Helps with Imbalanced Datasets: Because it concentrates on difficult-to-classify examples, Boosting often performs well on imbalanced datasets, especially when combined with class weighting or resampling.

Limitations of Boosting

Sensitive to Overfitting: Since Boosting gives more weight to hard-to-classify examples, it can sometimes overfit to noise or outliers in the dataset, especially if not properly regularized.

Computational Complexity: Boosting requires sequential training, which means it is harder to parallelize and can be slower than Bagging methods, particularly on large datasets.
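Several common safeguards against Boosting overfitting are exposed as parameters of scikit-learn's `GradientBoostingClassifier`. The sketch below is illustrative, not tuned: it adds label noise (`flip_y`) to a synthetic dataset and combines shrinkage, shallow trees, row subsampling, and early stopping on an internal validation split.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import train_test_split

# flip_y randomly flips 10% of labels to simulate noisy data.
X, y = make_classification(n_samples=2000, n_features=20, flip_y=0.1, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

gbm = GradientBoostingClassifier(
    n_estimators=1000,        # upper bound; early stopping typically halts sooner
    learning_rate=0.05,       # shrinkage: smaller steps per tree
    max_depth=3,              # shallow trees keep each learner weak
    subsample=0.8,            # stochastic gradient boosting: 80% of rows per tree
    validation_fraction=0.1,  # hold out 10% of the training data...
    n_iter_no_change=20,      # ...and stop after 20 rounds without improvement
    random_state=0,
)
gbm.fit(X_train, y_train)
print(f"Trees actually fitted: {gbm.n_estimators_}, test accuracy: {gbm.score(X_test, y_test):.3f}")
```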

Real-World Applications of Boosting

Boosting has found wide application in various fields due to its ability to handle complex datasets and deliver high accuracy. Some common use cases include:

Healthcare Predictions: Boosting algorithms are used to predict patient outcomes, classify diseases, and improve medical diagnoses by focusing on harder-to-classify cases.

Marketing and Customer Segmentation: Gradient Boosting algorithms are employed to predict customer behavior, such as which segment a customer belongs to or how likely they are to respond to a campaign, based on purchasing behavior, demographics, and preferences, helping companies target marketing efforts more effectively.

Finance: In credit scoring and risk assessment, Boosting algorithms help improve the accuracy of predicting loan defaults and assessing creditworthiness.

4. What is Stacking?

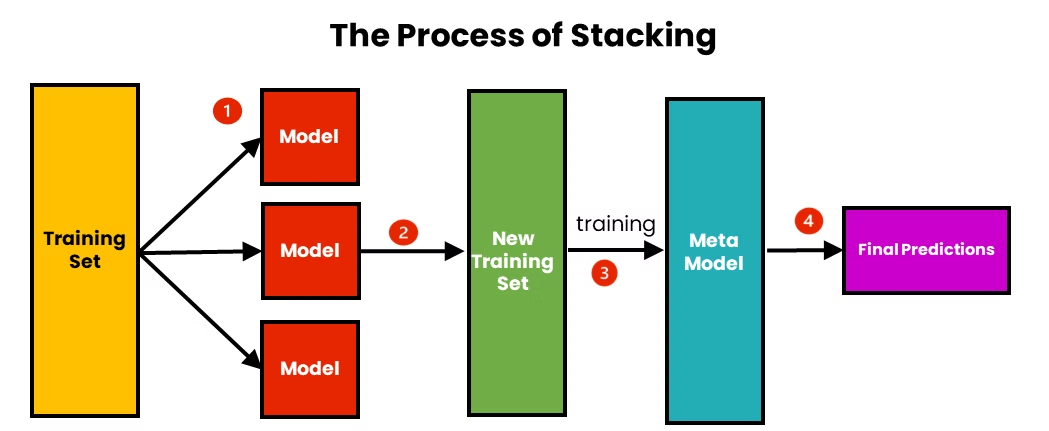

Stacking, or Stacked Generalization, is a more advanced ensemble learning technique that differs from Bagging and Boosting in that it combines predictions from multiple heterogeneous models. While Bagging and Boosting typically use a collection of similar models (e.g., decision trees), Stacking takes a more diverse approach by leveraging models of different types, such as decision trees, support vector machines (SVMs), and neural networks. These models are trained independently, and a meta-learner then combines their outputs to produce a final prediction.

In Stacking, the focus is on blending models of various strengths to maximize predictive accuracy, often leading to better results than individual models or homogeneous ensembles. This technique is frequently used in machine learning competitions like Kaggle, where high accuracy is essential, and optimizing multiple models together can offer a performance edge.

How Stacking Works

The Stacking process involves two layers:

Base Models: A set of different models (the base learners) is trained independently on the training data. These models can be of different types, such as decision trees, linear regression, SVMs, or neural networks. Each model then generates predictions on data it was not trained on: either a held-out validation set or, more commonly, out-of-fold predictions produced by k-fold cross-validation.

Meta-Learner: The predictions from the base models are used as input to a higher-level model called the meta-learner (often a simpler model like logistic regression). The meta-learner is trained to combine the predictions from the base models and make the final prediction.

For example, in a classification problem, you might train a decision tree, a k-nearest neighbors (KNN) model, and an SVM as your base models. These models will make predictions on the validation data, and their outputs (the predicted probabilities or classes) will be fed into the meta-learner. The meta-learner will then make the final decision based on these inputs.

Key steps in the Stacking process:

Train several base models on the training data.

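Generate predictions from the base models on a holdout (validation) set, or as out-of-fold predictions via cross-validation.

Train a meta-model using the predictions of the base models as input features.

At prediction time, feed the base models' predictions on the test data into the meta-model to produce the final predictions.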
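These steps can be implemented by hand with scikit-learn's `cross_val_predict`, which makes it clear where data leakage is avoided. This is a minimal sketch under illustrative assumptions (a synthetic binary dataset, three arbitrary base models, and logistic regression as the meta-learner); it uses out-of-fold predictions instead of a single holdout set so that every training row contributes a meta-feature.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_predict, train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=1000, n_features=20, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

base_models = [
    DecisionTreeClassifier(max_depth=5, random_state=0),
    KNeighborsClassifier(n_neighbors=15),
    SVC(probability=True, random_state=0),
]

# Out-of-fold probabilities: each row's meta-feature comes from a model
# that did not see that row during fitting (cross_val_predict clones the models).
meta_train = np.column_stack([
    cross_val_predict(m, X_train, y_train, cv=5, method="predict_proba")[:, 1]
    for m in base_models
])

# Refit each base model on the full training set for use at prediction time.
for m in base_models:
    m.fit(X_train, y_train)
meta_test = np.column_stack([m.predict_proba(X_test)[:, 1] for m in base_models])

# The meta-learner learns how much to trust each base model.
meta_learner = LogisticRegression().fit(meta_train, y_train)

# Final predictions on the test data.
y_pred = meta_learner.predict(meta_test)
print(f"Stacked test accuracy: {(y_pred == y_test).mean():.3f}")
```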

Why Use Stacking?

Stacking's strength comes from its ability to combine the unique insights provided by different algorithms. For example:

A decision tree may excel at capturing non-linear relationships in the data, but it could overfit.

A linear model like logistic regression may generalize better but might miss complex patterns.

An SVM might offer strong performance on smaller datasets but could struggle with large, noisy data.

By stacking these models together, you leverage their individual strengths and mitigate their weaknesses, leading to a more accurate and robust final prediction.

Meta-Learners in Stacking

The role of the meta-learner is crucial in Stacking, as it determines how well the outputs of the base models are combined. Common choices for the meta-learner include:

Logistic Regression: Often used for binary classification problems as a simple yet effective way to combine the base models' outputs.

Linear Regression: For regression problems, linear regression is commonly used as the meta-learner.

Gradient Boosting Machines (GBMs): More complex meta-learners like gradient boosting models can also be employed to maximize the ensemble's accuracy, especially in competitions where small improvements can make a significant difference.
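scikit-learn wraps the whole procedure, including the out-of-fold step, in `StackingClassifier` (with `StackingRegressor` as the regression counterpart). The sketch below is illustrative: the choice of base models and the scaling pipelines for the distance- and margin-based models are assumptions, and `final_estimator` is where you would swap logistic regression for a more complex meta-learner such as a gradient boosting model.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = make_classification(n_samples=1000, n_features=20, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

stack = StackingClassifier(
    estimators=[
        ("rf", RandomForestClassifier(n_estimators=100, random_state=0)),
        ("knn", make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=15))),
        ("svm", make_pipeline(StandardScaler(), SVC(probability=True, random_state=0))),
    ],
    final_estimator=LogisticRegression(),  # simple meta-learner
    cv=5,                                  # meta-features are out-of-fold predictions
    stack_method="predict_proba",          # feed probabilities, not hard labels
)
stack.fit(X_train, y_train)
print(f"Stacked test accuracy: {stack.score(X_test, y_test):.3f}")
```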

Advantages of Stacking

Improved Accuracy: When well implemented, Stacking can outperform Bagging or Boosting alone, because it leverages the strengths of multiple algorithms. By combining diverse models, Stacking can capture different aspects of the data that individual models might miss.

Flexibility: Unlike Bagging and Boosting, which usually rely on a specific model type (e.g., decision trees), Stacking allows for the combination of any type of model, providing flexibility and greater experimentation in choosing the best algorithms for the task.

Reduced Overfitting: Because the meta-learner combines several base models rather than relying on one, the ensemble is less likely to overfit, provided that the meta-learner is trained on out-of-fold (or held-out) predictions and the models are properly tuned and validated.

Limitations of Stacking

Computational Complexity: Stacking can be computationally expensive since it involves training multiple models and a meta-learner. Depending on the size of the dataset and the complexity of the base models, the training time can increase significantly.

Difficult to Tune: Tuning a stacked ensemble can be more challenging than tuning simpler models. Finding the right balance between base models and the meta-learner requires extensive experimentation and cross-validation.

Risk of Overfitting: If not carefully tuned, Stacking can still lead to overfitting, particularly if the meta-learner becomes too complex or if the base models are not properly validated.

Real-World Applications of Stacking

Stacking is commonly used in scenarios where high accuracy is paramount and computational resources are less of a concern. Some notable applications include:

AI Competitions: Stacking is frequently employed in machine learning competitions, such as those on Kaggle, where competitors use multiple models to gain even slight improvements in accuracy.

Finance: In financial modeling, Stacking can be used to combine different risk models or trading strategies, leading to more reliable predictions of market trends.

Healthcare: Stacking has also been applied in healthcare for disease classification tasks, where combining models trained on different types of clinical data (e.g., patient records, genetic data) can provide better diagnostic accuracy.

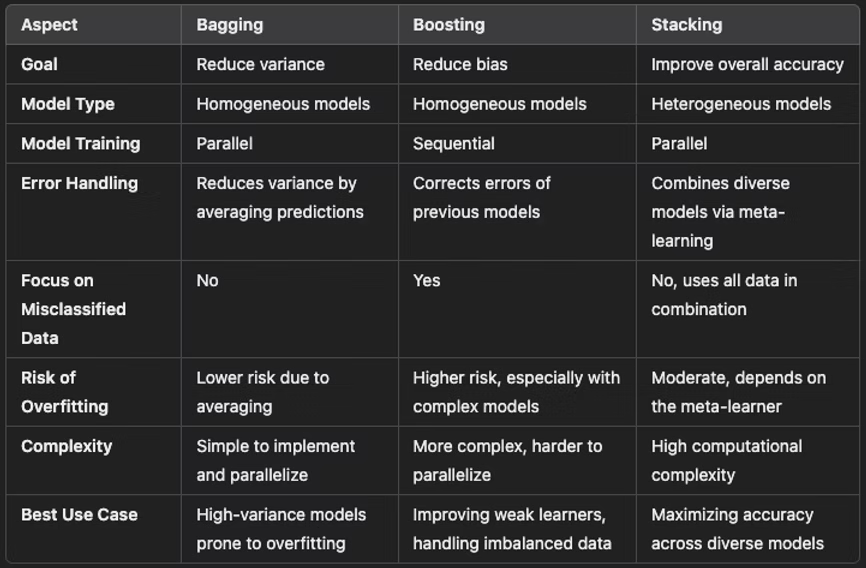

5. Bagging vs. Boosting vs. Stacking: A Comparative Analysis

In this section, we’ll compare Bagging, Boosting, and Stacking, highlighting their similarities, differences, and when to use each technique. Understanding the right context for these ensemble methods will help machine learning practitioners optimize their models for specific goals like reducing bias, lowering variance, or maximizing prediction accuracy.

Key Differences Between Bagging, Boosting, and Stacking

Bagging: When to Use It

Goal: Use Bagging when the primary problem is variance—for instance, when models like decision trees are prone to overfitting. Bagging is excellent for models that fluctuate heavily with changes in the training data.

Use Cases: Ideal for datasets where accuracy depends on reducing overfitting, such as in fraud detection, credit scoring, and bioinformatics. Random Forest, a bagging-based algorithm, is widely used in these areas.

Example Algorithms: Random Forest, Bagged Decision Trees.

Boosting: When to Use It

Goal: Boosting is used to reduce bias, particularly when individual models are too simplistic to capture complex patterns. Boosting sequentially improves models by focusing on the hardest-to-predict data points.

Use Cases: It often performs well on highly imbalanced datasets (e.g., rare disease classification, fraud detection), particularly when combined with class weighting, and in scenarios where precision and recall are critical. It’s often used in healthcare, marketing, and finance to make fine-tuned predictions.

Example Algorithms: AdaBoost, Gradient Boosting, XGBoost, LightGBM.

Stacking: When to Use It

Goal: Use Stacking to maximize accuracy by combining multiple models of different types. Stacking is most useful when individual models capture different patterns in the data.

Use Cases: Stacking is frequently employed in machine learning competitions, such as Kaggle, where participants combine multiple models (e.g., decision trees, SVMs, and neural networks) to squeeze out every last bit of predictive power. It is also used in high-stakes scenarios like financial market predictions and complex diagnostic tasks in healthcare.

Example Algorithms: Stacking can be used with any combination of models, such as Decision Trees, Neural Networks, and SVMs combined with a meta-learner.

Decision Framework for Choosing the Right Method

To help choose between these techniques, here’s a simple framework:

Use Bagging if: Your model has high variance and overfitting is a concern. Bagging is great when you need a stable, generalized model for prediction tasks like fraud detection or credit scoring.

Use Boosting if: Your model suffers from underfitting or bias. Boosting shines when you need to fine-tune predictions and improve weak models, especially in cases with imbalanced datasets or when you need higher precision.

Use Stacking if: You want to combine the strengths of different models for higher accuracy. Stacking is ideal when multiple models give varying predictions, and you need a meta-learner to integrate them for optimal results.

6. Real-World Applications of Ensemble Learning

Ensemble learning is widely used across industries to improve predictive accuracy and model robustness. Here are some prominent real-world applications of Bagging, Boosting, and Stacking.

Finance and Banking

Credit Scoring: Financial institutions use Random Forest (Bagging) and Boosting algorithms like XGBoost to assess credit risk, predicting whether an individual will default on a loan. Ensemble learning helps improve accuracy by combining different models trained on customer history, credit data, and behavioral patterns.

Fraud Detection: In fraud detection, ensemble methods are used to identify unusual transactions. Bagging-based models such as Random Forest produce stable classifiers that are less prone to spurious alerts, while Boosting concentrates on hard-to-classify transactions, which can help catch subtle fraud patterns. Models like Random Forest and Gradient Boosting are commonly used to predict fraudulent activities.

Healthcare

Disease Prediction: Boosting techniques like XGBoost and LightGBM are widely used in healthcare to predict patient outcomes and classify diseases. For example, boosting algorithms can be applied to features derived from radiology images and patient records to help flag likely cancer cases.

Outcome Forecasting: Stacking is applied in outcome forecasting where multiple sources of patient data (genomic data, clinical records, etc.) are combined to generate more accurate health predictions. By stacking models like neural networks and decision trees, healthcare providers can better predict patient survival rates or treatment responses.

E-commerce and Marketing

Customer Segmentation: Marketers use Boosting to predict which segment a customer belongs to, based on purchasing behavior, demographics, and preferences. By focusing on difficult-to-classify customers, boosting algorithms like Gradient Boosting help e-commerce platforms target their marketing efforts effectively.

Recommendation Systems: Stacking is employed in recommendation systems (e.g., Netflix, Amazon) where diverse models—like collaborative filtering, content-based algorithms, and neural networks—are combined to provide personalized product recommendations.

7. Interview Questions on Bagging, Boosting, and Stacking

For software engineers preparing for machine learning interviews at top companies, it’s important to be familiar with commonly asked questions about ensemble learning techniques. Below are sample interview questions along with brief explanations to help candidates prepare.

Bagging Interview Questions

What is Bagging and how does it reduce overfitting?

Answer: Bagging reduces overfitting by averaging predictions from multiple models trained on different bootstrapped datasets. It reduces variance, making the model more stable on unseen data.

How does Random Forest improve accuracy compared to a single decision tree?

Answer: Random Forest trains many decision trees on bootstrap samples and considers only a random subset of features at each split, which decorrelates the trees. Averaging their predictions reduces variance, whereas a single deep decision tree tends to overfit the training data.

In what scenarios would you prefer Bagging over Boosting?

Answer: Bagging is preferred when the model has high variance (e.g., decision trees) and you want to stabilize predictions, while Boosting is better for reducing bias in underfitting models.

Boosting Interview Questions

Can you explain how AdaBoost works?

Answer: AdaBoost adjusts the weights of misclassified data points after each round of learning, focusing subsequent models on harder-to-predict examples. The final model combines the weighted predictions of all weak learners.

What are the key differences between Gradient Boosting and XGBoost?

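Answer: XGBoost is an optimized implementation of Gradient Boosting. It adds explicit regularization of tree complexity to reduce overfitting, uses second-order gradient information when building trees, handles missing values natively, and parallelizes split finding within each tree to handle large datasets efficiently.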

What are the risks of overfitting with Boosting, and how can you mitigate them?

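Answer: Boosting can overfit when too many models are added or when the data is noisy. To mitigate this, you can use a smaller learning rate (shrinkage), subsample rows at each iteration, apply early stopping on a validation set, add regularization, or limit the depth of the trees used in each iteration.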

Stacking Interview Questions

How does Stacking differ from Bagging and Boosting?

Answer: Stacking combines heterogeneous models (e.g., decision trees, SVMs) using a meta-learner, whereas Bagging and Boosting typically use homogeneous models. Stacking focuses on combining different types of models to improve accuracy.

Explain how a meta-learner works in a stacking ensemble.

Answer: The meta-learner is trained on the base models' predictions (ideally out-of-fold predictions, so it never sees outputs the base models produced on their own training data), learning how best to combine them into the final prediction. It is typically a simple model, such as logistic regression.

What are the computational challenges associated with Stacking?

Answer: Stacking can be computationally expensive due to the need to train multiple models and a meta-learner. This process also requires careful tuning to avoid overfitting.

8. Challenges and Future Directions of Ensemble Learning

Challenges

Computational Complexity: Ensemble methods, particularly Stacking and Boosting, can be computationally intensive. Training many models, whether independently (Bagging and Stacking's base models) or sequentially (Boosting), requires significant resources and leads to slower runtimes, especially on large datasets. Stacking adds an extra layer of complexity, since both the base models and the meta-learner must be trained and tuned.

Overfitting: While ensemble methods aim to reduce errors, they can also introduce overfitting, especially in Boosting. When Boosting focuses too much on hard-to-classify examples or outliers, it risks overfitting to the training data. Similarly, poorly tuned Stacking models can overfit if the meta-learner does not generalize well.

Hyperparameter Tuning: Ensemble models require careful tuning of hyperparameters. For example, Random Forest involves tuning parameters like the number of trees, while Boosting requires the selection of learning rates and maximum tree depths. Stacking can be even more complex since both base models and meta-learners must be tuned, often requiring substantial computational power and expertise.

Future Directions

Hybrid Approaches: The future of ensemble learning may see more hybrid approaches that combine elements of Bagging, Boosting, and Stacking. Hybrid methods aim to leverage the strengths of each technique while mitigating their individual weaknesses, leading to more robust and efficient models.

Efficient Boosting Techniques: Researchers are working on new Boosting techniques that are more efficient in terms of both computation and memory usage. For example, CatBoost, a relatively new boosting algorithm, is optimized for categorical data and is designed to reduce overfitting and computational costs.

Automated Model Selection: Automated machine learning (AutoML) platforms are likely to integrate ensemble methods more extensively, automatically choosing between Bagging, Boosting, and Stacking based on the dataset and problem type, and further democratizing the use of these advanced techniques.

Explainability: As ensemble methods become more widely used, there is a growing need for explainable AI. Techniques are being developed to make the predictions of complex ensembles, such as Stacking or Boosting, more interpretable, especially in sensitive fields like healthcare and finance.

9. Conclusion

Ensemble learning has transformed the way we approach machine learning, offering significant improvements in accuracy, robustness, and model generalization. By combining multiple models through techniques like Bagging, Boosting, and Stacking, data scientists can reduce both bias and variance, creating more reliable predictions across a wide range of applications.

Bagging is ideal for reducing variance by training models in parallel and averaging their predictions, with Random Forest being one of its most popular applications.

Boosting excels at reducing bias by focusing on hard-to-classify examples and refining models through sequential learning. Algorithms like AdaBoost, Gradient Boosting, and XGBoost are widely used in both industry and academia.

Stacking combines different types of models to improve accuracy, making it a powerful tool for complex prediction tasks where no single model performs optimally on its own.

As ensemble learning continues to evolve, it will remain an essential tool in the data scientist's toolkit, driving advances in predictive modeling across industries like healthcare, finance, e-commerce, and beyond. Whether you're tackling imbalanced datasets, complex classification problems, or high-stakes predictive tasks, Bagging, Boosting, and Stacking offer versatile and powerful solutions.