Introduction

Artificial intelligence (AI) and machine learning (ML) are transforming industries globally, and as these technologies evolve, the need for transparency and interpretability in AI models is becoming increasingly critical.

As AI models are integrated into essential sectors such as finance, healthcare, and legal systems, companies are being held accountable for the decisions these systems make. Explainable AI (XAI), which aims to make the decision-making process of AI systems transparent, is now an integral part of AI and ML development, and it is increasingly emphasized in ML interviews.

For aspiring machine learning engineers, the ability to work with and explain AI models is now a must-have skill, especially when interviewing with top-tier tech companies like Google, Amazon, and Facebook. In this blog, we’ll explore why Explainable AI is gaining traction in the ML interview landscape and provide concrete data points and examples of real interview experiences from candidates.

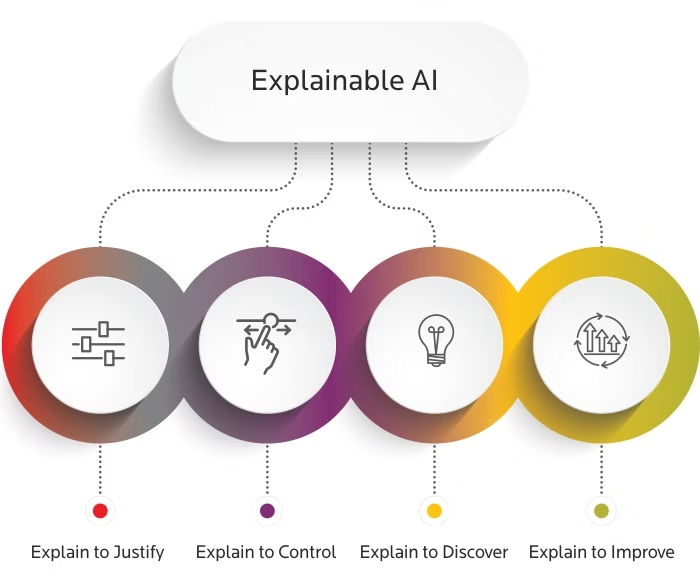

1. What is Explainable AI (XAI)?

Explainable AI (XAI) refers to methods and techniques designed to make the workings of machine learning models comprehensible to human users. Traditional AI systems, especially those based on deep learning, have often been criticized as "black boxes" because it’s difficult to explain how they arrive at specific decisions. XAI methods aim to clarify this by breaking down complex models, showing how different features influence predictions, and revealing any inherent biases.

At its core, XAI enables stakeholders—be they end-users, data scientists, or regulators—to understand, trust, and effectively use AI systems. This transparency is crucial in industries like healthcare, where the rationale behind a machine learning model's diagnosis can directly impact a patient’s treatment. Other key industries driving the demand for XAI include autonomous vehicles, financial services, and criminal justice, where biases in AI models can have severe consequences.

Moreover, tools like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) allow developers to interpret black-box models by visualizing feature importance and explaining predictions in terms of input variables (Harvard Technology Review). These tools are increasingly being incorporated into real-world applications and are tested in interview scenarios for ML candidates.
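To make "explaining predictions in terms of input variables" concrete, here is a minimal sketch of the Shapley-value idea that SHAP builds on, written in plain Python. The scoring function `toy_score`, its feature names, and the all-zeros baseline are made up for illustration. The real `shap` library relies on faster approximations and a background dataset rather than this brute-force enumeration, so treat this as a teaching aid only.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one prediction.

    Features outside a coalition are replaced by their baseline value.
    The cost grows as 2^n, so this only suits a handful of features.
    """
    n = len(x)

    def value(coalition):
        # Model output when only the features in `coalition` keep their real values.
        mixed = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(mixed)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Standard Shapley weight for a coalition of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                members = set(subset)
                # Marginal contribution of feature i when it joins the coalition.
                phi[i] += weight * (value(members | {i}) - value(members))
    return phi


# Hypothetical credit-style scoring function with an interaction term.
def toy_score(features):
    income, debt, years_employed = features
    return 0.5 * income - 0.8 * debt + 0.1 * income * years_employed


applicant = [4.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]  # assumed reference input, chosen only for this example

phi = shapley_values(toy_score, applicant, baseline)
for name, contribution in zip(["income", "debt", "years_employed"], phi):
    print(f"{name:>15}: {contribution:+.3f}")
```

The contributions add up to the difference between the applicant's score and the baseline score, which is why each number can be read as that feature's share of the prediction.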

2. The Growing Importance of XAI in Machine Learning

In the context of AI, the need for explainability is driven by both ethical considerations and regulatory requirements. For example, the European Union's General Data Protection Regulation (GDPR) is widely interpreted as giving individuals a right to meaningful information about the logic behind automated decisions that significantly affect them, often summarized as a "right to explanation." This has placed XAI at the forefront of AI development, as companies must ensure compliance with such legal frameworks.

A 2024 industry report indicates that 60% of organizations using AI-driven decision-making systems will adopt XAI solutions to ensure fairness, transparency, and accountability. This need is particularly acute in finance, where models used for credit scoring must be interpretable to avoid discriminatory practices, and in healthcare, where doctors must understand AI-derived predictions before applying them in diagnoses or treatment plans.

Interviews at top companies now often include XAI-related questions. For instance, candidates applying to Facebook reported being asked to explain how they would handle model transparency when building recommendation systems that rely on sensitive user data. Additionally, candidates are often asked to apply tools like SHAP during technical interviews to show how feature contributions can be visualized and communicated.

3. XAI and ML Interviews: What’s Changing?

The shift towards explainability in AI models has not gone unnoticed by the hiring managers at leading tech firms. In recent years, major companies such as Google, Microsoft, and Uber have integrated XAI-related questions into their machine learning interview processes. While the technical complexity of building models remains crucial, candidates are increasingly tested on their ability to explain model decisions and address fairness and bias issues.

For example, a former candidate interviewing for an ML role at Google mentioned that during the technical portion of their interview, they were asked to demonstrate the LIME tool on a pre-trained model. The interviewer specifically wanted to see how they would explain the impact of individual features to a non-technical audience.

Similarly, Amazon has placed a growing emphasis on ethical AI during its interviews. A candidate reported that their interviewer posed a scenario in which an AI system made biased hiring decisions. The challenge was to identify the bias and suggest ways to mitigate it using techniques such as counterfactual fairness. This reflects a broader trend in which engineers are expected not only to optimize models for accuracy but also to ensure those models are fair, transparent, and accountable.
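A first step toward identifying bias in a scenario like this is a simple "flip test": change only the protected attribute and check whether the decision changes. The sketch below assumes a hypothetical `biased_screen` rule and made-up candidate records. Note that counterfactual fairness in the formal sense also requires a causal model of how the protected attribute influences the other features, so this is a simplified diagnostic and not the full method.

```python
def flip_test(predict, records, attribute, values):
    """Return the records whose prediction changes when only `attribute` is swapped."""
    flagged = []
    for record in records:
        # Predict once per possible value of the protected attribute.
        outcomes = {value: predict({**record, attribute: value}) for value in values}
        if len(set(outcomes.values())) > 1:
            flagged.append((record, outcomes))
    return flagged


# Hypothetical screening rule with a bias deliberately injected for the demo.
def biased_screen(candidate):
    score = candidate["years_experience"] + 2 * candidate["skills_match"]
    if candidate["gender"] == "F":
        score -= 1.5  # the unfair penalty we want the test to expose
    return score >= 6


candidates = [
    {"years_experience": 5, "skills_match": 1, "gender": "F"},
    {"years_experience": 2, "skills_match": 1, "gender": "M"},
    {"years_experience": 6, "skills_match": 2, "gender": "F"},
]

flagged = flip_test(biased_screen, candidates, "gender", ["F", "M"])
for record, outcomes in flagged:
    print("Decision depends on gender:", record, outcomes)
```

Only borderline candidates are flagged, which is typical: the penalty matters most near the decision threshold, and that is a useful point to raise when explaining the finding to an interviewer.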

4. Tools and Techniques for Explainability in AI

XAI is built around a range of tools and techniques that help demystify the black-box nature of many AI models. Some of the most widely used tools in industry—and the ones you’re most likely to encounter in interviews—include:

SHAP (SHapley Additive exPlanations): SHAP values are grounded in cooperative game theory and offer a unified framework for explaining individual predictions by assigning each feature its share of the difference between the prediction and a baseline.

LIME (Local Interpretable Model-Agnostic Explanations): LIME works by perturbing input data and observing how changes in inputs affect the model’s output, providing a local approximation of the black-box model.

Partial Dependence Plots (PDPs): These plots show the relationship between a particular feature and the predicted outcome, helping to visualize the overall effect of that feature on model behavior.

What-If Tool (from Google's PAIR team, integrated with TensorBoard): This allows users to simulate and visualize interactively how changes in input data affect the output of an AI model, and it is often used in fairness testing.
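Of the tools above, a partial dependence curve is the easiest to compute by hand, and doing so once makes the plot easier to explain. The sketch below forces one feature to each value on a grid, averages the model's predictions over the dataset, and returns the resulting curve. `toy_model` and the three data rows are invented for illustration. Libraries such as scikit-learn provide ready-made versions.

```python
import math


def partial_dependence(predict, rows, feature_index, grid):
    """Average prediction over the dataset with one feature forced to each grid value."""
    curve = []
    for value in grid:
        total = 0.0
        for row in rows:
            modified = list(row)              # copy so the original data is untouched
            modified[feature_index] = value   # override only the feature of interest
            total += predict(modified)
        curve.append((value, total / len(rows)))
    return curve


# Hypothetical model: risk rises linearly with age and with the square root of income.
def toy_model(row):
    age, income = row
    return 0.02 * age + 0.5 * math.sqrt(income)


rows = [[25, 4.0], [40, 9.0], [60, 16.0]]  # made-up (age, income) records

curve = partial_dependence(toy_model, rows, feature_index=1, grid=[0.0, 4.0, 16.0])
for value, average_prediction in curve:
    print(f"income={value:5.1f} -> average prediction {average_prediction:.3f}")
```

One caveat worth mentioning in an interview: the averaging treats the chosen feature as if it could vary independently of the others, so the curve can mislead when features are strongly correlated.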

One candidate who interviewed at LinkedIn for a machine learning position was asked to compare SHAP and LIME during a technical interview. They were presented with a model and tasked with applying both tools to explain the feature importance of a complex decision-making process. The focus was on how effectively the candidate could communicate the insights from these tools to business stakeholders.
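Before comparing the two tools, it helps to know what LIME does under the hood: perturb the input, query the model, weight each sample by its closeness to the original point, and fit a simple linear model to those weighted samples. The sketch below follows those steps in plain Python. The `black_box` function, the Gaussian perturbation, and the exponential kernel are assumptions made for this example; the actual `lime` package differs in its sampling and regularization details.

```python
import math
import random


def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def local_surrogate(predict, instance, n_samples=500, scale=0.5, kernel_width=1.0, seed=0):
    """Fit a proximity-weighted linear model around `instance`.

    Returns [intercept, coef_1, ..., coef_d]; the coefficients are the local explanation.
    """
    rng = random.Random(seed)
    size = len(instance) + 1
    xtwx = [[0.0] * size for _ in range(size)]
    xtwy = [0.0] * size
    for _ in range(n_samples):
        # 1. Perturb the instance with Gaussian noise.
        sample = [v + rng.gauss(0.0, scale) for v in instance]
        # 2. Query the black-box model on the perturbed point.
        y = predict(sample)
        # 3. Weight the sample by how close it is to the original instance.
        dist2 = sum((s - v) ** 2 for s, v in zip(sample, instance))
        w = math.exp(-dist2 / kernel_width ** 2)
        # 4. Accumulate the weighted least-squares normal equations.
        row = [1.0] + sample
        for i in range(size):
            xtwy[i] += w * row[i] * y
            for j in range(size):
                xtwx[i][j] += w * row[i] * row[j]
    return solve_linear_system(xtwx, xtwy)


# Hypothetical non-linear model to explain.
def black_box(features):
    return features[0] ** 2 + 3.0 * features[1]


explanation = local_surrogate(black_box, [2.0, 1.0])
print("local coefficients:", [round(c, 2) for c in explanation[1:]])
```

Around the point (2, 1) the first coefficient should land near the local slope of the squared term (about 4) and the second near 3. The contrast with SHAP is that these coefficients are local slopes, valid only near the chosen instance, whereas SHAP values split a single prediction's difference from a baseline among the features.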

5. Why XAI Knowledge is a Competitive Advantage in Interviews

In today’s tech landscape, knowing how to build models is not enough. Hiring managers are increasingly looking for candidates who can address the "why" and "how" behind a model’s predictions. This is where explainability becomes a differentiator in competitive interviews.

A candidate with strong knowledge of XAI can not only deliver accurate models but also communicate their findings effectively to non-technical teams. For example, engineers working on AI-driven financial applications must be able to explain model decisions to both clients and internal auditors to ensure that decisions are unbiased and lawful.

According to a 2023 report by KPMG, 77% of AI leaders said that explainability would be critical for business adoption of AI technologies by 2025. As such, companies are prioritizing candidates who can bridge the gap between AI’s technical capabilities and its ethical use.

During interviews at Apple, for instance, candidates are often asked to design explainability strategies for hypothetical AI applications, such as AI-driven hiring or customer recommendations. One candidate recalled being asked how they would explain the decision-making process of a recommendation algorithm to a skeptical stakeholder who was unfamiliar with AI.

6. Preparing for XAI in ML Interviews

Preparing for XAI-focused interview questions requires a blend of technical expertise and communication skills. Here are actionable steps to take:

Master XAI Tools: Learn how to use open-source explainability tools like SHAP, LIME, and InterpretML. Many companies expect candidates to be proficient in using these tools to explain their models.

Work on Real-World Projects: Practice applying XAI techniques in projects, such as building interpretable machine learning models or auditing a model for fairness.

Focus on Communication: Practice explaining AI decisions to non-technical audiences. Many XAI interview questions revolve around explaining models to business teams or clients.

Study Case Studies: Review real-world examples where XAI has been applied, such as in healthcare diagnosis, credit scoring, or fraud detection, to understand the impact of interpretability.
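For the fairness-audit practice suggested above, a good starting exercise is to compare how often a model gives a positive decision to each group. The sketch below computes per-group selection rates and the gap between them, a metric often called the demographic parity difference. The predictions and group labels are made-up sample data, and this is only one of several fairness metrics you could be asked about.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive predictions (1s) within each group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for prediction, group in zip(predictions, groups):
        counts[group][0] += int(prediction)
        counts[group][1] += 1
    return {group: positives / total for group, (positives, total) in counts.items()}


def demographic_parity_gap(rates):
    """Difference between the highest and lowest group selection rates."""
    return max(rates.values()) - min(rates.values())


# Made-up model outputs (1 = approved) and the group each applicant belongs to.
predictions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(predictions, groups)
gap = demographic_parity_gap(rates)
print("selection rates:", rates)
print("demographic parity gap:", gap)
```

A large gap does not prove discrimination on its own, but it tells you where to look next, for example by examining which features drive the decisions for the disadvantaged group.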

Several candidates have mentioned that resources like IBM’s AI Explainability 360 toolkit or Coursera courses on ethical AI helped them navigate XAI questions during interviews at firms like Netflix and Microsoft.

Conclusion

As the use of AI expands across industries, explainability has become more than a buzzword—it's a critical component of AI and machine learning development. The need for transparency, fairness, and accountability in AI systems is pushing companies to hire engineers who not only build powerful models but also understand how to explain and justify their decisions.

For candidates, knowledge of XAI is a competitive advantage that can set them apart in today’s job market. With the rise of AI regulations and ethical concerns, the future of AI is explainable, and those who master this field will be well-positioned to thrive in machine learning careers.