Section 1 - Why Multi-Agent Systems Are Becoming a Hot Interview Topic in 2025–2026

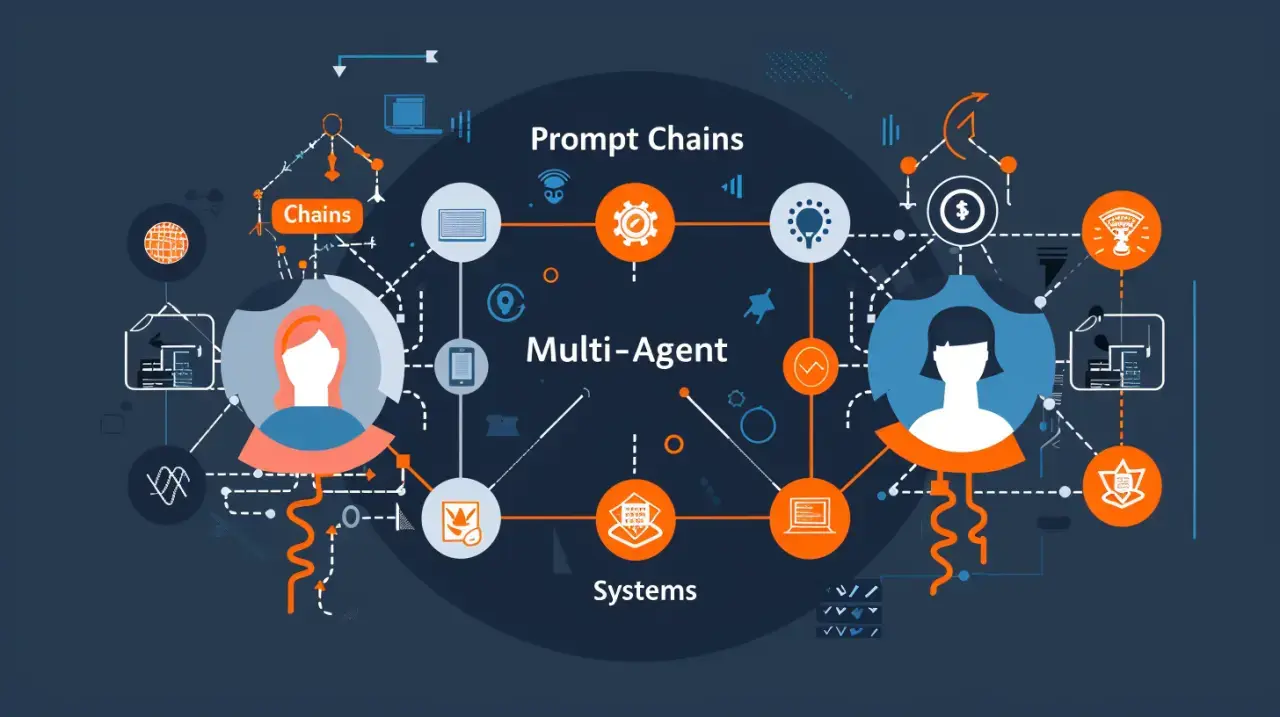

If 2023–2024 was the era of single-model LLM applications, 2025–2026 is the era of multi-agent systems.

Companies are moving beyond “one LLM solves one task” and shifting toward collaborative agent architectures where multiple specialized AI components coordinate to solve complex workflows:

- coding + debugging + testing workflows

- research agents + summarization agents + reasoning agents

- planning + acting + monitoring loops

- multimodal perception + task execution

- specialized “persona agents” that negotiate or critique each other

- supervisor / worker architectures

- tool-using agents and orchestrators

- search-augmented agents with recursive refinement

Because of this shift, multi-agent reasoning has become one of the highest-leverage skills an ML or LLM engineer can demonstrate in interviews.

Interviewers now ask things like:

- “How would you design a multi-agent orchestration for financial report analysis?”

- “What role should a critic agent play in a safety-sensitive system?”

- “How would you evaluate two agents arguing to reach consensus?”

- “Describe a workflow where agents supervise each other.”

- “What are the failure modes of multi-agent setups?”

- “When does orchestrating multiple agents actually help, and when is it unnecessary complexity?”

These are not just technical questions; they are systems-thinking questions.

They test whether you understand how to build, evaluate, and debug behavioral ecosystems, not just individual prompts.

Check out Interview Node’s guide “The Rise of Agentic AI: What It Means for ML Engineers in Hiring”

Interviewers’ interest in multi-agent systems is driven by five major industry shifts. Let’s break them down.

a. LLMs Are Hitting Ceiling Effects - Agents Extend Their Capabilities

Single LLM prompts, even with chain-of-thought, have limits:

- long tasks exhaust context

- complex reasoning becomes inconsistent

- multi-step execution is unreliable

- tool use becomes fragile

- planning breaks under ambiguity

Companies realized agents can compensate for LLM limitations by distributing tasks between specialized components:

- Planner agent → breaks down the task

- Worker agents → execute specific components

- Critic agent → verifies and refines

- Supervisor agent → oversees the pipeline

- Tool agent → executes external functions

- Memory agent → stores state across steps

This shift parallels how human teams operate: specialization increases capability.

Interviewers want to know if you can describe why and when agency beats prompting.

b. Real Products Require Persistence, Coordination, and Long-Horizon Tasks

Many real applications require processes that unfold over time:

- iterative research

- document synthesis

- code generation → testing → debugging → refactoring

- planning trips or logistics

- long investigations (legal, medical, financial)

- multi-turn user interactions

A single prompt struggles to handle:

- dependency tracking

- multi-step consistency

- self-correction

- memory over long horizons

- reliable state transitions

Agents with explicit state and orchestration can handle these more reliably.

This is why interviewers increasingly ask:

“How would you orchestrate agents to solve a long-horizon task like code generation or financial auditing?”

They are testing whether you can design temporal structures, not just static prompts.

c. The Industry Is Moving Toward Modular, Observability-Rich AI Pipelines

Multi-agent systems enable:

- debuggable steps

- isolation of failures

- behavioral analysis

- swap-in/out components

- structured observability

Instead of one giant prompt with hidden failure points, orchestration makes ML systems more modular and transparent.

Interviewers want to hear if you can explain:

- where logs should be collected

- how to monitor agent-to-agent messages

- how to diagnose agent drift

- how to visualize step-level transitions

This signals production-readiness and engineering maturity.

d. Orchestration Mitigates the “LLM Unreliability” Problem Through Redundancy

One of the biggest problems with LLMs is inconsistency.

Multi-agent setups allow:

- redundancy

- cross-verification

- consensus formation

- majority voting

- adversarial critique

- iterative refinement

Think:

- a researcher agent writes,

- a critic agent audits,

- a verifier agent grounds the output,

- a planner agent restructures it.

This can reduce:

- hallucinations

- unsafe outputs

- low-quality reasoning

- brittle single-shot behavior

Interviewers increasingly ask:

“How would you design agents that check each other for hallucinations?”

Because they want engineers who understand reliability engineering.
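To make the redundancy idea concrete, here is a minimal sketch of majority voting, assuming several independent agents (or samples) answer the same question and their answers can be compared after simple normalization. The function name `majority_vote` and the `min_agreement` threshold are illustrative, not from any framework.

```python
from collections import Counter
from typing import Optional


def majority_vote(answers: list[str], min_agreement: float = 0.5) -> Optional[str]:
    """Return the most common answer if its share strictly exceeds min_agreement.

    Returns None when agents disagree too much, which an orchestrator can treat
    as a signal to escalate (e.g. to a critic agent or a human reviewer).
    """
    if not answers:
        raise ValueError("need at least one answer")
    # Normalize trivially different strings so "Paris" and "paris " count as one vote.
    normalized = [a.strip().lower() for a in answers]
    winner, count = Counter(normalized).most_common(1)[0]
    if count / len(normalized) > min_agreement:
        return winner
    return None


print(majority_vote(["Paris", "paris ", "Lyon"]))  # paris
print(majority_vote(["yes", "no"]))  # None: no strict majority
```

Exact-match voting only works for short, canonical answers; free-form outputs usually need a critic agent or a similarity-based comparison instead.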

e. Tool Use + Orchestration Is Becoming Standard in Production

Many LLM applications now rely on:

- search APIs

- vector databases

- SQL agents

- Python execution

- scraping tools

- code interpreters

- graph-based evaluators

- document parsers

Multi-agent systems are a natural way to manage tool-enabled pipelines.

Interviewers test whether you can describe:

- when an agent should call a tool

- how to handle failures

- how to enforce JSON responses

- how to synchronize state

- how to handle race conditions

- how to avoid infinite loops

This is true systems design, not prompting.
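As an illustration of "enforce JSON responses" and "avoid infinite loops", here is a minimal sketch that parses an agent's reply as JSON, checks required keys, and retries a bounded number of times. `call_agent` is a hypothetical callable standing in for whatever LLM client you use, and the retry budget is an assumption.

```python
import json
from typing import Callable


def get_structured_reply(
    call_agent: Callable[[str], str],  # hypothetical stand-in for an LLM call
    prompt: str,
    required_keys: set[str],
    max_attempts: int = 3,
) -> dict:
    """Ask an agent for a JSON object with required keys, retrying a bounded number of times."""
    last_error = ""
    for _ in range(max_attempts):
        # On a retry, feed the validation error back so the agent can correct itself.
        full_prompt = f"{prompt}\nYour previous reply was invalid: {last_error}" if last_error else prompt
        raw = call_agent(full_prompt)
        try:
            reply = json.loads(raw)
        except json.JSONDecodeError as exc:
            last_error = f"not valid JSON ({exc.msg})"
            continue
        if not isinstance(reply, dict):
            last_error = "expected a JSON object"
            continue
        missing = required_keys - set(reply)
        if missing:
            last_error = f"missing keys {sorted(missing)}"
            continue
        return reply
    # Hard stop instead of looping forever; the orchestrator chooses the fallback.
    raise RuntimeError(f"no valid reply after {max_attempts} attempts: {last_error}")


# Fake agent for illustration: replies with prose once, then complies.
replies = iter(["Sure! Here you go.", '{"action": "search", "query": "risk factors"}'])
result = get_structured_reply(lambda prompt: next(replies), "Return JSON.", {"action", "query"})
print(result["action"])  # search
```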

Why Interviewers Care About Multi-Agent Case Studies

When a candidate explains multi-agent systems well, they demonstrate:

1. Systems Thinking (Senior-Level Indicator)

Agents force you to think about coordination, not just modeling.

2. Evaluation Maturity

You must describe how to evaluate each agent and the pipeline as a whole.

3. Product Awareness

Agents exist to improve reliability, UX, and long-horizon usefulness.

4. Engineering Judgment

You must know when agents help and when they are over-engineering.

5. Communication Skills

Orchestration requires clear explanation; messy answers expose weak reasoning.

What Interviewers DON’T Want

They do not want:

- buzzwords

- frameworks without understanding

- “agents talk to each other” without purpose

- overcomplicated diagrams

- ungrounded technical jargon

They want clarity:

- “Here’s the agent.”

- “Here’s how it interacts.”

- “Here’s the failure mode.”

- “Here’s how we evaluated it.”

This clarity is rare and highly valued.

Key Takeaway

Multi-agent systems are no longer niche research concepts.

They are quickly becoming the backbone of modern AI products.

This is why ML interviews now include questions about:

- orchestration patterns

- agent workflows

- reliability strategies

- coordination structures

- failure analysis

- evaluation loops

If you can speak about multi-agent systems with:

- structure,

- clarity,

- precision, and

- systems-level thinking,

…you will stand out from most candidates.

Multi-agent reasoning is not about knowing frameworks.

It's about understanding how intelligence emerges from structured collaboration.

Section 2 - The Mental Models You Need to Explain Multi-Agent Systems Clearly in Interviews

How to think, structure, and articulate multi-agent reasoning so interviewers immediately see senior-level clarity

Most candidates fail multi-agent interview questions not because they lack technical knowledge, but because they lack the mental models to explain these systems clearly.

When an interviewer says:

- “How would you design a multi-agent orchestration for document analysis?”

- “Explain how agents coordinate in your workflow.”

- “What role does a critic or supervisor agent play?”

…candidates often respond by listing components:

“There’s a planner agent, a worker agent, a reviewer agent, and they talk to each other.”

This is description, not understanding.

Interviewers want to hear systems thinking, behavioral reasoning, and architectural clarity, not loose descriptions.

A strong candidate can describe multi-agent systems the same way a Staff engineer would describe distributed systems:

through mental models, not surface-level definitions.

Below are the six mental models that instantly transform your explanations from average to exceptional.

Check out Interview Node’s guide “Machine Learning System Design Interview: Crack the Code with InterviewNode”

a. The Agent = an Autonomous Function with Goals, Inputs, Outputs, and Failure Modes

The biggest shift is moving from thinking of an “agent” as a “chatbot” to seeing it as a unit of behavior with a purpose.

Every agent has:

- Goal: What it is optimizing for

- Inputs: What information it receives

- Outputs: What decisions or artifacts it produces

- Constraints: Time, context, tools, policies

- Failure Modes: How it can break or degrade

Interviewers listen for whether you think in this structure.

Example strong framing:

“Each agent is essentially a specialized policy, a function that transforms input states into improved states.

The planner transforms a vague task into structured subtasks.

The worker transforms subtasks into outputs.

The critic transforms outputs into validated or corrected outputs.”

This framing immediately signals maturity.

b. Multi-Agent Systems = State Transitions, Not Chat Between LLMs

Junior candidates think agents “talk” to each other like humans.

Senior candidates understand that multi-agent systems operate through state transitions.

State 0 → Planning agent → State 1 → Worker agent → State 2 → Critic agent → State 3 → User output

This clarity matters enormously in interviews because it shows you:

- understand deterministic control flow and explicit structure

- recognize each agent as a transformer of state

- can debug transitions, not conversations

- can define orchestration as a sequence of transformations

Interviewers love hearing phrases like:

“Each agent updates the global task state in a controlled way.”

This shows you can build real systems, not just prompt multiple LLMs.
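The "State 0 → State 3" view can be sketched in a few lines, assuming each agent is modeled as a function from one immutable task state to the next. The agent bodies below are placeholders with no LLM calls, and the `TaskState` fields are illustrative assumptions.

```python
from dataclasses import dataclass, replace
from typing import Callable


@dataclass(frozen=True)
class TaskState:
    task: str
    subtasks: tuple[str, ...] = ()
    outputs: tuple[str, ...] = ()
    approved: bool = False


def planner(state: TaskState) -> TaskState:
    # Placeholder decomposition; a real planner would call an LLM.
    return replace(state, subtasks=(f"extract: {state.task}", f"summarize: {state.task}"))


def worker(state: TaskState) -> TaskState:
    return replace(state, outputs=tuple(f"done({s})" for s in state.subtasks))


def critic(state: TaskState) -> TaskState:
    # Approve only if there is at least one subtask and every subtask has an output.
    return replace(state, approved=len(state.outputs) == len(state.subtasks) > 0)


Agent = Callable[[TaskState], TaskState]


def run_pipeline(state: TaskState, agents: list[Agent]) -> list[TaskState]:
    """Apply agents in order and keep every intermediate state for debugging."""
    history = [state]
    for agent in agents:
        state = agent(state)
        history.append(state)
    return history


history = run_pipeline(TaskState(task="Q3 filing"), [planner, worker, critic])
print(len(history), history[-1].approved)  # 4 True
```

Because each state is frozen and kept in `history`, debugging means inspecting which transition went wrong rather than rereading a conversation.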

c. Orchestration = Control Flow, Not Communication Flow

Weak candidates focus on how agents “chat.”

Strong candidates focus on who decides what happens next, i.e., orchestration.

Great orchestration answers describe:

- Control logic → who decides the next step

- Triggers → what conditions cause transitions

- Stopping criteria → how the system knows it’s done

- Fallback mechanisms → what happens when an agent fails

Example strong explanation:

“The orchestrator determines when to call each agent, validates intermediate outputs, and ensures we don’t enter infinite loops. It’s essentially the brain, while agents are the muscles.”

Interviewers immediately recognize this as system design intuition.
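The four elements above (control logic, triggers, stopping criteria, fallbacks) can be made concrete in a small loop. This is a minimal sketch under assumptions: agents are plain callables over a dict state, the routing rule `route` is hypothetical, and `max_steps` is an arbitrary budget.

```python
from typing import Callable, Optional

State = dict
Agent = Callable[[State], State]


def orchestrate(
    state: State,
    agents: dict[str, Agent],
    next_agent: Callable[[State], Optional[str]],
    fallback: Callable[[State, Exception], State],
    max_steps: int = 10,
) -> State:
    """Run agents until the router says stop, or the step budget runs out."""
    for step in range(max_steps):
        name = next_agent(state)  # control logic: who acts next
        if name is None:  # stopping criterion reached
            return {**state, "status": "done", "steps": step}
        try:
            state = agents[name](state)
        except Exception as exc:  # fallback when an agent fails
            return fallback(state, exc)
    # Budget exhausted: terminate explicitly instead of looping forever.
    return {**state, "status": "max_steps_exceeded", "steps": max_steps}


def route(state: State) -> Optional[str]:
    # Triggers (hypothetical): conditions on the state decide the next transition.
    if "plan" not in state:
        return "planner"
    if "draft" not in state:
        return "worker"
    if not state.get("approved"):
        return "critic"
    return None


agents = {
    "planner": lambda s: {**s, "plan": ["step 1", "step 2"]},
    "worker": lambda s: {**s, "draft": "result"},
    "critic": lambda s: {**s, "approved": True},
}
final = orchestrate({}, agents, route, fallback=lambda s, e: {**s, "status": f"failed: {e}"})
print(final["status"], final["steps"])  # done 3
```

If the critic never approves, the loop keeps routing to it until `max_steps` is hit, which is exactly the runaway case the stopping criterion exists to catch.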

d. Multi-Agent Behavior = Division of Cognitive Labor

The real power of multi-agent systems is specialization: not “more agents,” but the right agents.

Interviewers want to hear how you think about specialization:

- planner vs. worker

- reasoner vs. executor

- critic vs. verifier

- memory agent vs. context provider

- actor vs. safety filter

A great analogy:

“Single-agent prompting is a generalist. Multi-agent systems mirror human teams: specialists collaborating to solve a complex task.”

You’ve now demonstrated conceptual depth instead of merely listing components.

e. Multi-Agent Limitations = Emergent Failure Modes

Strong candidates acknowledge that multi-agent systems create new challenges.

Interviewers specifically listen for your awareness of:

Failure Mode 1 - Feedback Loops

Agents reintroducing incorrect information.

Failure Mode 2 - Over-Delegation

An agent assumes another agent will fix the problem.

Failure Mode 3 - Context Poisoning

Incorrect outputs polluting shared state.

Failure Mode 4 - Looping / Deadlocks

Agents repeatedly refining without convergence.

Failure Mode 5 - Error Amplification

A critic introduces new errors while fixing others.

Mentioning these creates instant credibility.

Example strong answer:

“Agents improve capability, but they also create emergent failure modes. For example, a critic agent can amplify errors if it misinterprets the worker output. So evaluation must track not just final outputs but per-agent contributions.”

This level of nuance is exactly what senior-level interviewers want.
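Failure modes 1 and 4 can be partially guarded against mechanically. A minimal sketch, assuming refinement rounds produce text: stop when a draft repeats (a cycle) or when the round budget is exhausted. `refine` is a hypothetical callable standing in for one worker/critic round, and exact-match repetition is a deliberately crude proxy for non-convergence.

```python
import hashlib
from typing import Callable


def refine_until_stable(draft: str, refine: Callable[[str], str], max_rounds: int = 5) -> tuple[str, str]:
    """Return (final_draft, reason), where reason explains why refinement stopped."""
    seen = {hashlib.sha256(draft.encode()).hexdigest()}
    for _ in range(max_rounds):
        new_draft = refine(draft)
        if new_draft == draft:
            return draft, "converged"  # no further changes proposed
        digest = hashlib.sha256(new_draft.encode()).hexdigest()
        if digest in seen:
            return new_draft, "cycle_detected"  # agents are oscillating between versions
        seen.add(digest)
        draft = new_draft
    return draft, "max_rounds"


# Two agents that keep undoing each other's edit: A -> B -> A.
flip = {"version A": "version B", "version B": "version A"}
print(refine_until_stable("version A", lambda d: flip[d]))  # ('version A', 'cycle_detected')
```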

f. Evaluation = Measuring Agent Quality, Pipeline Quality, and Collaboration Quality

Junior candidates only evaluate final outputs.

Senior-level candidates evaluate:

- Agent performance individually

- Pipeline-wide performance

- Interaction quality between agents

Interviewers LOVE when candidates talk about:

- per-agent success rates

- failure propagation analysis

- step-level correctness

- chain-level reasoning quality

- agent-to-agent message integrity

- robustness under different contexts

This shows you understand that multi-agent systems are dynamic ecosystems with evolving behavior.

Example strong framing:

“We evaluate not only the final answer but also stepwise agent correctness, critic accuracy, and orchestration reliability.”

This signals deep maturity.
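Per-agent metrics and failure-propagation analysis can start very simply. A minimal sketch, assuming each pipeline run is logged as an ordered list of `(agent, passed)` step records judged by some rubric or test; this log format is a hypothetical assumption, not a standard schema.

```python
from collections import Counter, defaultdict

# Hypothetical trace format: each run is an ordered list of (agent_name, step_passed).
Run = list[tuple[str, bool]]


def per_agent_success(runs: list[Run]) -> dict[str, float]:
    """Fraction of steps each agent got right across all runs."""
    totals, passes = defaultdict(int), defaultdict(int)
    for run in runs:
        for agent, passed in run:
            totals[agent] += 1
            passes[agent] += int(passed)
    return {agent: passes[agent] / totals[agent] for agent in totals}


def first_failures(runs: list[Run]) -> Counter:
    """Blame each failed run on the first agent whose step failed.

    Later failures in the same run are often propagated errors, so the first
    failure is a better hint about where the problem entered the pipeline.
    """
    counts: Counter = Counter()
    for run in runs:
        for agent, passed in run:
            if not passed:
                counts[agent] += 1
                break
    return counts


runs = [
    [("planner", True), ("worker", True), ("critic", True)],
    [("planner", True), ("worker", False), ("critic", False)],
    [("planner", False), ("worker", False), ("critic", True)],
]
print(per_agent_success(runs))  # planner and critic 2/3, worker 1/3
print(first_failures(runs))  # one run blamed on worker, one on planner
```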

How These Mental Models Change Your Interview Answers

Without these mental models, your explanations sound like:

“Agents help each other solve tasks. One agent plans, one executes, one checks.”

With these mental models, your answers sound like:

“An agent is a goal-driven policy with defined inputs, outputs, and failure modes.

Multi-agent systems operate through controlled state transitions.

Orchestration defines control flow, not communication.

Specialization divides cognitive labor for long-horizon reliability.

We must evaluate individual agent behavior and multi-step pipeline behavior to avoid propagation failures.”

One answer sounds junior.

The other sounds like a Staff ML/LLM engineer.

Key Takeaway

Interviewers aren’t testing whether you know agent frameworks.

They’re testing whether you can think in terms of:

- goals

- state transitions

- orchestration patterns

- failure analysis

- behavioral evaluation

- systems-level reasoning

If you master these six mental models, you will be able to talk about multi-agent systems in a way that is:

- structured

- confident

- product-aware

- technically rigorous

- and instantly recognizable as senior-level thinking

Multi-agent systems are not about hype.

They are about orchestrating intelligence.

Section 3 - The 7 Multi-Agent Architectures Every Candidate Should Know (and How to Explain Them in Interviews)

Clear, senior-level ways to describe the most common agent orchestration patterns interviewers expect in 2025–2026

Most ML candidates walk into interviews with a vague idea of “multi-agent systems,” imagining something like:

“Different LLMs talk to each other to solve tasks.”

That’s not an architecture.

That’s a vibe.

Senior interviewers want you to speak about agentic systems with the clarity of someone who understands workflow design, reliability engineering, LLM limitations, and system-level coordination.

This section covers the seven architectures that have become standard patterns in modern AI products, along with the exact language you should use to explain them in ML interviews.

Check out Interview Node’s guide “Scalable ML Systems for Senior Engineers – InterviewNode”

Let’s dive in.

a. Planner → Worker Architecture

The most fundamental multi-agent pattern, widely used in production systems

This pattern is the backbone of most agent systems in 2025–2026.

Planner Agent

- Breaks down a complex task into structured steps

- Acts like a project manager

- Defines scope, requirements, goals

Worker Agent(s)

- Execute the subtasks independently

- Produce artifacts (code, summaries, plans, answers)

- Can operate sequentially or in parallel

Why interviewers love it

It indicates you understand task decomposition, one of the capabilities LLMs most often struggle with.

How to explain it in interviews

“We use a planner agent to handle task decomposition and one or more worker agents to execute subtasks.

The orchestrator manages transitions between planning and execution, ensuring step-level consistency and preventing drift.”

Best use cases

- code generation

- document processing

- research workflows

- long-horizon reasoning
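A minimal sketch of planner → worker with parallel execution, assuming the subtasks are independent. `plan` and `execute` are hypothetical stand-ins for LLM-backed agents; a thread pool is used here because LLM calls are typically I/O-bound.

```python
from concurrent.futures import ThreadPoolExecutor


def plan(task: str) -> list[str]:
    # Hypothetical planner: a real one would ask an LLM to decompose the task.
    return [f"{task}: section {i}" for i in range(1, 4)]


def execute(subtask: str) -> str:
    # Hypothetical worker: a real one would produce code, a summary, etc.
    return f"summary of {subtask}"


def planner_worker(task: str, max_workers: int = 4) -> dict[str, str]:
    subtasks = plan(task)
    if not subtasks:
        raise ValueError("planner produced no subtasks")
    # Workers run in parallel; Executor.map returns results in the planner's order.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(execute, subtasks))
    return dict(zip(subtasks, results))


for subtask, result in planner_worker("annual report").items():
    print(subtask, "->", result)
```

If subtasks depend on each other, the orchestrator would run them in dependency order rather than all at once.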

b. Worker → Critic Architecture (Producer–Verifier Loop)

Reliability-focused pattern used in safety-sensitive applications

This is a two-agent feedback loop:

Worker Agent

- Produces the initial output

Critic Agent

- Evaluates correctness, safety, coherence, grounding

- Suggests improvements

- Flags hallucinations or unsafe content

Why interviewers love it

It demonstrates knowledge of reliability, guardrails, and evaluation loops.

How to explain it

“The worker creates an output, and the critic evaluates it using rubric-based criteria.

The critic can either refine the output directly or send structured feedback for the worker to iterate.”

Best use cases

- hallucination reduction

- legal or financial summaries

- safety-sensitive LLM tasks

- fact-checking tools
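A minimal sketch of the producer-verifier loop, assuming the critic returns structured feedback (a pass flag plus a list of issues) and the worker receives that feedback on its next attempt. Both agents are hypothetical stubs, and `max_rounds` bounds the loop so a critic that never approves cannot stall the pipeline.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Feedback:
    passed: bool
    issues: list[str] = field(default_factory=list)


def produce_and_verify(
    worker: Callable[[Optional[Feedback]], str],
    critic: Callable[[str], Feedback],
    max_rounds: int = 3,
) -> tuple[str, bool]:
    """Return (output, verified); verified is False if the round budget ran out."""
    feedback: Optional[Feedback] = None
    output = ""
    for _ in range(max_rounds):
        output = worker(feedback)
        feedback = critic(output)
        if feedback.passed:
            return output, True
    return output, False


# Stub agents: the critic rejects drafts that lack a source citation.
def stub_worker(fb: Optional[Feedback]) -> str:
    draft = "Revenue increased year over year."
    return draft + " [source: filing, p. 4]" if fb and fb.issues else draft


def stub_critic(text: str) -> Feedback:
    ok = "[source:" in text
    return Feedback(passed=ok, issues=[] if ok else ["claim lacks a citation"])


print(produce_and_verify(stub_worker, stub_critic))
```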

c. Supervisor → Sub-Agents Architecture

Used for complex orchestrations where agents must follow rules

This pattern introduces hierarchical control:

Supervisor Agent

- Enforces global policies

- Monitors agent messages

- Ensures workflow correctness

- Prevents infinite loops

- Enforces safety and compliance checks

Sub-Agents

- Specialized workers

- Execute tasks within constraints

Why interviewers love it

It reveals your ability to describe hierarchical control, the same kind of structure used in distributed computing.

How to explain it

“The supervisor enforces step validity, safety constraints, and termination conditions while delegating specialized tasks to sub-agents.”

Best use cases

- enterprise assistants

- compliance-heavy domains

- medical and finance AI

- multi-tool workflows
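A minimal sketch of hierarchical control, assuming the supervisor sees each proposed sub-agent action before it runs. The policy shown (which action kinds each sub-agent may take, plus a global step limit) and the `Supervisor` and `Action` names are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass


@dataclass
class Action:
    agent: str
    kind: str  # e.g. "read", "summarize", "send_email"
    payload: str


class Supervisor:
    """Checks every sub-agent action against global policy before it executes."""

    def __init__(self, permissions: dict[str, set[str]], max_steps: int):
        self.permissions = permissions
        self.max_steps = max_steps
        self.steps = 0
        self.violations: list[str] = []

    def approve(self, action: Action) -> bool:
        if self.steps >= self.max_steps:  # termination condition
            self.violations.append(f"step limit reached before {action.agent}:{action.kind}")
            return False
        if action.kind not in self.permissions.get(action.agent, set()):  # policy check
            self.violations.append(f"{action.agent} may not {action.kind}")
            return False
        self.steps += 1
        return True


sup = Supervisor({"reader": {"read"}, "writer": {"summarize"}}, max_steps=2)
proposed = [
    Action("reader", "read", "policy.pdf"),
    Action("writer", "send_email", "draft to client"),
    Action("writer", "summarize", "policy.pdf"),
    Action("reader", "read", "appendix.pdf"),
]
print([sup.approve(a) for a in proposed])  # [True, False, True, False]
print(sup.violations)
```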

d. Debate / Adversarial Agents (Agent-vs.-Agent Reasoning)

Two or more agents argue to reach a better answer

This pattern mirrors human debate:

Agent A

- Makes an argument or solution

Agent B

- Challenges weaknesses

- Provides counterarguments

- Forces refinement

Optionally:

Arbiter Agent

- Evaluates which argument is stronger

Why interviewers love it

It demonstrates your understanding of reasoning enhancement and self-correction mechanisms.

How to explain it

“Two agents generate competing solutions, critique each other’s reasoning, and converge through adversarial refinement.

This reduces hallucinations but must be monitored, as debates can amplify incorrect logic.”

Best use cases

- multi-step reasoning

- hypothesis testing

- safety discussions

- creative ideation
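A minimal sketch of a debate with an arbiter, assuming each debater is a callable that sees the opponent's latest argument and the arbiter returns which side it prefers. All three roles are hypothetical stubs here; in practice each would be an LLM call, and the fixed round count is an assumption.

```python
from typing import Callable

Debater = Callable[[str, str], str]  # (question, opponent's last argument) -> argument
Arbiter = Callable[[str, str, str], str]  # (question, argument A, argument B) -> "A" or "B"


def debate(question: str, a: Debater, b: Debater, arbiter: Arbiter, rounds: int = 2):
    """Run a fixed number of rounds, then let the arbiter pick the stronger argument."""
    if rounds < 1:
        raise ValueError("need at least one round")
    arg_a, arg_b = a(question, ""), b(question, "")  # opening arguments
    transcript = [(arg_a, arg_b)]
    for _ in range(rounds - 1):
        # Both sides respond to the opponent's previous argument.
        arg_a, arg_b = a(question, arg_b), b(question, arg_a)
        transcript.append((arg_a, arg_b))
    verdict = arbiter(question, arg_a, arg_b)
    if verdict not in ("A", "B"):
        raise ValueError(f"arbiter returned {verdict!r}")
    return (arg_a if verdict == "A" else arg_b), transcript


# Stub agents: B opens with a wrong answer and concedes once shown a check.
def agent_a(question: str, opponent: str) -> str:
    return "17 (checked: 8 + 9 = 17)"


def agent_b(question: str, opponent: str) -> str:
    return "17 (conceded after seeing the check)" if "checked" in opponent else "18"


def arbiter(question: str, arg_a: str, arg_b: str) -> str:
    return "A" if "checked" in arg_a else "B"  # stub rule: prefer verified reasoning


winner, transcript = debate("What is 8 + 9?", agent_a, agent_b, arbiter)
print(winner)  # 17 (checked: 8 + 9 = 17)
```

Keeping the transcript lets an evaluator check whether the debate actually converged on a verified answer or merely amplified a shared mistake.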

e. RAG-Enhanced Multi-Agent Architecture

Agents + retrieval + tools = the backbone of modern production AI

This architecture combines:

- retrieval agent

- ranking agent

- summarization agent

- grounding agent

- output-generation agent

Why interviewers love it

It shows you understand how agent systems integrate with data pipelines, not just LLMs.

How to explain it

“A retrieval agent fetches context, a ranking agent selects the most relevant chunks, and a generator agent produces the answer grounded in that evidence.

A critic agent may verify factual accuracy.”

Best use cases

- enterprise knowledge assistants

- legal document systems

- research automation

- customer service bots
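A toy sketch of the retrieve → rank → generate → verify chain, assuming an in-memory list of chunks and simple word-overlap scoring in place of a real vector database and embedding model. The "generator" just returns the top chunk and the "critic" checks that every answer word appears in the retrieved evidence; both are deliberately simplistic stand-ins for LLM agents.

```python
import re


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, corpus: list[str], k: int = 3) -> list[str]:
    # Retrieval agent stand-in: keep chunks sharing at least one query term.
    q = tokens(query)
    return [c for c in corpus if q & tokens(c)][:k]


def rank(query: str, chunks: list[str]) -> list[str]:
    # Ranking agent stand-in: order by word overlap with the query.
    q = tokens(query)
    return sorted(chunks, key=lambda c: len(q & tokens(c)), reverse=True)


def generate(query: str, evidence: list[str]) -> str:
    # Generator stand-in: answer only from the top-ranked evidence.
    return evidence[0] if evidence else "I could not find supporting evidence."


def is_grounded(answer: str, evidence: list[str]) -> bool:
    # Critic stand-in: every answer token must appear in some evidence chunk.
    support = set().union(*(tokens(e) for e in evidence)) if evidence else set()
    return tokens(answer) <= support


corpus = [
    "The refund window is 30 days from delivery.",
    "Shipping is free on orders over 50 dollars.",
    "Refunds are issued to the original payment method.",
]
query = "How many days is the refund window?"
evidence = rank(query, retrieve(query, corpus))
answer = generate(query, evidence)
print(answer, is_grounded(answer, evidence))
```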

f. Tool-Using Agents (Function-Calling Orchestrations)

Agents that know when to call APIs, tools, databases, or code interpreters

This architecture involves:

- an agent deciding whether to use a tool

- validating tool input/output

- updating the global state

- preventing misuse or infinite loops

Why interviewers love it

It reveals engineering maturity: tool integration is production work.

How to explain it

“The agent decides whether to use a function, generates structured arguments, and validates tool responses before updating the state.

The orchestrator ensures safety and prevents runaway tool calls.”

Best use cases

- SQL agents

- coding agents

- browser automation

- multimodal pipelines
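A minimal sketch of function calling with validation, assuming the model emits a tool call as a JSON object with `name` and `arguments` fields (a common convention, but the exact schema depends on your provider). The `ToolRunner` class, the type-based argument check, and the per-task call budget are illustrative assumptions.

```python
import json
from typing import Any, Callable


class ToolRunner:
    """Validates model-proposed tool calls and caps how many can run per task."""

    def __init__(self, tools: dict[str, tuple[Callable[..., Any], dict[str, type]]], max_calls: int = 5):
        self.tools = tools  # name -> (function, expected argument types)
        self.max_calls = max_calls
        self.calls = 0

    def run(self, raw_call: str) -> dict:
        if self.calls >= self.max_calls:
            return {"ok": False, "error": "tool budget exhausted"}  # prevents runaway loops
        try:
            call = json.loads(raw_call)
            func, schema = self.tools[call["name"]]
            args = call["arguments"]
        except (json.JSONDecodeError, KeyError, TypeError) as exc:
            return {"ok": False, "error": f"malformed call: {exc!r}"}
        # Validate arguments before touching any external system.
        if not isinstance(args, dict) or set(args) != set(schema):
            return {"ok": False, "error": "unexpected argument names"}
        for key, expected in schema.items():
            if not isinstance(args[key], expected):
                return {"ok": False, "error": f"{key} must be {expected.__name__}"}
        self.calls += 1
        try:
            result = func(**args)
        except Exception as exc:  # tool failures become structured errors, not crashes
            return {"ok": False, "error": f"tool failed: {exc!r}"}
        return {"ok": True, "result": result}


def add(a: int, b: int) -> int:
    return a + b


runner = ToolRunner({"add": (add, {"a": int, "b": int})}, max_calls=1)
print(runner.run('{"name": "add", "arguments": {"a": 2, "b": 3}}'))  # {'ok': True, 'result': 5}
print(runner.run('{"name": "add", "arguments": {"a": 2, "b": 3}}'))  # {'ok': False, 'error': 'tool budget exhausted'}
```

Only a validated `{"ok": True, ...}` result should be written into the global state; errors go back to the orchestrator, which decides whether to retry or fall back.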

g. Memory-Based Multi-Agent Architecture (Persistent Agents)

Agents that maintain short-term and long-term memory states

Memory agents enable:

- consistent persona adherence

- long-horizon task tracking

- conversation continuity

- knowledge accumulation

- avoidance of repeated mistakes

Why interviewers love it

It shows you understand persistent state, a key challenge in agent systems.

How to explain it

“A memory agent stores structured task state, intermediate outputs, decisions, and references.

All agents read and write to this memory, enabling persistent workflows that span multiple turns.”

Best use cases

- personal assistants

- multi-session agents

- long-document synthesis

- codebase refactoring agents
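A minimal sketch of a shared memory store, assuming agents read and write key/value entries and every write is recorded with the writing agent and a step number so the history can be audited later. `SharedMemory` and its methods are hypothetical; a production system would typically back this with a database rather than a Python dict.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass(frozen=True)
class Entry:
    value: Any
    author: str
    step: int


class SharedMemory:
    def __init__(self) -> None:
        self._entries: dict[str, list[Entry]] = {}
        self._step = 0

    def write(self, key: str, value: Any, author: str) -> None:
        # Append rather than overwrite so earlier decisions remain inspectable.
        self._step += 1
        self._entries.setdefault(key, []).append(Entry(value, author, self._step))

    def read(self, key: str) -> Optional[Any]:
        history = self._entries.get(key)
        return history[-1].value if history else None

    def history(self, key: str) -> list[Entry]:
        return list(self._entries.get(key, []))


memory = SharedMemory()
memory.write("plan", ["extract risks", "summarize"], author="planner")
memory.write("plan", ["extract risks", "check figures", "summarize"], author="critic")
print(memory.read("plan"))
print([(e.author, e.step) for e in memory.history("plan")])  # [('planner', 1), ('critic', 2)]
```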

How to Decide Which Architecture to Describe in Interviews

Interviewers evaluate:

- clarity

- simplicity

- correctness

- reasoning

- tradeoff awareness

Choose architectures that:

- match the problem

- minimize unnecessary complexity

- maximize reliability

- showcase your systems-thinking

Example strong framing:

“Given this task, the planner → worker → critic pattern is ideal because it enables decomposition, execution, and verification while keeping the system modular and debuggable.”

You don’t just describe the architecture.

You justify it.

Key Takeaway

Knowing multi-agent systems is useful.

Being able to explain them clearly is what gets you hired.

Use these seven architectures to demonstrate:

- deep understanding,

- strong communication,

- system design maturity,

- and practical reasoning.

Interviewers aren’t impressed by buzzwords.

They are impressed by structured, thoughtful explanations grounded in real-world reliability.

Section 4 - How to Present Multi-Agent Projects in Interviews (The ORBIT Storytelling Framework)

A structural tool to explain orchestrations with clarity, confidence, and senior-level reasoning

Multi-agent systems are powerful, but dangerously easy to explain poorly.

Many candidates fail interviews simply because their explanations sound like:

- “We had a bunch of agents working together.”

- “The agents talked to each other.”

- “One agent planned, one did the work.”

- “We used a loop until we got a good answer.”

From an interviewer’s perspective, this signals:

- weak technical depth,

- unclear understanding of orchestration,

- lack of system-level reasoning,

- and an inability to communicate complexity cleanly.

To stand out, you need a storytelling framework that transforms multi-agent chaos into clarity.

A structure that makes your design choices legible.

A narrative arc that demonstrates ownership, judgment, and maturity.

For this, I built the ORBIT Framework, optimized specifically for ML/LLM interviews.

The ORBIT Framework

A 5-step method for explaining ANY multi-agent project:

- O - Objective

- R - Roles & Responsibilities

- B - Behavior Flow (Control & State)

- I - Issues, Failures & Improvements

- T - Testing & Evaluation

Let’s break each piece down with examples, tips, and interview-ready phrasing.

Check out Interview Node’s guide “The AI Hiring Loop: How Companies Evaluate You Across Multiple Rounds”

O - Objective: Start with the Problem the Agents Were Built to Solve

Interviewers care more about the why than the architecture.

Start with:

- the user problem

- the product goal

- the constraint

- the required behavior

- the stakes

- the failure mode that needed solving

Weak candidates start with architecture.

Strong candidates start with purpose.

Example Strong Opening

“We built a multi-agent system to analyze 200-page financial filings and generate accurate risk summaries.

The main challenge was long-horizon reasoning and factual grounding: a single LLM couldn’t maintain consistency across such a long document.”

This sets the stage perfectly.

Avoid:

- “We used agents because agents are cool.”

Use:

- “Here’s the real-world reason agents were necessary.”

R - Roles & Responsibilities: Define Each Agent Like a Team Member

This is where most candidates fumble.

They describe agents generically:

“We had a planner agent, a worker agent, and a critic.”

Interviewers want to hear:

- what each agent optimizes for

- what inputs it takes

- what outputs it produces

- what constraints shape its behavior

- what failure modes it introduces

Describe each agent as if you were describing a team member.

Example Strong Framing

“The planner agent decomposed the filing into sections and created extraction tasks.

Worker agents handled extraction for each section.

A critic agent verified grounding and consistency.

A supervisor agent ensured no step drifted beyond our safety constraints.”

This is precise, structured, and demonstrates deep understanding.

B - Behavior Flow: Explain Control Flow and State Transitions

This is the MOST important section.

Multi-agent systems fail when:

- agents loop endlessly

- agents override each other

- state becomes polluted

- the pipeline loses coherence

Interviewers want to see whether you understand:

- control flow (who decides what happens next)

- state flow (how information moves between agents)

- termination logic

- safeguards

- fallbacks

- dependency order

Most candidates skip this, which is why average answers fail.

Example Strong Explanation

“The orchestrator triggered the planner first.

The planner wrote tasks into a shared memory store.

Worker agents picked up tasks asynchronously, updated state with extracted facts, and the critic reviewed each contribution.

The supervisor enforced constraints like maximum depth, prevented infinite loops, and terminated the process when all tasks were validated.”

This is the language of Staff-level engineering.
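The flow in that explanation can be sketched with `asyncio`, assuming the planner's tasks sit in a queue, workers consume them concurrently, a critic checks each result before it is written to shared state, and a per-task attempt limit stands in for the supervisor's "maximum depth". All agent functions are hypothetical stubs; real ones would await LLM calls.

```python
import asyncio


async def worker_agent(task: str) -> str:
    await asyncio.sleep(0)  # placeholder for an LLM call
    return f"facts from {task}"


def critic_agent(result: str) -> bool:
    return result.startswith("facts from")  # stub validation rule


async def run(tasks: list[str], n_workers: int = 3, max_attempts: int = 2) -> dict[str, str]:
    queue: asyncio.Queue = asyncio.Queue()
    for t in tasks:  # planner output written into the queue as (task, attempt)
        queue.put_nowait((t, 1))
    validated: dict[str, str] = {}
    failed: list[str] = []

    async def worker() -> None:
        while True:
            task, attempt = await queue.get()
            try:
                result = await worker_agent(task)
                if critic_agent(result):
                    validated[task] = result  # only critic-approved results enter state
                elif attempt < max_attempts:
                    queue.put_nowait((task, attempt + 1))  # bounded retry
                else:
                    failed.append(task)  # supervisor limit reached
            finally:
                queue.task_done()

    workers = [asyncio.create_task(worker()) for _ in range(n_workers)]
    await queue.join()  # terminate once every task is validated or given up on
    for w in workers:
        w.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    if failed:
        raise RuntimeError(f"unvalidated tasks: {failed}")
    return validated


print(asyncio.run(run(["section 1", "section 2", "section 3"])))
```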

I - Issues, Failures & Improvements: Show You Can Debug Agent Behavior

The strongest part of ANY multi-agent case study is the failure analysis.

Interviewers want to hear:

- what went wrong

- why it went wrong

- what emergent behaviors you discovered

- how you debugged them

- what changes you made

- what you learned

Weak candidates hide failures.

Strong candidates highlight them, because that’s where engineering happens.

Example Strong Failure Narrative

“Initially, the worker agent polluted shared memory by adding unverified claims.

This caused the planner to make poor decisions downstream.

We fixed this by implementing a validation checkpoint: only critic-approved outputs could enter long-term memory.”

This shows:

- real engineering

- deep reasoning

- awareness of failure propagation

- understanding of robustness

It instantly elevates your credibility.
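The validation checkpoint in that narrative can be sketched as follows, assuming workers write to a staging area and only claims the critic approves are promoted to long-term memory that the planner reads. The `GatedMemory` class, the `approve` callable, and the staging design are hypothetical illustrations of the idea.

```python
from typing import Callable


class GatedMemory:
    """Long-term memory that accepts writes only through a critic checkpoint."""

    def __init__(self, approve: Callable[[str], bool]):
        self.approve = approve  # hypothetical critic check
        self.staging: list[str] = []
        self.long_term: list[str] = []
        self.rejected: list[str] = []

    def propose(self, claim: str) -> None:
        self.staging.append(claim)  # workers can only write here

    def commit(self) -> None:
        # Checkpoint: promote approved claims, quarantine the rest for review.
        for claim in self.staging:
            (self.long_term if self.approve(claim) else self.rejected).append(claim)
        self.staging.clear()


# Stub critic: accept only claims that carry a source reference.
mem = GatedMemory(approve=lambda claim: "[src:" in claim)
mem.propose("Revenue is recognized on delivery [src: note 2]")
mem.propose("Management is hiding losses")
mem.commit()
print(mem.long_term)
print(mem.rejected)
```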

T - Testing & Evaluation: The Senior-Level Differentiator

Most candidates end with:

“And it worked well.”

Not acceptable.

Evaluation is EVERYTHING in agent systems because:

- outputs can vary

- agent interactions are stochastic

- small errors can amplify

- long-horizon tasks accumulate mistakes

Interviewers expect you to talk about:

Evaluation Dimensions

- step-level evaluation

- agent-level evaluation

- final-output evaluation

- robustness testing

- hallucination detection

- adversarial workflows

- long-context behavior

- performance vs. cost tradeoffs

Example Strong Conclusion

“We ran 200 multi-document tasks and measured worker accuracy, critic reliability, supervisor interventions, hallucination rates, and state consistency.

After improvements, hallucinations dropped 40%, and task completion time decreased by 30%.”

This communicates rigorous engineering, not anecdotal success.
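A claim like "hallucinations dropped 40%" is ambiguous unless you say whether the change is relative or absolute. A small helper, assuming you have before and after rates from the same evaluation set; the rates in the usage lines are made-up inputs for illustration, not results.

```python
def relative_reduction(before: float, after: float) -> float:
    """Fractional drop relative to the baseline, e.g. 0.4 means 40% fewer."""
    if before <= 0:
        raise ValueError("baseline must be positive")
    return (before - after) / before


# Hypothetical rates: 10% of outputs hallucinated before, 6% after.
before, after = 0.10, 0.06
print(f"relative drop: {relative_reduction(before, after):.0%}")  # relative drop: 40%
print(f"absolute drop: {(before - after) * 100:.0f} percentage points")  # absolute drop: 4 percentage points
```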

Putting ORBIT Together - A Full Example

Here’s what ORBIT sounds like when assembled:

“Our objective was to summarize 200-page legal documents reliably.

We built four agents: a planner for decomposition, workers for extraction, a critic for verification, and a supervisor for safety.

The orchestrator managed control flow, memory states, and step sequencing.

We discovered early failures: the critic agent amplified mistakes, and the memory store became polluted. We fixed this with validation checkpoints and stricter state gating.

Evaluation included per-agent metrics, cross-agent consistency scoring, and a final hallucination audit. After refinements, we achieved 35% fewer inconsistencies and 50% faster completion.”

That sounds senior.

Not because of complexity, but because of structure.

Key Takeaway

ORBIT transforms messy agent stories into compelling, crystal-clear interview narratives.

It helps you communicate:

- purpose (O)

- structure (R)

- architecture (B)

- rigor (I)

- evaluation (T)

This is exactly what senior ML interviewers listen for.

Because real-world agent systems aren’t impressive because they’re “agentic.”

They’re impressive because they are:

- orchestrated

- observable

- debuggable

- reliable

- evaluated

- purposeful

ORBIT makes that obvious.

Conclusion - Turning Multi-Agent Understanding Into a Competitive Edge in ML Interviews

Multi-agent systems are no longer a research novelty; they are fast becoming the architecture behind the most advanced AI products of 2025–2026. Companies like OpenAI, Anthropic, Meta, Google DeepMind, and leading AI-first startups are shifting from single-LLM pipelines to coordinated agent ecosystems that can reason longer, execute more reliably, use tools intelligently, and adapt to complex user needs.

This evolution has transformed what interviewers look for.

They no longer care whether you memorized agent frameworks or can recite orchestration buzzwords.

They want to see whether you can:

- reason about division of cognitive labor,

- explain agents as goal-driven policies,

- describe state transitions and control flow,

- anticipate emergent failure modes,

- and evaluate agent performance with rigor.

This is why the candidates who shine in interviews do so not by being flashy, but by being clear.

Because clarity demonstrates competence.

If you use the mental models from Section 2 and the ORBIT Storytelling Framework from Section 4, you will be able to talk about multi-agent systems in a way that conveys:

- systems-level thinking,

- engineering maturity,

- reliability awareness,

- and the ability to solve long-horizon, multi-step problems with structure and intention.

Interviewers walk away thinking:

“This person can design and debug complex AI workflows.

We want them on our team.”

In a world where single-prompt approaches are hitting their limits and orchestration is becoming the new frontier, your ability to articulate multi-agent reasoning is a real hiring differentiator.

Because companies aren’t just hiring people who can prompt models.

They’re hiring people who can coordinate intelligence.

And that can be you.

FAQs - Multi-Agent Systems & Orchestrations in ML Interviews

1. Do I need real multi-agent experience to answer these interview questions well?

Not necessarily.

Even small personal projects, if described using ORBIT, can demonstrate strong systems-thinking and reasoning maturity.

2. Are multi-agent systems required for every ML or LLM role?

No.

But the companies working on the hardest problems (OpenAI, Anthropic, AI agent startups) increasingly use them, making the skill highly desirable.

3. Will interviewers expect me to know agent frameworks like LangChain or AutoGen?

They care more about conceptual understanding than frameworks.

It’s fine to mention tools, but you should emphasize principles: orchestration, state management, failure modes, evaluation.

4. How do I avoid overcomplicating my answer when describing multi-agent systems?

Use ORBIT.

Start with the objective, define agent roles, describe the control flow, walk through failure analysis, and finish with evaluation.

Simple, structured, powerful.

5. What’s the most impressive thing I can talk about in a multi-agent case study?

A failure you discovered, and how you fixed it.

Debugging is more compelling to interviewers than success.

6. Should I mention emergent failure modes in my answers?

Yes.

Interviewers love when candidates mention drift, looping, state pollution, or error amplification.

It shows real-world awareness.

7. How deep should I go into evaluation metrics?

Deep enough to show rigor (step-level correctness, agent accuracy, grounding, consistency),

but not so deep that you lose the narrative.

Think: structured, not scattered.

8. What if I built a simple project? Can it still impress interviewers?

Absolutely.

A small project explained clearly beats a large project explained chaotically.

Clarity = seniority.

9. How do I practice multi-agent explanation for interviews?

Take one project and rehearse it using ORBIT.

Then explain it out loud until the flow feels natural.

Interviewers care about communication, not just engineering.

10. What’s the strongest sentence I can say in an interview to signal multi-agent expertise?

“Let me walk you through the control flow and state transitions; that’s where most agent systems succeed or fail.”

Saying this instantly signals Staff-level systems thinking.