SECTION 1: Why Real-Time Debugging Interviews Exist (and Why They’re So Effective)

Real-time debugging interviews are one of the most misunderstood, and most feared, interview formats in modern software hiring. Candidates often describe them as “unfair,” “random,” or “stress tests.” Interviewers, on the other hand, increasingly view them as one of the cleanest signals of real engineering ability.

This disconnect exists because candidates and interviewers are optimizing for different things.

Candidates prepare to produce solutions.

Interviewers want to observe how engineers think when systems break.

The Problem with Traditional Coding Interviews

Traditional algorithmic interviews reward:

- Pattern recognition

- Speed under pressure

- Memorized techniques

But production engineering rarely looks like this.

In real jobs:

- Code already exists

- Bugs are ambiguous

- Reproduction is incomplete

- Failures are emergent, not isolated

At companies like Google and Meta, many interviewers regard debugging ability as a better predictor of on-the-job impact than algorithmic brilliance. Engineers who debug well:

- Resolve incidents faster

- Prevent repeat failures

- Make safer changes

- Mentor others more effectively

Real-time debugging interviews were introduced to surface exactly these traits.

What Interviewers Are Actually Testing

Despite the name, debugging interviews are not about:

- Finding the bug fastest

- Knowing obscure language quirks

- Impressing with tooling knowledge

They are about diagnostic reasoning.

Interviewers observe:

- How you narrow the problem space

- Whether you form and test hypotheses

- How you handle incomplete information

- How you communicate uncertainty

A candidate who never finds the root cause can still pass if their reasoning is sound.

Why Debugging Is a High-Signal Interview Format

Debugging interviews compress weeks of real engineering behavior into 45–60 minutes.

They expose:

- How you react when something doesn’t work

- Whether you jump to conclusions

- Whether you can stay structured under pressure

- How you collaborate when stuck

This is why many companies now include debugging rounds for mid-level and senior engineers, even when they already have strong coding rounds.

This evaluation philosophy overlaps strongly with what’s described in The Hidden Metrics: How Interviewers Evaluate ML Thinking, Not Just Code, which explains why interviewers prioritize reasoning quality over surface-level correctness.

Why Candidates Feel These Interviews Are “Unfair”

Candidates often struggle because debugging interviews violate common prep instincts:

- There is no clean problem statement

- The interviewer doesn’t guide you

- Silence feels dangerous

- Progress feels invisible

From the candidate’s perspective:

“I don’t know what they want.”

From the interviewer’s perspective:

“I want to see how you operate without instructions.”

This mismatch creates anxiety, but also signal.

Debugging Interviews Reflect Real Production Constraints

In real systems:

- Logs are noisy

- Metrics conflict

- Repro steps are unreliable

- The root cause is rarely where the error appears

Debugging interviews intentionally simulate this messiness.

According to engineering productivity research published by the USENIX Association, effective debugging is less about tools and more about hypothesis-driven investigation and feedback loops. This insight heavily influences how modern debugging interviews are designed.

What This Means for Your Preparation

If you approach debugging interviews like coding puzzles, you will underperform.

Success requires:

- Structured thinking

- Calm narration

- Comfort with uncertainty

- Willingness to revise assumptions

In the next section, we’ll break down how interviewers design debugging scenarios, what stages they expect you to move through, and where most candidates unintentionally fail, even when they’re technically strong.

Section 1 Takeaways

- Debugging interviews test diagnostic reasoning, not speed

- Interviewers care more about process than outcome

- Ambiguity is intentional, not a flaw

- These rounds strongly predict real on-the-job performance

SECTION 2: How Real-Time Debugging Interviews Are Structured (and How Signal Is Extracted)

Real-time debugging interviews may feel improvised, but they are usually highly structured beneath the surface. Interviewers are not waiting for you to stumble into the correct fix. They are watching how you move through a sequence of diagnostic stages, and where your reasoning breaks down.

Understanding this structure is one of the fastest ways to improve performance.

The Four Phases of a Debugging Interview

Most real-time debugging interviews implicitly follow the same four-phase arc, regardless of language, stack, or problem domain.

1. Problem Orientation

This phase begins the moment the interviewer presents a failing system, test, or snippet of code.

Interviewers observe:

- Do you restate the problem in your own words?

- Do you clarify expected vs. actual behavior?

- Do you ask about scope before touching the code?

Strong candidates resist the urge to dive in. They spend the first few minutes building a shared mental model.

Weak candidates start editing immediately.

2. Hypothesis Formation

Once the problem is understood, interviewers look for structured hypothesis generation:

- “This could be a data issue”

- “This might be a race condition”

- “This looks environment-specific”

What matters is not whether your first hypothesis is correct, but whether it is testable and bounded.

At companies like Amazon and Stripe, interviewers are explicitly trained to watch for candidates who enumerate possible failure modes before drilling into details. This mirrors how effective on-call engineers operate under pressure.

3. Evidence Gathering

This is where many candidates unintentionally fail.

Interviewers expect you to:

- Inspect logs or outputs selectively

- Use print statements or assertions intentionally

- Explain why each check is useful

Random poking is a red flag. Every action should be tied to a hypothesis.

This behavior maps closely to what’s discussed in From Model to Product: How to Discuss End-to-End ML Pipelines in Interviews, which highlights how interviewers evaluate ownership through systematic investigation rather than trial-and-error.
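Here is a minimal Python sketch of what "every action tied to a hypothesis" can look like. The `apply_discount` function and the recorded calls are hypothetical examples, not taken from any real system. The hypothesis under test is that some callers pass a percentage (20) where a fraction (0.20) is expected. The assertion checks exactly that and nothing else.

```python
def apply_discount(price: float, discount: float) -> float:
    """Hypothetical function under investigation: price after a fractional discount."""
    # Hypothesis: some callers pass 20 (a percentage) instead of 0.20 (a fraction).
    # This assertion tests only that hypothesis, so either result is informative.
    # Note: assert statements are skipped when Python runs with -O.
    assert 0.0 <= discount <= 1.0, f"discount out of range: {discount!r}"
    return price * (1.0 - discount)


def find_violations(calls):
    """Replay recorded (price, discount) calls and collect the ones that fail the check."""
    violations = []
    for price, discount in calls:
        try:
            apply_discount(price, discount)
        except AssertionError as exc:
            violations.append((price, discount, str(exc)))
    return violations


if __name__ == "__main__":
    recorded_calls = [(100.0, 0.2), (50.0, 20), (10.0, 0.0)]  # hypothetical sample
    for price, discount, reason in find_violations(recorded_calls):
        print(f"price={price} discount={discount}: {reason}")
```

If the check fires, the hypothesis is confirmed for those calls. If it never fires, a whole class of causes is eliminated. Either way, the next step becomes clearer.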

4. Decision and Communication

Even if you don’t fully resolve the bug, interviewers care deeply about:

- How you summarize findings

- Whether you can explain the most likely root cause

- What next steps you would take with more time

Clear synthesis at the end often outweighs incomplete execution.

How Interviewers Score You (Even When You’re “Stuck”)

Interviewers rarely score debugging interviews on binary outcomes. Instead, they assess along multiple dimensions:

- Search Strategy: Are you narrowing the problem space logically?

- Signal-to-Noise Ratio: Do your actions reduce uncertainty, or add chaos?

- Assumption Management: Do you validate assumptions or treat them as facts?

- Composure: Can you stay calm and structured when progress is slow?

A candidate who methodically explores the wrong hypothesis can outperform a candidate who accidentally finds the fix with no explanation.

Why Silence Is Interpreted Negatively

One of the biggest surprises for candidates is how damaging silence can be.

From the interviewer’s perspective:

- Silence = unobservable reasoning

- Unobservable reasoning = no signal

Interviewers cannot credit thinking they cannot see.

This is why narration is not optional in debugging interviews; it is the interface through which your competence is evaluated.

The Most Common Structural Failure Modes

Strong engineers still fail debugging interviews due to predictable mistakes:

- Premature Fixation: Locking onto a single hypothesis and ignoring contradictory evidence.

- Tool Worship: Reaching for debuggers, profilers, or frameworks without explaining intent.

- Local Optimization: Fixing symptoms instead of identifying root causes.

- Poor Closure: Ending the session without summarizing what was learned.

These are not skill gaps. They are process gaps.

Why Interviewers Interrupt You Mid-Debug

When interviewers interrupt, it’s rarely to help you. It’s to change the constraint:

- “Assume logs are unavailable”

- “Assume this only fails in production”

- “Assume you can’t reproduce locally”

They want to see whether your process survives disruption.

According to incident response research presented at SREcon, expert engineers outperform peers not by knowing more fixes, but by maintaining structure when information degrades. This directly informs how debugging interviews are escalated.

Reframing the Interview in Your Head

If you treat debugging interviews as:

- A puzzle to solve → you’ll rush

- A performance to impress → you’ll overcomplicate

If you treat them as:

- A diagnostic walkthrough → you’ll slow down

- A collaborative investigation → you’ll surface signal

This mental shift alone often improves outcomes dramatically.

Section 2 Takeaways

- Debugging interviews follow a predictable four-phase structure

- Interviewers score process, not just outcomes

- Narration is mandatory for signal extraction

- Adaptability matters more than correctness

SECTION 3: What “Good Debugging” Looks Like to Interviewers (and What Gets You Rejected)

In real-time debugging interviews, interviewers are not asking themselves, “Did this candidate find the bug?”

They are asking a more predictive question:

Would I trust this engineer to debug a production incident at 2 a.m.?

The difference between candidates who pass and those who fail often has little to do with technical depth and everything to do with how debugging behavior is expressed under pressure.

The Mental Model Interviewers Use

Interviewers implicitly classify candidates into three categories:

- Random Explorers: Try many things quickly with no clear direction.

- Local Fixers: Identify symptoms and patch them without understanding root cause.

- Systematic Diagnosticians: Narrow uncertainty step by step, using evidence.

Only the third group consistently passes.

At companies like Microsoft and LinkedIn, interview rubrics explicitly reward structured investigation over speed. Interviewers are trained to penalize “lucky fixes” that cannot be explained.

What Strong Debugging Behavior Looks Like

Strong candidates exhibit a consistent set of behaviors that interviewers recognize immediately.

1. They Anchor on Expected Behavior

Before touching the code, they clarify:

- What should happen?

- What actually happens?

- Under what conditions does it fail?

This prevents wasted exploration.
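A lightweight way to pin down expected vs. actual behavior is to write the expectations down as data before reading the implementation. The sketch below uses a hypothetical, deliberately buggy `is_leap_year`. The pattern of failures, rather than any single failure, answers "under what conditions does it fail?"

```python
def is_leap_year(year: int) -> bool:
    """Hypothetical buggy implementation under investigation."""
    return year % 4 == 0  # Bug: ignores the century (100/400) rule.


# Expected behavior, written down before touching the code.
EXPECTED = {
    1996: True,
    1999: False,
    1900: False,  # divisible by 100 but not by 400
    2000: True,   # divisible by 400
    2023: False,
    2100: False,
}


def characterize(fn, expected):
    """Return (input, expected, actual) for every case where fn disagrees."""
    return [(x, want, fn(x)) for x, want in expected.items() if fn(x) != want]


if __name__ == "__main__":
    for year, want, got in characterize(is_leap_year, EXPECTED):
        print(f"{year}: expected {want}, got {got}")
```

Both failures are century years not divisible by 400. That narrows the search to one branch of the logic before any code is edited.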

2. They Make Hypotheses Explicit

They say things like:

- “One possibility is…”

- “Another explanation could be…”

This allows interviewers to follow, and evaluate, their reasoning.

3. They Reduce the Problem Space Intentionally

Every action has a purpose:

- Print statements target a specific variable

- Tests isolate a specific path

- Logs confirm or eliminate hypotheses

This mirrors real-world incident response.
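When candidates can be put in order (commits, config versions, pipeline stages, input sizes), bisection is a concrete way to shrink the problem space, because each probe halves what remains. This is a generic Python sketch of the idea behind tools like `git bisect`, not a reimplementation of that tool. The commit numbers and the `is_bad` predicate in the usage are hypothetical.

```python
from typing import Callable, Optional, Sequence, TypeVar

T = TypeVar("T")


def first_bad(candidates: Sequence[T], is_bad: Callable[[T], bool]) -> Optional[T]:
    """Binary search for the first candidate where is_bad() is True.

    Assumes monotonicity: every candidate before the culprit is good and every
    candidate from the culprit onward is bad. Returns None if none are bad.
    """
    lo, hi = 0, len(candidates)  # the culprit, if any, is in candidates[lo:hi]
    while lo < hi:
        mid = (lo + hi) // 2
        if is_bad(candidates[mid]):
            hi = mid       # culprit is at mid or earlier
        else:
            lo = mid + 1   # culprit is after mid
    return candidates[lo] if lo < len(candidates) else None


if __name__ == "__main__":
    probes = []

    def is_bad(commit):
        # Hypothetical: pretend commit 11 introduced the bug.
        probes.append(commit)
        return commit >= 11

    print(first_bad(list(range(16)), is_bad), "found in", len(probes), "probes")
    # 11 found in 4 probes
```

Sixteen candidates take four probes instead of up to sixteen. The monotonicity assumption matters. If the bug is intermittent, a single "good" probe can send the search the wrong way, and that is worth saying out loud in the interview.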

4. They Revise Beliefs When Evidence Changes

Strong candidates abandon incorrect hypotheses quickly and without ego.

This is a major positive signal.

What Gets Candidates Rejected (Even When They’re Smart)

Many technically strong engineers fail debugging interviews due to behavioral anti-patterns.

Premature Certainty

Declaring a root cause too early and defending it despite contradictory evidence.

Tool-Driven Debugging

Running debuggers or linters without explaining what insight they expect to gain.

Silent Struggle

Thinking internally without narration, leaving the interviewer blind.

Over-Optimization

Trying to make the code “better” instead of understanding why it’s broken.

These patterns suggest risk in production environments.

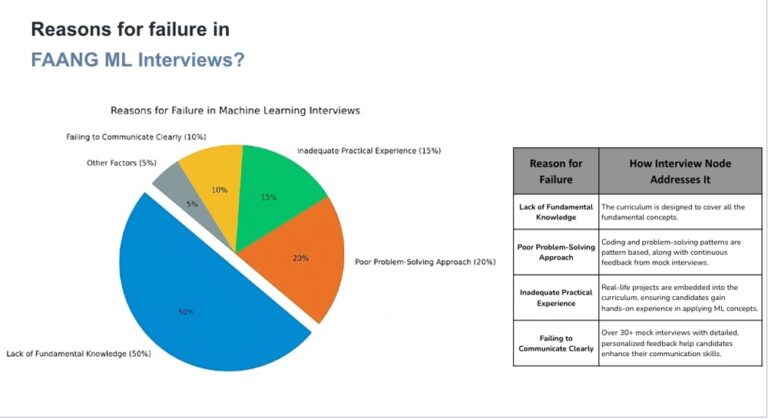

This distinction between surface competence and real engineering maturity is explored further in Why Software Engineers Keep Failing FAANG Interviews, which breaks down how interviewers interpret debugging behavior as a proxy for on-the-job reliability.

Why “Getting Stuck” Is Not a Failure

One of the most counterintuitive truths about debugging interviews is that getting stuck is expected.

Interviewers are not surprised when progress slows. What they watch is:

- Do you stay structured?

- Do you articulate uncertainty?

- Do you propose reasonable next steps?

A candidate who says:

“I’m not fully certain yet, but here’s the most likely cause and what I’d check next.”

often scores higher than one who silently flails.

The Role of Communication in Debugging Signal

Communication is not a “soft skill” here. It is the medium through which technical skill is evaluated.

Interviewers listen for:

- Clear problem statements

- Logical sequencing

- Calm tone under pressure

- Willingness to collaborate

A debugging interview is effectively a pair-debugging simulation.

How Interviewers Differentiate Junior vs. Senior Debuggers

Senior candidates are not expected to debug faster. They are expected to:

- Identify high-leverage checks earlier

- Avoid unnecessary complexity

- Articulate tradeoffs and risks

Juniors often dive into details. Seniors step back and ask:

“What’s the simplest explanation that fits the evidence?”

This difference is subtle but powerful.

According to engineering effectiveness research summarized by the IEEE Computer Society, expert debuggers outperform peers primarily through hypothesis management, not technical tricks. This directly informs how interviewers evaluate debugging rounds.
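You can make hypothesis management concrete in a simple way. For each candidate cause, write down what you would expect to observe if it were true, then discard the ones the evidence contradicts. The hypotheses and observation names below are hypothetical and mirror the examples from Section 2.

```python
from dataclasses import dataclass, field


@dataclass
class Hypothesis:
    name: str
    # What we would expect to observe if this hypothesis were true:
    # observation name -> predicted outcome.
    predictions: dict = field(default_factory=dict)

    def consistent_with(self, evidence: dict) -> bool:
        """True unless an observed fact contradicts one of this hypothesis's predictions.

        Observations the hypothesis makes no prediction about are ignored.
        """
        return all(
            self.predictions[key] == value
            for key, value in evidence.items()
            if key in self.predictions
        )


def surviving(hypotheses, evidence):
    """Names of hypotheses not yet contradicted by the evidence."""
    return [h.name for h in hypotheses if h.consistent_with(evidence)]


HYPOTHESES = [
    # If bad data were the cause, replaying a known-good input would not fail.
    Hypothesis("bad input data", {"fails_with_fixed_input": False}),
    # A race should disappear single-threaded and show up nondeterministically.
    Hypothesis("race condition", {"fails_single_threaded": False, "deterministic": False}),
    # An environment issue should not reproduce on a local machine.
    Hypothesis("environment-specific config", {"fails_locally": False}),
    Hypothesis("logic bug in parser", {"fails_with_fixed_input": True, "deterministic": True}),
]

if __name__ == "__main__":
    evidence = {"fails_locally": True}
    print(surviving(HYPOTHESES, evidence))
    evidence.update({"fails_with_fixed_input": True, "deterministic": True})
    print(surviving(HYPOTHESES, evidence))
```

Each new observation removes candidates instead of adding noise. The survivors are the "simplest explanations that fit the evidence" worth narrating next.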

How to Self-Evaluate Your Debugging Readiness

Ask yourself:

- Do I narrate my reasoning naturally?

- Do my actions always test a hypothesis?

- Can I summarize what I’ve learned at any point?

If the answer to any of these is no, that’s where to focus practice.

Section 3 Takeaways

- Interviewers evaluate diagnostic behavior, not bug-finding speed

- Structured hypothesis-driven debugging is the strongest signal

- Silence and premature certainty are major red flags

- Getting stuck is acceptable; losing structure is not

SECTION 4: How to Practice Real-Time Debugging Interviews Effectively

Practicing for real-time debugging interviews is fundamentally different from practicing coding or system design. You are not training recall or synthesis; you are training diagnostic behavior under uncertainty. Most candidates practice the wrong way, which is why even experienced engineers are often surprised by poor performance in these rounds.

Effective practice is less about volume and more about how you simulate failure.

Why Traditional Practice Methods Don’t Work

Many candidates attempt to prepare by:

- Solving more coding problems

- Reading debugging tips

- Watching others debug

These activities build awareness, not skill.

Debugging interviews require procedural fluency:

- Forming hypotheses quickly

- Testing them efficiently

- Communicating reasoning clearly

- Recovering when progress stalls

These skills only improve through deliberate simulation, not passive exposure.

Step 1: Practice With Broken, Not Clean, Code

Most coding practice involves starting from a blank slate. Debugging interviews start with existing systems that almost work.

Good practice materials include:

- Failing unit tests

- Intermittent bugs

- Code with misleading comments

- Logs that partially contradict behavior

The goal is not to fix quickly, but to practice narrowing uncertainty.
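Intermittent bugs become practice-friendly once they are replayable. The sketch below plants a hypothetical bug in a `chunk` helper: it drops a trailing partial chunk, so it only fails for some input lengths. It then uses seeded random inputs, so any failure can be regenerated exactly from its seed. Both helpers are illustrative, not from any library.

```python
import random


def chunk(items, size):
    """Hypothetical buggy helper: split items into lists of length `size`.

    Bug: the range stops too early, so a trailing partial chunk is dropped.
    """
    return [items[i:i + size] for i in range(0, len(items) - size + 1, size)]


def chunk_fixed(items, size):
    return [items[i:i + size] for i in range(0, len(items), size)]


def find_failing_seed(fn, size=4, max_seeds=100):
    """Try seeded random inputs and return (seed, items) for the first failure.

    Seeding makes an "intermittent" failure replayable: the same seed
    regenerates exactly the same input.
    """
    for seed in range(max_seeds):
        rng = random.Random(seed)
        items = list(range(rng.randint(0, 20)))
        flattened = [x for part in fn(items, size) for x in part]
        if flattened != items:
            return seed, items
    return None


if __name__ == "__main__":
    result = find_failing_seed(chunk)
    if result is not None:
        seed, items = result
        print(f"seed {seed} reproduces the bug with {len(items)} items")
```

Once a failing seed is known, the practice shifts from "it sometimes breaks" to "why does this exact input break?", which is the narrowing step interviewers want to see.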

This style of preparation aligns closely with Cracking ML Take-Home Assignments: Real Examples and Best Practices, which emphasizes structured investigation over brute-force iteration.

Step 2: Force Yourself to Narrate (Even When Alone)

Narration feels unnatural at first, but it is essential.

When practicing:

- Speak out loud

- Explain every hypothesis

- State why you are checking something

- Summarize findings periodically

If you cannot explain your reasoning coherently when alone, you will not do it under interview pressure.

Recording yourself and listening back is surprisingly effective.

Step 3: Practice Constraint Injection

Real interviews rarely let you debug in ideal conditions.

During practice, deliberately introduce constraints:

- “Assume logs are incomplete”

- “Assume you can’t run the code”

- “Assume the bug only occurs in production”

Then practice adapting your strategy without restarting from scratch.

This trains resilience and flexibility, two major evaluation signals.
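If you practice alone, a small script can play the interviewer who changes constraints mid-session. This is a hypothetical practice aid, not a standard tool. The 10-minute buffers at the start and end of the session are an arbitrary assumption.

```python
import random

# Constraints from the list above; extend with your own.
CONSTRAINTS = [
    "Assume logs are incomplete",
    "Assume you can't run the code",
    "Assume the bug only occurs in production",
]


def plan_drill(session_minutes=45, n_constraints=2, buffer_minutes=10, seed=None):
    """Return sorted (minute, constraint) pairs for a practice session.

    Injection minutes avoid the first and last `buffer_minutes`. That leaves time to
    form hypotheses before the first disruption and to synthesize after the last.
    Passing a seed makes the plan reproducible.
    """
    rng = random.Random(seed)
    chosen = rng.sample(CONSTRAINTS, k=n_constraints)
    window = range(buffer_minutes, session_minutes - buffer_minutes)
    minutes = sorted(rng.sample(window, k=n_constraints))
    return list(zip(minutes, chosen))


if __name__ == "__main__":
    for minute, constraint in plan_drill():
        print(f"At minute {minute}: {constraint}")
```

Don't look at the plan in advance. Have a timer or a partner read each constraint aloud at its minute.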

Step 4: Use Pair Debugging Simulations

If possible, practice with another engineer:

- One plays the interviewer

- One plays the candidate

The “interviewer” should:

- Withhold information

- Push back on assumptions

- Change constraints mid-way

The goal is not to help; it’s to simulate realistic friction.

Step 5: Focus on Closure, Not Perfection

Many candidates lose points in the final minutes.

Practice ending every session with:

- A summary of what you know

- The most likely root cause

- Clear next steps

Interviewers often remember the ending more than the middle.

Step 6: Build a Debugging Playbook

Over time, develop a personal checklist:

- Clarify expected behavior

- Reproduce or isolate

- Enumerate hypotheses

- Test the highest-leverage one

- Reassess

This is not a script; it’s a safety net.

What Not to Over-Optimize

Avoid spending time memorizing:

- Language-specific edge cases

- Debugger shortcuts

- Framework internals

Interviewers care about reasoning, not tricks.

According to developer productivity research published by Google’s SRE teams, the strongest engineers rely on structured investigation far more than specialized tools when diagnosing failures.

Measuring Progress the Right Way

You’re improving when:

- You feel calmer when stuck

- Your hypotheses become narrower

- Your narration becomes clearer

- You can summarize at any moment

Speed is a lagging indicator.

Section 4 Takeaways

- Debugging skill improves through simulation, not repetition

- Narration is a skill that must be practiced explicitly

- Constraint injection builds adaptability

- Clean closure often outweighs incomplete fixes

SECTION 5: What to Do During the Interview: Real-Time Tactics That Improve Outcomes

By the time a real-time debugging interview begins, the interviewer is no longer asking, “Does this candidate know enough?”

They are asking something more consequential:

Can I trust this engineer’s judgment when the system is failing and time is limited?

This section focuses on in-the-moment execution: the behaviors that turn an average debugging performance into a strong hire signal, even when the bug itself is not fully resolved.

1. Open by Stabilizing Ambiguity (This Is Not Optional)

The first 3–5 minutes are disproportionately important. Interviewers form an initial confidence judgment here, and everything after is interpreted through that lens.

Strong candidates immediately establish:

- Expected behavior (what the system should do)

- Observed behavior (what it’s actually doing)

- Scope (what’s definitely broken vs. unknown)

- Constraints (environment, time, access)

This is not filler; it’s risk management.

When you restate the problem clearly, you signal:

- Systems thinking

- Ownership

- Reduced cognitive load under pressure

Interviewers subconsciously relax when ambiguity is stabilized. Weak openings force interviewers to carry the mental load themselves, and that negatively biases the rest of the session.

2. Narration as an Engineering Interface (Not Commentary)

Narration is not about talking more; it’s about exposing your decision graph.

Effective narration has three elements:

- Hypothesis – what might be wrong

- Intent – why you’re checking something

- Outcome – what the result means

Example of weak narration:

“Let me check this file real quick.”

Example of strong narration:

“If this variable is null here, it would explain the failure path, so I’ll check where it’s initialized.”

This structure allows interviewers to score you even if:

- You choose the wrong hypothesis

- The bug is non-obvious

- Time runs out

This is the same principle discussed in How to Think Aloud in ML Interviews: The Secret to Impressing Every Interviewer. Interviewers cannot evaluate reasoning they cannot see.
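For solo practice, it can help to log each probe in the hypothesis, intent, outcome shape described above. That way a spoken summary is always one call away. `Probe` and `DebugJournal` are hypothetical names for a practice aid, not part of any library.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Probe:
    hypothesis: str                # what might be wrong
    intent: str                    # why this check is worth running
    outcome: Optional[str] = None  # what the result meant, filled in later
    ruled_out: bool = False


@dataclass
class DebugJournal:
    probes: List[Probe] = field(default_factory=list)

    def check(self, hypothesis: str, intent: str) -> Probe:
        """Record a hypothesis and the reason for checking it, before running the check."""
        probe = Probe(hypothesis, intent)
        self.probes.append(probe)
        return probe

    def record(self, probe: Probe, outcome: str, ruled_out: bool) -> None:
        """Attach the result of a check and whether it eliminated the hypothesis."""
        probe.outcome = outcome
        probe.ruled_out = ruled_out

    def summary(self) -> str:
        """A one-line status you could say out loud at any moment."""
        ruled_out = [p.hypothesis for p in self.probes if p.ruled_out]
        still_open = [p.hypothesis for p in self.probes if not p.ruled_out]
        return (
            f"Ruled out: {', '.join(ruled_out) or 'nothing yet'}. "
            f"Still open: {', '.join(still_open) or 'nothing'}."
        )


if __name__ == "__main__":
    journal = DebugJournal()
    p = journal.check("config value is null", "a null here would explain the failure path")
    journal.record(p, "config is populated at startup", ruled_out=True)
    journal.check("cache returns a stale entry", "stale data would produce the wrong total")
    print(journal.summary())
```

Writing the intent before running the check forces the "why am I looking here?" step that weak narration skips.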

3. Optimize for Leverage, Not Motion

Interviewers are allergic to random motion.

Every action you take should answer one of these questions:

- Does this eliminate an entire class of causes?

- Does this meaningfully reduce uncertainty?

- Does this inform the next decision regardless of outcome?

High-leverage checks include:

- Verifying assumptions at boundaries

- Inspecting inputs/outputs at system seams

- Validating invariants (“this should never be null”)

Low-leverage actions include:

- Refactoring early

- Skimming unrelated files

- Adding print statements without a hypothesis

A slow but focused candidate consistently outperforms a fast but noisy one.
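Checks at system seams are high-leverage because one result tells you which side of the boundary the problem lives on. The decorator below is a hypothetical helper, not a library API. It validates a precondition on the arguments and a postcondition on the return value. `lookup_user` and `USERS` are illustrative stand-ins.

```python
import functools


def check_boundary(pre=None, post=None):
    """Decorator that validates invariants where data crosses a function seam.

    `pre` receives the call's arguments and `post` receives the return value.
    Each should return True when its invariant holds.
    """
    def decorate(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            if pre is not None and not pre(*args, **kwargs):
                raise ValueError(f"{fn.__name__}: precondition failed for args={args!r}")
            result = fn(*args, **kwargs)
            if post is not None and not post(result):
                raise ValueError(f"{fn.__name__}: postcondition failed, got {result!r}")
            return result
        return wrapper
    return decorate


# Hypothetical seam: a lookup whose result "should never be None".
USERS = {1: "ada", 2: "grace"}


@check_boundary(pre=lambda user_id: isinstance(user_id, int),
                post=lambda name: name is not None)
def lookup_user(user_id):
    return USERS.get(user_id)


if __name__ == "__main__":
    print(lookup_user(1))
    try:
        lookup_user(3)
    except ValueError as exc:
        print(exc)
```

If the postcondition fires, the defect is in the lookup or its data. If it never fires, the seam is clean and attention moves downstream.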

4. Treat Pushback as a Promotion, Not a Threat

When an interviewer injects constraints such as these:

“Assume logs are unavailable.”

“Assume this only fails in production.”

They are not trying to trap you. They are escalating the signal level.

Strong response pattern:

- Acknowledge the new constraint

- Re-evaluate hypotheses

- Choose the next best probe

Weak response pattern:

- Defensiveness

- Repeating the same approach

- Arguing the constraint is unrealistic

From the interviewer’s perspective, adaptability under degraded conditions is one of the strongest predictors of real-world effectiveness.

5. Getting Stuck Is Normal, Losing Structure Is Not

Every strong engineer gets stuck. Interviewers expect it.

What they are watching for is how you behave when progress slows:

- Do you pause and reframe?

- Do you summarize what you know?

- Do you identify the highest remaining uncertainty?

A high-signal recovery sounds like:

“Given what we’ve ruled out, the most likely remaining cause is X. If I had more time, I’d validate it by checking Y.”

This demonstrates:

- Situational awareness

- Control

- Prioritization

Many interviewers weigh recovery behavior more heavily than uninterrupted progress.

6. Synthesis Is the Final Hiring Signal

The last 3–5 minutes matter more than most candidates realize.

Always close with:

- A most likely root cause (with confidence level)

- The evidence supporting it

- Next steps if more time were available

This mirrors real incident response. In production, clarity and next actions matter more than heroics.

Postmortem practices emphasized by Google SRE explicitly value clear hypotheses and forward action over perfect resolution, and this philosophy strongly influences how debugging interviews are evaluated.

7. The Mental Frame That Consistently Wins

The best mental model is not:

- “I need to fix this”

- “I need to impress”

It is:

“I am walking another engineer through my investigation.”

This reframing:

- Reduces anxiety

- Improves narration

- Encourages collaboration

- Surfaces senior-level signal

Interviewers hire engineers they would want next to them during an incident, not those who panic silently or thrash noisily.

Conclusion: Why Real-Time Debugging Interviews Are the Truest Signal of Engineering Maturity

Real-time debugging interviews exist for a simple reason: they compress real engineering reality into a short, observable window. Unlike algorithmic puzzles or design hypotheticals, debugging forces candidates to confront ambiguity, partial information, and pressure, which are exactly the conditions that define high-impact engineering work in production.

From an interviewer’s perspective, these rounds are not about cleverness or speed. They are about trust. Can this engineer be relied upon when systems fail, signals are noisy, and time is limited? Can they reason clearly, communicate effectively, and adapt when their initial assumptions break down?

Throughout this blog, one theme has surfaced repeatedly: process beats outcome. Candidates who approach debugging interviews as puzzles to be solved often fail, even when they find the bug. Candidates who approach them as investigations to be managed often pass, even when they don’t. This is not a paradox. It reflects how engineering work actually functions at scale.

Strong debugging performance demonstrates:

- Structured thinking under uncertainty

- Hypothesis-driven investigation

- Calm adaptation to new constraints

- Clear communication and synthesis

- Ownership without ego

These signals are far more predictive of long-term success than isolated technical brilliance.

It’s also important to recognize that real-time debugging interviews are not designed to be adversarial. They are simulations of collaboration. Interviewers are not trying to watch you struggle; they are trying to see how you would operate as a teammate during an incident or a critical failure. Silence, defensiveness, or random action makes collaboration impossible. Clear narration, structured reasoning, and thoughtful prioritization make it easy.

For candidates, the most important mindset shift is this:

You are not being evaluated on whether you “win.”

You are being evaluated on whether you are safe, effective, and reliable.

That shift changes everything, from how you open the interview, to how you respond when stuck, to how you close without a perfect resolution.

Preparation, therefore, should not focus on memorizing edge cases or mastering tools. It should focus on building a repeatable diagnostic process:

- Clarify before acting

- Hypothesize before testing

- Test with intent

- Revise quickly when wrong

- Synthesize clearly at the end

Engineers who internalize this process don’t just perform better in interviews; they become stronger on-call responders, mentors, and technical leaders.

Finally, it’s worth noting that the rise of real-time debugging interviews reflects a broader trend in hiring. As software systems grow more complex and AI-assisted coding reduces the value of raw implementation speed, companies are prioritizing judgment, reasoning, and resilience. Debugging interviews are one of the clearest ways to surface those qualities.

If you can debug well in real time, not by guessing but by thinking, you are demonstrating the kind of engineering maturity that top teams actively seek. And that is precisely why mastering this interview format is one of the highest-leverage investments you can make in your career.

Frequently Asked Questions (FAQs)

1) Do I have to find the bug to pass?

No. Interviewers score process, clarity, and adaptability. Many candidates pass without reaching a final fix.

2) Is it okay to ask questions during debugging?

Yes. Asking the right questions early is a positive signal.

3) Should I use advanced debugging tools?

Only if you explain why. Tool-first behavior without intent is a red flag.

4) How much should I talk?

Enough to make reasoning observable. Aim for concise hypotheses and outcomes.

5) What if I pick the wrong hypothesis?

That’s fine. Abandon it quickly when evidence contradicts it.

6) Are these interviews language-specific?

No. The signal is diagnostic reasoning, not syntax mastery.

7) How do interviewers differentiate senior candidates?

By leverage: faster narrowing, calmer recovery, clearer synthesis.

8) What’s the biggest mistake candidates make?

Silent struggle or premature certainty.

9) Can I refactor code during the interview?

Only after you’ve identified the root cause. Refactoring early looks like guessing.

10) How should I handle time pressure?

Acknowledge it and prioritize. State what you won’t investigate and why.

11) Are debugging interviews replacing coding rounds?

They increasingly complement them, especially for mid/senior roles.

12) How should I practice the last five minutes?

Practice synthesis: evidence, likelihoods, and next steps.

13) What mindset helps most?

Treat it like a collaborative incident response, not a test.

14) How many mock sessions are enough?

Fewer, higher-quality simulations with feedback on narration and leverage.

15) What ultimately gets candidates hired?

Trust. Interviewers must trust your process under uncertainty.