1. Introduction to Reinforcement Learning and its Importance in Interviews

Reinforcement Learning (RL) has emerged as a groundbreaking approach within machine learning, gaining prominence for its ability to solve complex sequential decision-making problems. From achieving superhuman performance in games like Go and Dota 2 to optimizing supply chain management in Fortune 500 companies, RL has proven its value across diverse industries. This success has piqued the interest of leading tech firms, making RL a coveted skill and an increasingly common topic in machine learning interviews.

Why Reinforcement Learning is Gaining Popularity in the Job Market

In the past few years, the demand for RL professionals has surged as companies strive to integrate more intelligent and adaptive systems into their operations. According to a 2023 report by LinkedIn, there has been a 40% year-over-year increase in job postings seeking RL expertise. Top-tier companies such as Google, Amazon, and OpenAI are on the lookout for candidates with RL skills, not only for research roles but also for positions focused on real-world applications.

The Role of Reinforcement Learning in Machine Learning Interviews

The growing relevance of RL in interviews is driven by the need for engineers who can think beyond standard supervised and unsupervised learning. Interviewers are not only looking for individuals who can implement algorithms but also those who understand the underlying mechanics of RL and can apply these concepts to new, unseen problems. RL problems in interviews typically fall into three categories:

Conceptual Understanding: Questions focusing on the candidate’s grasp of RL fundamentals such as policies, value functions, and reward signals.

Coding Exercises: Implementing RL algorithms, optimizing rewards, or solving small-scale RL environments.

Case Studies: Analyzing a real-world problem and determining how RL could provide a solution, often requiring discussion on model design, trade-offs, and potential pitfalls.

Interview Trends: Why Are Companies Focused on RL?

Tech companies are pushing the boundaries of AI with RL, creating systems that can autonomously learn and adapt over time. For instance, Google DeepMind has applied RL beyond games to problems such as energy optimization. Similarly, Uber has used RL to optimize its ride-sharing algorithms and improve efficiency. These high-impact applications demonstrate the power of RL, and employers seek candidates who can contribute to these kinds of innovative projects.

Moreover, RL interview questions are designed to evaluate a candidate’s problem-solving abilities, critical thinking, and creativity, all of which are valuable traits in dynamic, fast-paced tech environments. The complexity of RL scenarios also helps interviewers distinguish candidates who know machine learning theory in the abstract from those who can apply it to sequential decision-making problems.

Data on the Rising Importance of RL Skills in Interviews

Increased Demand: According to Burning Glass Technologies, job postings mentioning reinforcement learning have grown by 38% over the past year.

High Compensation: A 2022 study by Payscale indicated that professionals specializing in RL tend to earn 20-30% more than their counterparts focusing solely on traditional ML.

Recruiter Insights: In a survey conducted by InterviewNode, 60% of ML recruiters mentioned that they are actively seeking candidates with RL experience, citing it as a high-impact skill.

Incorporating RL knowledge into your skill set can set you apart in competitive job markets. Given the upward trend in demand, candidates who can demonstrate both practical and theoretical expertise in RL are well-positioned to secure roles at prestigious companies.

What to Expect in an RL Interview

Candidates interviewing for roles involving RL should be prepared to tackle problems that require more than just coding knowledge. Here’s a glimpse of what RL-related interview questions might entail:

Design Problems: How would you structure the state and action spaces for a drone navigation system?

Algorithm Analysis: Compare and contrast Q-learning with policy gradient methods.

Implementation Challenges: Given a sparse reward environment, how would you alter the training process to ensure convergence?

Answering these types of questions requires a solid understanding of RL algorithms, their limitations, and how to address practical challenges like exploration-exploitation trade-offs or dealing with non-stationary environments.

2. Fundamentals of Reinforcement Learning: A Quick Refresher

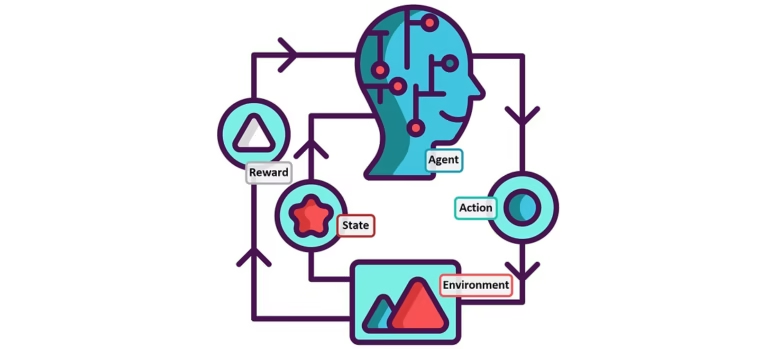

To grasp the real-world applications of reinforcement learning (RL) and its relevance in interviews, it’s important to understand its core principles. RL is a branch of machine learning in which an agent learns to make decisions by interacting with an environment. The objective is to maximize cumulative reward through trial and error. Unlike supervised learning, where models learn from labeled examples, RL learns from the feedback (rewards) produced by the consequences of the agent’s own actions.
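Concretely, the cumulative reward is usually the discounted return, G = r1 + gamma*r2 + gamma^2*r3 + ..., where the discount factor gamma (between 0 and 1) makes near-term rewards count more than distant ones. The sketch below is a minimal illustration, assuming the rewards of one finite episode are available as a Python list; gamma = 0.99 is just a common default, not a recommendation from this article.

```python
def discounted_return(rewards, gamma=0.99):
    """Compute G_0 = r_1 + gamma*r_2 + gamma^2*r_3 + ... for one finite episode."""
    g = 0.0
    # Walk backwards so each step reuses the later return: G_t = r_{t+1} + gamma * G_{t+1}
    for r in reversed(rewards):
        g = r + gamma * g
    return g


print(discounted_return([1.0, 1.0, 1.0], gamma=0.5))  # 1 + 0.5 + 0.25 = 1.75
```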

Key Concepts in Reinforcement Learning

Agent: The decision-maker that interacts with the environment to learn an optimal behavior.

Environment: The external system that the agent interacts with. It provides feedback based on the agent’s actions.

State: A representation of the current situation of the environment, which helps the agent decide its next action.

Action: A choice the agent makes in a given state to influence the environment. The set of all available choices is the action space.

Reward: A scalar feedback signal received from the environment after each action. It indicates how good or bad the action was.

Policy: A strategy that maps states to actions, guiding the agent on which action to take in a given state.

Value Function: The expected cumulative (usually discounted) reward an agent can obtain, starting from a particular state and following a given policy.

Q-Value (Action-Value): The expected cumulative reward of taking a particular action in a given state and following the policy thereafter. Q-learning, a popular RL algorithm, learns these values directly.
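To see how policies, value functions, and Q-values connect, here is a minimal sketch using a made-up Q-table (the states, actions, and numbers are hypothetical). A greedy policy picks the highest-valued action in each state, and the state value under that policy is the corresponding maximum Q-value.

```python
# Hypothetical Q-table: Q[state][action] is the expected cumulative reward of
# taking `action` in `state` and following the policy afterward.
Q = {
    "start":  {"left": 0.5, "right": 1.2},
    "middle": {"left": 2.0, "right": 0.3},
    "goal":   {"left": 0.0, "right": 0.0},  # terminal: nothing left to earn
}

# Greedy policy: map each state to its highest-valued action (ties -> first listed).
policy = {s: max(q, key=q.get) for s, q in Q.items()}
# State value under that policy: V(s) = max over actions of Q(s, a).
V = {s: max(q.values()) for s, q in Q.items()}

print(policy)  # {'start': 'right', 'middle': 'left', 'goal': 'left'}
print(V)       # {'start': 1.2, 'middle': 2.0, 'goal': 0.0}
```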

Exploring RL Algorithms

While there are many RL algorithms, the most commonly discussed ones in interviews include:

Q-Learning: A model-free algorithm that learns the quality of actions, making it suitable for environments with discrete state-action spaces.

Deep Q-Networks (DQN): Extends Q-learning by using deep neural networks to approximate the Q-value, making it applicable to environments with large state spaces.

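Policy Gradients: Directly optimize a parameterized policy by gradient ascent on the expected return, without requiring a learned value function (although one is often added as a baseline). Often used in environments with continuous action spaces.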

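Actor-Critic Methods: Combine value-based and policy-based methods. An actor (the policy) chooses actions while a critic (a value function) evaluates them, which typically reduces the variance of policy updates and speeds up convergence.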
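Because tabular Q-learning is the algorithm interviewers most often ask candidates to write from scratch, here is a minimal sketch on a hypothetical five-state corridor where the only reward is +1 at the far end. The environment, the hyperparameters (alpha, gamma, epsilon), and the episode count are illustrative assumptions. The core is the update Q(s, a) += alpha * (r + gamma * max_a' Q(s', a') - Q(s, a)).

```python
import random

# Hypothetical corridor: states 0..4, start at 0, +1 reward only on reaching
# state 4 (terminal). Actions: 0 = move left, 1 = move right.
N_STATES, GOAL = 5, 4
ACTIONS = (0, 1)


def step(state, action):
    """Return (next_state, reward, done); moving left at state 0 stays put."""
    next_state = max(0, state - 1) if action == 0 else min(GOAL, state + 1)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(N_STATES)]  # Q[state][action]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: explore with probability epsilon (or on a tie),
            # otherwise exploit the action with the higher Q-value.
            if rng.random() < epsilon or Q[state][0] == Q[state][1]:
                action = rng.choice(ACTIONS)
            else:
                action = 0 if Q[state][0] > Q[state][1] else 1
            next_state, reward, done = step(state, action)
            # Off-policy target: bootstrap from the best next action;
            # a terminal state has no future value.
            target = reward + (0.0 if done else gamma * max(Q[next_state]))
            Q[state][action] += alpha * (target - Q[state][action])
            state = next_state
    return Q


Q = q_learning()
greedy = ["right" if q[1] > q[0] else "left" for q in Q[:GOAL]]
print(greedy)  # ['right', 'right', 'right', 'right']
```

Because the target uses the maximum over next actions rather than the action actually taken, Q-learning is off-policy: it learns the greedy policy while behaving epsilon-greedily.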

How RL Concepts Translate into Interview Questions

In interviews, understanding these foundational concepts is crucial. A typical question might involve setting up an environment for an RL problem, defining state-action spaces, and determining a suitable reward function. Interviewers may also probe your understanding of exploration-exploitation trade-offs or ask you to modify an algorithm for a specific use case.

By mastering these basics, you’ll be well-prepared to tackle RL-related questions that require both a theoretical and practical understanding.

3. Real-World Applications of Reinforcement Learning

Reinforcement learning has made significant strides in transforming various industries. Understanding its real-world applications not only helps in solving interview problems but also provides insight into the impact of RL in practice. Let’s explore some notable applications:

Gaming: RL’s Dominance in Competitive Environments

RL first gained widespread attention through its success in complex games. AlphaGo, developed by Google DeepMind, utilized RL to defeat a world champion Go player—a game previously considered too complex for AI. RL agents have also excelled in games like Dota 2 and StarCraft, demonstrating strategic planning, real-time decision-making, and adaptability.

AlphaGo Zero: This RL-based model learned to master the game of Go by playing against itself, without any human game data or domain knowledge beyond the rules. It surpassed human-level performance within days.

Dota 2 and OpenAI Five: Using RL, OpenAI developed agents that achieved superhuman performance by learning teamwork and real-time strategy.

These successes have paved the way for RL’s adoption in environments requiring complex, sequential decision-making.

Robotics: Enabling Intelligent and Autonomous Systems

In robotics, RL is used to teach robots to perform tasks ranging from walking and grasping to complex assembly tasks. Companies like Boston Dynamics have leveraged RL to develop robots that can navigate dynamic environments, adapt to obstacles, and recover from falls.

Motion Planning: RL enables robots to determine optimal paths for movement, avoiding obstacles and minimizing energy consumption.

Manipulation Tasks: RL helps robots learn to manipulate objects, a key requirement for industrial automation and service robots.

This application is frequently discussed in interviews for robotics and autonomous systems roles, as it requires candidates to think through safety, efficiency, and adaptability.

Finance: Reinforcing Investment Strategies and Risk Management

In the finance industry, RL is employed to create dynamic trading strategies and manage portfolios. It optimizes decisions like asset allocation and trade execution in response to market changes. Unlike static, rule-based models, RL policies are designed to keep adapting as volatility and market conditions change.

Portfolio Management: RL algorithms balance the trade-off between risk and reward, aiming to achieve optimal portfolio returns.

Automated Trading: RL-driven trading bots learn when and how to trade from high-frequency market data, and in some reported cases have outperformed traditional strategies.

Interview questions often revolve around designing reward functions that reflect financial goals or simulating trading environments.

Healthcare: Personalizing Treatments and Drug Discovery

Healthcare has seen an increased adoption of RL to improve patient outcomes and optimize treatment plans. Personalized treatment strategies and drug discovery processes benefit significantly from RL’s ability to navigate complex decision spaces.

Treatment Recommendations: RL can model patient response to different treatment options and suggest personalized treatment plans.

Drug Discovery: RL is used to identify potential drug candidates by exploring chemical space, for example by generating candidate molecules and rewarding those with high predicted efficacy.

This application may appear in interview case studies, where candidates are asked to design an RL-based solution to a healthcare problem.

Autonomous Systems: Self-Driving Cars and Drones

Autonomous systems rely on RL for navigation, obstacle avoidance, and decision-making. Companies like Waymo and Tesla are using RL to enhance the driving experience, enabling cars to learn how to navigate roads safely and efficiently.

Self-Driving Cars: RL helps cars learn to navigate in diverse conditions, understand traffic rules, and avoid collisions.

Drones: RL-powered drones can perform tasks like surveillance, delivery, and inspection, adapting to dynamic environments.

These applications are highly relevant to interviews focused on control systems and autonomous navigation.

4. Reinforcement Learning Use Cases Relevant to Interview Questions

When preparing for RL interviews, it’s essential to understand how real-world use cases translate into interview scenarios. Here are some common themes that are likely to be tested:

1. Reward Function Design and Optimization

Designing a reward function is one of the most critical aspects of an RL problem. Poorly designed reward functions can lead to undesirable agent behaviors. Interviewers might ask you to propose a reward function for a given problem and discuss the potential trade-offs.

Interview Example: "How would you design a reward function for a robot that needs to sort colored balls into different bins, considering efficiency and accuracy?"

Key Considerations: Sparse rewards, delayed rewards, and shaping the reward to promote desired behavior.
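One possible answer to the ball-sorting question, sketched in Python under clearly hypothetical assumptions: the function signature and the weights (+1 for a correct bin, -0.5 for a wrong bin, -1 for a dropped ball, -0.01 per step) are illustrative choices, not a standard. The per-step cost targets efficiency, while the placement terms target accuracy.

```python
def sorting_reward(ball_color, bin_color, dropped, time_penalty=0.01):
    """Hypothetical per-step reward for a ball-sorting robot.

    ball_color, bin_color: color of the ball placed this step and of the bin it
    went into (None if nothing was placed). dropped: True if the ball fell.
    """
    reward = -time_penalty  # small cost every step encourages efficiency
    if dropped:
        reward -= 1.0       # penalize losing the ball
    elif ball_color is not None:
        # Accuracy: reward correct placements, penalize wrong bins.
        reward += 1.0 if ball_color == bin_color else -0.5
    return reward


print(sorting_reward("red", "red", dropped=False))   # 0.99
print(sorting_reward("red", "blue", dropped=False))  # -0.51
print(sorting_reward(None, None, dropped=False))     # -0.01
```

Tuning the relative weights is exactly the trade-off interviewers tend to probe: a large time penalty pushes the agent toward speed at the expense of accuracy.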

2. Dealing with Sparse Rewards

In many real-world scenarios, agents receive rewards only after completing a sequence of actions, leading to sparse feedback. Interview questions might focus on strategies to tackle this challenge, such as using reward shaping or intrinsic motivation.

Interview Example: "If an agent receives a reward only at the end of a maze, how can you modify the learning process to improve convergence?"

Approach: Techniques like Hindsight Experience Replay (HER) or defining subgoals can be effective solutions.
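Reward shaping can be sketched with potential-based shaping, which adds F = gamma * phi(s') - phi(s) to the environment reward; shaping of this form is known to leave the optimal policy unchanged. The potential phi(s) = -(Manhattan distance to the goal) below is a hypothetical choice for a grid maze, with positions assumed to be (row, col) tuples.

```python
def manhattan(a, b):
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def shaped_reward(reward, state, next_state, goal, gamma=0.99):
    """Add a potential-based shaping term to a sparse environment reward.

    The potential phi(s) = -manhattan(s, goal) is a hypothetical choice; it is
    0 at the goal, so the terminal state needs no special handling.
    """
    def phi(s):
        return -manhattan(s, goal)

    return reward + gamma * phi(next_state) - phi(state)


goal = (3, 0)
# The environment itself pays 0 until the goal is reached.
print(shaped_reward(0.0, (0, 0), (1, 0), goal) > 0)  # True: moved closer
print(shaped_reward(0.0, (1, 0), (0, 0), goal) < 0)  # True: moved away
```

The agent now gets a dense learning signal at every step instead of waiting for the single reward at the end of the maze.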

3. Multi-Agent Reinforcement Learning

Multi-agent RL involves multiple agents learning and interacting within the same environment. This scenario is commonly used in game-playing AI or collaborative robotics.

Interview Example: "Design an RL system for two drones that must collaborate to carry a heavy object across a room without dropping it."

Challenges: Coordination, communication, and handling competing objectives between agents.
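For the two-drone question, one common design is a single shared team reward, which makes the task fully cooperative and sidesteps competing objectives, at the cost of harder credit assignment (each drone cannot easily tell how much its own actions contributed). A minimal sketch; the inputs, weights, and tilt threshold are all hypothetical.

```python
def team_reward(progress_m, height_a, height_b, dropped=False, max_tilt_m=0.2):
    """Hypothetical shared reward for two drones carrying one object.

    progress_m: meters the object moved toward the target this step.
    height_a, height_b: altitudes of the two drones; a large gap tilts the load.
    """
    if dropped:
        return -10.0  # dropping the object is the worst outcome
    # Penalize only the height difference beyond the allowed tilt.
    tilt_excess = max(0.0, abs(height_a - height_b) - max_tilt_m)
    return progress_m - 5.0 * tilt_excess - 0.01  # -0.01: small per-step time cost


r = team_reward(progress_m=0.5, height_a=2.0, height_b=2.1)
# Both agents receive the identical value, which makes the task fully cooperative.
rewards = {"drone_a": r, "drone_b": r}
print(rewards)
```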

4. Ethical Considerations and Fairness in RL

RL systems must operate fairly and without bias, especially in critical applications like healthcare or finance. Interviewers may ask candidates to discuss the ethical implications of their RL model or propose safeguards to prevent biased decision-making.

Interview Example: "How would you ensure that an RL model used for loan approval does not exhibit bias against certain demographic groups?"

Solutions: Techniques like adversarial training, fairness constraints, and auditing the policy’s decision-making process.
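Auditing a policy can start with simple group-level metrics computed from its logged decisions. The sketch below computes the demographic parity gap (the largest difference in approval rates between groups), which is only one of several fairness criteria; the log format and data are hypothetical.

```python
from collections import defaultdict


def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs logged from the policy."""
    totals, approved = defaultdict(int), defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def demographic_parity_gap(decisions):
    """Largest difference in approval rate between any two groups."""
    rates = approval_rates(decisions)
    return max(rates.values()) - min(rates.values())


log = [("A", True), ("A", True), ("A", False), ("A", True),
       ("B", True), ("B", False), ("B", False), ("B", False)]
print(approval_rates(log))          # {'A': 0.75, 'B': 0.25}
print(demographic_parity_gap(log))  # 0.5
```

A gap above an agreed threshold would be a signal to add fairness constraints or revisit the reward and state features.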

5. Applying RL to Optimize Resource Allocation

Resource allocation problems, such as optimizing cloud resource usage or scheduling manufacturing tasks, are ideal for RL. Interviewers might present scenarios that require designing an RL solution to maximize resource utilization while minimizing costs.

Interview Example: "Propose an RL solution to allocate computing resources in a data center dynamically based on changing demand."

Approach: Techniques like Deep Q-Networks or Policy Gradient methods can be effective.
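Framing the data center question as an RL problem starts with an environment. The toy class below is a hypothetical sketch: the state is (demand, active servers), actions add or remove one server, and the reward trades energy cost against unmet demand. All constants and the random demand model are assumptions chosen for illustration.

```python
import random


class DataCenterEnv:
    """Hypothetical toy environment for dynamic server allocation.

    State: (current demand, active servers). Action: -1, 0, or +1 servers.
    Reward: minus energy cost of active servers minus a penalty for unmet demand.
    """

    def __init__(self, max_servers=10, server_cost=1.0, unmet_penalty=5.0, seed=0):
        self.max_servers = max_servers
        self.server_cost = server_cost
        self.unmet_penalty = unmet_penalty
        self.rng = random.Random(seed)
        self.reset()

    def reset(self):
        self.demand, self.servers = 5, 5
        return (self.demand, self.servers)

    def step(self, action):
        self.servers = min(self.max_servers, max(0, self.servers + action))
        unmet = max(0, self.demand - self.servers)
        reward = -self.server_cost * self.servers - self.unmet_penalty * unmet
        # Demand drifts randomly between steps (a stand-in for real traffic).
        drift = self.rng.choice((-1, 0, 1))
        self.demand = min(self.max_servers, max(0, self.demand + drift))
        return (self.demand, self.servers), reward


env = DataCenterEnv()
state = env.reset()
state, reward = env.step(+1)  # 6 servers, no unmet demand: reward -6.0
```

Any of the algorithms mentioned above (for example, a DQN over the three discrete actions) could then be trained against `step()`; a real system would replace the random demand drift with historical traffic.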

5. How to Approach RL Problems in Interviews

Reinforcement learning interview questions often require a structured approach to solve complex problems. Here’s a step-by-step guide to help you tackle RL problems effectively:

Step 1: Understand the Problem Statement

Before diving into code or algorithms, ensure you fully understand the problem and the desired outcome. Interviewers typically present scenarios that have multiple decision points, making it crucial to clarify the following:

Environment Specifications: What are the state and action spaces? Is the problem discrete or continuous?

Reward Structure: How are rewards assigned? Are they sparse or dense? Are there any potential pitfalls in the reward design?

Constraints and Trade-Offs: Are there any resource limitations, ethical considerations, or business-specific constraints?

For example, in a problem where a robot has to navigate a grid, you need to define what constitutes a successful completion (e.g., reaching the goal) and the penalties for taking wrong actions (e.g., bumping into obstacles).
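The grid example can be written down directly as a step function. This is a minimal sketch with hypothetical values: a +10 reward for reaching the goal, a -1 penalty for bumping into an obstacle or wall, and a -0.1 cost per move so that shorter paths score higher.

```python
# Hypothetical 4x4 grid: S = start, G = goal, X = obstacle.
GRID = [
    "S...",
    ".X..",
    "...X",
    "X..G",
]
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}
STEP_COST, OBSTACLE_PENALTY, GOAL_REWARD = -0.1, -1.0, 10.0


def grid_step(pos, action):
    """Return (next_pos, reward, done); bumping a wall or obstacle keeps the agent in place."""
    dr, dc = MOVES[action]
    r, c = pos[0] + dr, pos[1] + dc
    off_grid = not (0 <= r < len(GRID) and 0 <= c < len(GRID[0]))
    if off_grid or GRID[r][c] == "X":
        return pos, STEP_COST + OBSTACLE_PENALTY, False
    if GRID[r][c] == "G":
        return (r, c), GOAL_REWARD, True  # success ends the episode
    return (r, c), STEP_COST, False


print(grid_step((0, 0), "right"))  # ((0, 1), -0.1, False)
```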

Step 2: Choose the Right Algorithm for the Problem

Selecting the right RL algorithm is essential, as different algorithms perform better in certain types of environments. Here’s a brief guide:

Q-Learning: Ideal for problems with discrete state and action spaces. Suitable for grid-world scenarios or small-scale environments.

Deep Q-Networks (DQN): Useful when the state space is too large for a traditional Q-table, such as in image-based inputs or high-dimensional data.

Policy Gradients: Effective for continuous action spaces or environments where Q-values are difficult to estimate.

Actor-Critic Methods: A good choice for environments with complex interactions, such as multi-agent systems or environments with high-dimensional inputs.

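Consider an interview question like: “Design an RL solution for a drone that needs to navigate a dynamic environment.” If the drone’s controls (such as thrust and orientation) are continuous, you might choose a policy gradient method, as it handles continuous action spaces more naturally than tabular Q-learning or DQN, which assume a discrete set of actions.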
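To illustrate why policy gradients suit continuous actions, here is a minimal REINFORCE sketch with a Gaussian policy on a hypothetical one-step task, a stand-in for a single continuous control such as thrust. The policy samples a ~ Normal(mu, sigma), and the score-function gradient (a - mu) / sigma^2 pushes mu toward actions that earned above-average reward. The target, sigma, learning rate, and batch size are illustrative assumptions.

```python
import random


def reinforce_gaussian(target=2.0, sigma=0.5, lr=0.05, batch=32, updates=200, seed=0):
    """REINFORCE on a hypothetical one-step task with a continuous action.

    Policy: a ~ Normal(mu, sigma) with learnable mean mu (sigma fixed).
    Reward: -(a - target)^2, so the best action is `target`.
    """
    rng = random.Random(seed)
    mu = 0.0
    for _ in range(updates):
        actions = [rng.gauss(mu, sigma) for _ in range(batch)]
        rewards = [-(a - target) ** 2 for a in actions]
        baseline = sum(rewards) / batch  # mean reward as a variance-reducing baseline
        # Score function: d/dmu log N(a; mu, sigma) = (a - mu) / sigma^2
        grad = sum((r - baseline) * (a - mu) / sigma ** 2
                   for a, r in zip(actions, rewards)) / batch
        mu += lr * grad  # gradient ascent on expected reward
    return mu


mu = reinforce_gaussian()
print(f"learned mean: {mu:.2f}")  # close to the target of 2.0
```

No argmax over actions is ever needed, which is what makes this family of methods natural for continuous control; a real drone policy would compute mu from the state with a neural network.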

Step 3: Define the State and Action Spaces

In interviews, defining the state and action spaces correctly is often half the battle. States should capture all relevant information needed for decision-making, while actions should represent feasible choices the agent can take. For instance:

State Space for Self-Driving Car: Position, speed, distance to obstacles, traffic light state, etc.

Action Space: Acceleration, deceleration, steering angle.

For complex problems, decomposing the state space into meaningful features is crucial. This step tests your ability to understand and simplify real-world problems into manageable components.
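A state and action specification like the one above might be encoded as follows. The field names, units, and actuator limits are hypothetical; acceleration and deceleration are merged into one signed acceleration value, and continuous actions are clipped to safe ranges before execution.

```python
from dataclasses import dataclass


@dataclass
class CarState:
    """Hypothetical state features for a self-driving car."""
    x_m: float                 # position (meters)
    y_m: float
    speed_mps: float           # speed (meters per second)
    dist_to_obstacle_m: float  # distance to the nearest obstacle
    light_is_red: bool         # traffic light state

    def to_vector(self):
        # Flatten into numbers a policy can consume; the boolean becomes 0.0/1.0.
        return [self.x_m, self.y_m, self.speed_mps,
                self.dist_to_obstacle_m, float(self.light_is_red)]


# Continuous action: (signed acceleration in m/s^2, steering angle in radians).
ACCEL_LIMITS = (-5.0, 3.0)  # negative values mean braking
STEER_LIMITS = (-0.5, 0.5)


def clamp(value, low, high):
    return max(low, min(high, value))


def clip_action(accel, steer):
    """Keep a raw policy output within the (hypothetical) actuator limits."""
    return clamp(accel, *ACCEL_LIMITS), clamp(steer, *STEER_LIMITS)


state = CarState(x_m=0.0, y_m=0.0, speed_mps=12.0,
                 dist_to_obstacle_m=30.0, light_is_red=True)
print(state.to_vector())        # [0.0, 0.0, 12.0, 30.0, 1.0]
print(clip_action(10.0, -1.2))  # (3.0, -0.5)
```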

Step 4: Implementation and Optimization

Once you have a clear understanding of the problem and chosen algorithm, focus on implementation. Interviews may involve coding tasks where you have to implement an algorithm from scratch or optimize an existing solution. Be mindful of these key areas:

Hyperparameter Tuning: Learning rate, exploration-exploitation parameters, discount factors, etc.

Training Stability: Monitor the agent’s performance (for example, average episode return) over time to confirm that training is converging rather than oscillating or diverging.

Handling Overfitting: Use techniques like regularization, dropout, or increasing exploration to avoid overfitting.
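Two of these concerns can be made concrete in a few lines: an exponentially decaying exploration rate and a moving average of episode returns for monitoring stability. The decay rate, floor, and window size below are hypothetical defaults, not tuned values.

```python
from collections import deque


def epsilon_schedule(episode, start=1.0, end=0.05, decay=0.995):
    """Exponentially decay exploration from `start` toward a floor of `end`."""
    return max(end, start * decay ** episode)


class ReturnMonitor:
    """Track a moving average of episode returns to judge training stability."""

    def __init__(self, window=100):
        self.returns = deque(maxlen=window)  # keeps only the most recent returns

    def add(self, episode_return):
        self.returns.append(episode_return)
        return sum(self.returns) / len(self.returns)


monitor = ReturnMonitor(window=3)
for ret in [1.0, 2.0, 3.0, 10.0]:
    avg = monitor.add(ret)
print(avg)                     # 5.0 (average of the last 3 returns)
print(epsilon_schedule(0))     # 1.0
print(epsilon_schedule(5000))  # 0.05 (floor reached)
```

A moving average that plateaus early or swings widely is a cue to revisit the learning rate, exploration schedule, or reward design.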

Step 5: Test and Iterate

Test your solution thoroughly to ensure it performs well across different scenarios. Explain any assumptions you made and how you addressed potential limitations.

Interview Tip: If the interviewer asks, “What would you do if your model fails to converge?”, be prepared to discuss alternative algorithms, reward function modifications, or state/action space changes.

By following these steps, you’ll demonstrate a comprehensive approach to solving RL problems, which is exactly what interviewers are looking for.

6. Tools and Resources to Master RL for Interviews

Preparing for RL interviews requires access to the right resources. Here’s a curated list of tools, libraries, and learning platforms to help you build a strong foundation:

Reinforcement Learning Libraries

OpenAI Gym: A widely used toolkit for developing and comparing RL algorithms, now maintained by the Farama Foundation as Gymnasium. It provides various environments, from classic control problems to complex tasks like robotic simulation.

Ray RLlib: A scalable RL library that supports a wide range of algorithms. Ideal for working on large-scale projects or training multiple agents simultaneously.

Stable Baselines3: A set of high-quality implementations of popular RL algorithms, perfect for quick experimentation and testing.

TensorFlow Agents: A library built on TensorFlow, offering flexibility to experiment with different RL approaches and architectures.

Courses and Books

Courses:

Deep Reinforcement Learning Nanodegree (Udacity): Offers comprehensive coverage of RL topics, from basic Q-learning to advanced policy gradient methods.

Practical RL (Coursera): Focuses on hands-on problem-solving and practical applications of RL.

CS285: Deep Reinforcement Learning (UC Berkeley): An advanced course for those looking to dive deep into RL research.

Books:

“Reinforcement Learning: An Introduction” by Sutton and Barto: The quintessential book on RL, covering both foundational concepts and advanced topics.

“Deep Reinforcement Learning Hands-On” by Maxim Lapan: Offers practical guidance on implementing RL solutions using Python and PyTorch.

Mock Interview Platforms

LeetCode: While primarily focused on general coding problems, LeetCode’s premium subscription includes ML-specific questions.

InterviewNode: Provides tailored mock interviews, curated RL problems, and feedback from industry experts to help you prepare for RL interviews at top companies.

Kaggle: Participate in RL competitions to gain hands-on experience and improve your problem-solving skills.

These resources can serve as a strong foundation, helping you gain both theoretical knowledge and practical experience.

7. How InterviewNode Can Help You Prepare for These Interviews

InterviewNode specializes in preparing candidates for RL interviews through a comprehensive and personalized approach. Here’s how we can help you:

1. Personalized Mock Interviews

Our mock interviews simulate real-world interview scenarios, focusing on RL-specific problems that are often encountered at top tech companies. During these sessions, you’ll receive feedback on both your coding and conceptual understanding, helping you refine your approach.

Benefit: Identify your strengths and weaknesses, and receive actionable feedback from seasoned professionals.

2. One-on-One Mentorship

We connect you with mentors who have successfully navigated RL interviews and landed roles at companies like Google, Facebook, and Tesla. Our mentors provide insights into what to expect, how to structure your answers, and how to approach complex RL problems.

Benefit: Gain industry-specific knowledge and advice from experts who understand the interview process firsthand.

3. Curated Problem Sets and Learning Materials

Our problem sets are designed to cover a range of RL topics, from basic algorithms to advanced multi-agent scenarios. You’ll also gain access to curated learning materials, including tutorials, research papers, and implementation guides.

Benefit: Build a solid understanding of RL and practice on problems that mirror real interview questions.

4. Interview Readiness Assessments

We offer assessments to gauge your readiness for RL interviews. These assessments include coding problems, conceptual quizzes, and mock case studies to ensure you’re fully prepared.

Benefit: Benchmark your performance and identify areas for improvement before the actual interview.

5. Success Stories and Testimonials

Many of our clients have gone on to secure roles at leading companies like Google and Amazon. Our structured approach has consistently delivered results, helping candidates land their dream jobs.

Customer Testimonial: “Thanks to InterviewNode’s mock interviews and detailed feedback, I was able to confidently answer RL questions and secure a position at a top tech company.”

At InterviewNode, we are dedicated to helping you succeed. Our holistic approach ensures that you’re not only prepared for RL questions but also equipped with the skills to excel in your career.

8. Final Tips and Best Practices for RL Interviews

Here are some final tips and best practices to keep in mind when preparing for RL interviews:

Master the Basics: Ensure you have a solid understanding of RL fundamentals, such as Q-learning, policy gradients, and actor-critic methods. Brush up on foundational topics to build a strong knowledge base.

Practice Coding RL Algorithms: Implement RL algorithms from scratch in Python using libraries like NumPy and TensorFlow. This will help reinforce your understanding and prepare you for coding questions.

Explain Your Thought Process: Clearly articulate your thought process when answering conceptual questions. Interviewers value candidates who can explain complex topics in simple terms.

Prepare for Open-Ended Questions: Be ready to discuss how you would apply RL to a new problem. Think creatively and explore different approaches.

Stay Updated with Recent Advances: Keep up with the latest research in RL by following top conferences like NeurIPS, ICML, and ICLR.

9. Conclusion: Why Reinforcement Learning is Here to Stay

Reinforcement learning is poised to play a pivotal role in the future of AI, with its applications extending far beyond academic research. From gaming and robotics to healthcare and finance, RL is revolutionizing industries and creating new opportunities for those skilled in its principles.

Mastering RL can significantly boost your career prospects, especially if you’re aiming for roles at top tech companies. With the right preparation and guidance, such as that offered by InterviewNode, you can confidently navigate RL interview questions and demonstrate your expertise.

The demand for RL professionals will continue to grow as more companies adopt AI-driven solutions. By honing your RL skills and staying informed about industry trends, you’ll be well-positioned to contribute to groundbreaking projects and advance your career.