1. Introduction

In the fast-evolving world of machine learning (ML), building models that generalize well to unseen data is a crucial skill. A model's performance is often judged by how well it predicts on both the training data and new, unseen data. However, the challenge lies in managing two fundamental sources of error: bias and variance. This is where the bias-variance tradeoff in machine learning comes in, a key concept every ML engineer must grasp, especially when preparing for interviews at top companies like Google, Facebook, or Amazon.

The bias-variance tradeoff is all about balancing simplicity and complexity in a model. Too simple, and the model won't learn enough from the data (high bias). Too complex, and it might memorize the training data, capturing noise along with useful patterns (high variance). This blog will take you through everything you need to know about the bias-variance tradeoff, from definitions and examples to techniques for managing it. You'll also get tips for answering typical interview questions on this topic.

2. What is Bias?

Bias in machine learning refers to the error introduced by simplifying assumptions made by the model. These assumptions help the model generalize better but can also lead to underfitting, where the model cannot capture the complexity of the data.

Key Characteristics of Bias:

High bias means the model is too simple to understand the patterns in the data.

Models prone to high bias: Linear regression, shallow decision trees.

Consequences: The model consistently performs poorly on both training and test data, leading to inaccurate predictions.

Example: Imagine a linear regression model trying to predict house prices based on square footage, number of bedrooms, and other features. If the model is too simple (e.g., considering only square footage), it may miss the nuanced relationships between the other features and the target variable. This would lead to high bias, and the model would underfit the data.

Detecting High Bias:

Poor performance on both the training and validation datasets.

Minimal difference between the training and test errors.

Simplistic model that fails to capture underlying data trends.

To address high bias, consider increasing model complexity by adding more features or selecting a more sophisticated model like decision trees or neural networks.

3. What is Variance?

Variance in machine learning refers to the model’s sensitivity to the small fluctuations in the training data. A high-variance model will often perform well on the training data but poorly on unseen data, a clear sign of overfitting.

Key Characteristics of Variance:

High variance means the model is too sensitive to noise in the training data, memorizing details rather than learning general patterns.

Models prone to high variance: Deep decision trees, deep neural networks.

Consequences: Overfitting, where the model performs exceptionally well on training data but generalizes poorly to new, unseen data.

Example: In contrast to high bias, imagine a deep decision tree that splits data based on small nuances. This model might perform perfectly on the training data but will likely perform poorly on validation or test datasets due to its tendency to overfit to the training data.

Detecting High Variance:

Large gap between training and validation/test error (training error is low, but validation/test error is high).

The model performs well on the training set but fails to generalize.

To mitigate high variance, strategies such as regularization, cross-validation, or ensemble techniques like bagging can help improve generalization.

4. Understanding the Tradeoff

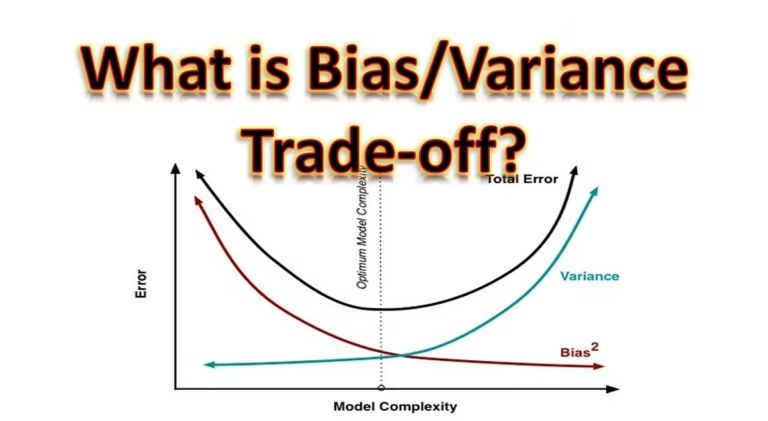

The bias-variance tradeoff describes the delicate balance between bias and variance that machine learning professionals must navigate. Reducing bias often increases variance, and vice versa.

Model Complexity and the Tradeoff:

Simple models (e.g., linear regression) tend to have high bias and low variance, often underfitting the data.

Complex models (e.g., deep neural networks) have low bias but high variance, with a tendency to overfit.

The tradeoff is about finding the “sweet spot” where both bias and variance are balanced, minimizing the total error (which is the sum of bias squared, variance, and irreducible error).

Real-World Example:

Consider a housing price prediction problem:

High Bias: A linear regression model might underfit, as it may not capture nonlinear relationships like the effect of location, market trends, or proximity to amenities.

High Variance: A deep neural network trained on limited data could overfit, memorizing the prices of specific houses rather than generalizing price trends.

The U-shaped Error Curve demonstrates this tradeoff. As model complexity increases, the bias decreases, but variance increases. The total error curve first drops as the model becomes more flexible, but after a certain point, it rises due to overfitting.

5. Techniques to Manage the Bias-Variance Tradeoff

Managing the bias-variance tradeoff is a balancing act that requires a mix of strategies. Here are some effective techniques:

Cross-Validation:

What it is: A technique used to evaluate model performance on unseen data by splitting the dataset into subsets.

K-Fold Cross-Validation: One of the most common methods, where the data is divided into 'k' subsets, and the model is trained 'k' times, each time using a different subset as the validation set and the rest as training.

Impact: Helps assess generalization performance, reducing both variance and bias by averaging results over multiple folds.

Regularization:

L1 Regularization (Lasso): Adds a penalty proportional to the absolute value of coefficients, pushing irrelevant feature weights to zero.

L2 Regularization (Ridge): Penalizes the square of the coefficients, shrinking the weights but not eliminating them entirely.

Impact: Regularization techniques help control model complexity, reducing overfitting and variance while maintaining accuracy.

Ensemble Methods:

Bagging: Combines multiple versions of a model trained on different subsets of data to reduce variance. Example: Random Forest.

Boosting: Sequentially builds models by correcting errors of previous models, effectively reducing bias.

Impact: Both methods help strike a balance between bias and variance, improving the robustness and performance of the model.

Hyperparameter Tuning:

Using grid search or random search, you can adjust hyperparameters like learning rates, tree depths, or regularization strengths to find the optimal configuration that balances bias and variance.

6. Real-World Examples of the Bias-Variance Tradeoff

1. Healthcare Diagnosis:

A simple logistic regression model might underfit (high bias) in diagnosing diseases by oversimplifying the factors involved.

Conversely, a complex model, like a deep neural network trained on limited data, might overfit (high variance), capturing patterns unique to the training set but not generalizable to new patients.

2. Financial Forecasting:

High-bias models might miss out on profitable opportunities by making overly conservative predictions.

High-variance models, on the other hand, might make erratic predictions based on fluctuations, leading to substantial financial losses.

7. Typical Bias-Variance Interview Questions and Answers

Here are some commonly asked interview questions related to bias-variance, along with sample answers:

Question 1: What is the bias-variance tradeoff in simple terms?

Answer: The bias-variance tradeoff is the balancing act between a model being too simple (high bias, underfitting) and too complex (high variance, overfitting). A model should neither oversimplify nor overfit the data.

Question 2: How can you detect overfitting in a model?

Answer: Overfitting is detected when a model performs exceptionally well on the training data but poorly on validation or test data. Techniques like cross-validation and looking at performance metrics can help identify overfitting.

Question 3: How would you handle a model with high bias?

Answer: To reduce bias, I would increase the model’s complexity, perhaps by adding features, selecting a more complex algorithm, or using ensemble methods like boosting.

Question 4: What are some techniques to reduce variance in a high-variance model?

Answer: To reduce variance, I would apply regularization techniques like Lasso or Ridge, use ensemble methods like bagging, or introduce cross-validation.

Question 5: How does model complexity relate to the bias-variance tradeoff?

Answer: As model complexity increases, bias typically decreases because the model captures more details in the data. However, this also leads to higher variance as the model may start overfitting, making it crucial to find the right balance.

8. Conclusion

Understanding the bias-variance tradeoff is critical for building models that generalize well to new data. By managing model complexity and applying techniques like regularization, cross-validation, and ensemble methods, you can strike a balance between bias and variance. For software engineers preparing for machine learning interviews, mastering this concept is essential for demonstrating deep ML knowledge and problem-solving skills.