1. Introduction

Reinforcement Learning (RL) has rapidly emerged as one of the most impactful fields within artificial intelligence, powering breakthrough technologies such as Google DeepMind's AlphaGo, OpenAI’s game-playing agents, and various self-driving algorithms. As tech giants and innovative startups continue to push the boundaries of what AI can achieve, the demand for engineers with expertise in RL has risen dramatically.For software engineers aspiring to work on cutting-edge AI projects, mastering RL is crucial to securing roles at top companies like Google, Facebook, and Tesla. Strong preparation is essential to succeed in a reinforcement learning interview.

This blog is designed to help you navigate RL interviews by covering the essential concepts, most frequently asked questions, and proven preparation strategies. Whether you're preparing for an interview or looking to deepen your understanding of RL, this comprehensive guide will provide you with the tools you need to excel.

2. Importance of Reinforcement Learning in the Industry

Role of RL in Advancing AIReinforcement Learning has been instrumental in enabling machines to make decisions, learn from the environment, and maximize cumulative rewards over time. Unlike supervised learning, which relies on labeled datasets, RL involves an agent learning through interactions with its environment. This unique learning paradigm has found applications across multiple sectors:

Robotics: RL algorithms allow robots to autonomously navigate environments and perform complex tasks, such as warehouse management or drone flight control.

Gaming and AI Agents: AlphaGo, developed by Google DeepMind, used RL to defeat world champions in the game of Go, demonstrating RL’s potential in mastering complex strategy games.

Finance: RL algorithms are applied in trading strategies to maximize returns and manage portfolio risks.

Autonomous Vehicles: Companies like Uber and Tesla utilize RL for training self-driving cars to handle dynamic road conditions and make real-time decisions.

Market Demand for RL SkillsThe demand for RL expertise is growing rapidly, with job postings for machine learning and RL engineers increasing by over 25% year-over-year according to data from LinkedIn and Glassdoor. Companies are willing to pay a premium for these skills; salaries for RL engineers often exceed $150,000 annually, with senior-level roles and research positions offering even higher compensation.

According to a report by MarketsandMarkets, the AI market is expected to reach $309.6 billion by 2026, with reinforcement learning playing a critical role in sectors such as autonomous systems, personalized marketing, and robotics. This growth translates into ample opportunities for RL professionals, making it an excellent career path for those interested in cutting-edge technology.

3. Core Reinforcement Learning Concepts to Master

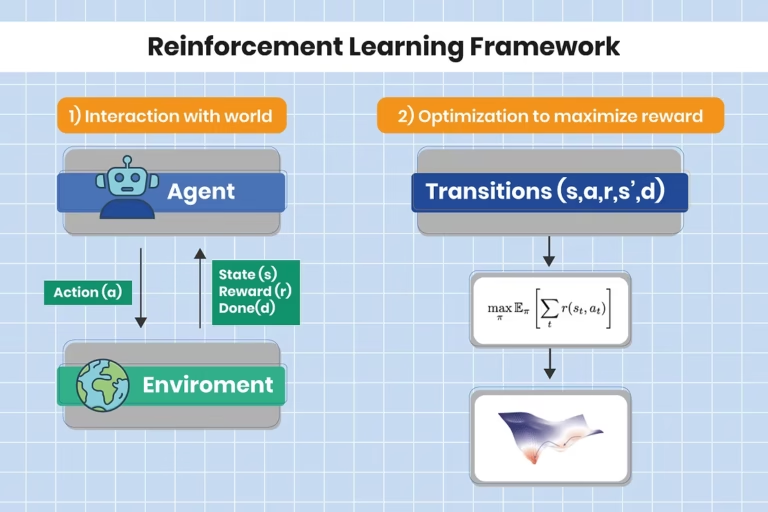

3.1. What is Reinforcement Learning?Reinforcement Learning (RL) is a subset of machine learning where an agent learns to make decisions by interacting with its environment. The primary goal of RL is to learn a policy that maximizes the cumulative reward. Unlike supervised learning, which uses labeled data, or unsupervised learning, which finds hidden structures in data, RL focuses on an agent's continuous learning through feedback from the environment.

Agent-Environment Framework:

Agent: The decision-maker that takes actions.

Environment: Everything the agent interacts with.

State (s): The current situation of the agent in the environment.

Action (a): The decision or move the agent takes.

Reward (r): Feedback from the environment based on the agent’s action.

3.2. Key ConceptsUnderstanding the following RL concepts is crucial for any interview:

Markov Decision Processes (MDPs):An MDP is a mathematical framework for modeling decision-making, defined by the tuple (S, A, P, R, γ), where:

S: Set of states.

A: Set of actions.

P: Transition probabilities between states.

R: Reward function.

γ: Discount factor that determines the importance of future rewards.

Policy and Value Functions:A policy (π) defines the agent's behavior, mapping states to actions. The value function evaluates the expected return for each state-action pair under a specific policy. There are two main types:

State-Value Function (Vπ(s)): Expected return starting from state s and following policy π.

Action-Value Function (Qπ(s, a)): Expected return starting from state s, taking action a, and following policy π thereafter.

Exploration vs. Exploitation:Balancing exploration (trying new actions to discover rewards) and exploitation (choosing actions that maximize known rewards) is a core challenge in RL. Methods like the ε-greedy strategy help balance this trade-off by choosing random actions with probability ε and the best-known action with probability 1-ε.

Temporal Difference Learning (TD):TD learning is a model-free RL method that learns directly from raw experience without a model of the environment. The update rule is:V(s)←V(s)+α[r+γV(s′)−V(s)]V(s) \leftarrow V(s) + \alpha [r + \gamma V(s') - V(s)]V(s)←V(s)+α[r+γV(s′)−V(s)]where α is the learning rate, and (r + γ V(s') - V(s)) is the TD error.

Q-Learning and Deep Q-Learning:Q-Learning is a value-based RL algorithm used to find the optimal action-selection policy using the Bellman equation. Deep Q-Learning extends this approach by using deep neural networks to approximate the Q-values for each state-action pair.

Policy Gradient Methods:Instead of learning value functions, policy gradient methods optimize the policy directly. Algorithms like REINFORCE and Proximal Policy Optimization (PPO) use gradients to improve the policy iteratively.

3.3. Advanced TopicsAdvanced RL topics include:

Hierarchical RL: Breaking down complex tasks into smaller sub-tasks.

Multi-agent RL: Coordination between multiple RL agents in a shared environment.

Model-based RL: Building models of the environment to plan and improve learning efficiency.

4. Key Questions Asked in RL Interviews

4.1. Fundamental Questions and Answers

Define RL and its applications.Answer: Reinforcement Learning (RL) is a machine learning paradigm where an agent learns to make decisions by interacting with its environment. It maximizes cumulative rewards over time by taking a series of actions. Applications of RL include robotics (e.g., autonomous navigation), gaming (e.g., AlphaGo), finance (e.g., algorithmic trading), and self-driving cars (e.g., Tesla's Autopilot).

Explain the concept of a Markov Decision Process (MDP).Answer: An MDP is a mathematical framework used to describe an environment in RL, defined by the tuple (S, A, P, R, γ):

S: Set of states.

A: Set of actions available to the agent.

P: Transition probabilities between states, P(s' | s, a).

R: Reward function that maps a state-action pair to a reward.

γ: Discount factor that determines the importance of future rewards.MDPs are essential because they model the environment and help define the agent's decision-making process.

Describe Q-learning and the Bellman Equation.Answer: Q-learning is a value-based RL algorithm that aims to find the optimal policy by learning the value of state-action pairs, denoted as Q(s, a). The Bellman Equation provides a recursive way to calculate the value of these state-action pairs:Q(s,a)←Q(s,a)+α[r+γmaxa′Q(s′,a′)−Q(s,a)]Q(s, a) \leftarrow Q(s, a) + \alpha [r + \gamma \max_a' Q(s', a') - Q(s, a)]Q(s,a)←Q(s,a)+α[r+γamax′Q(s′,a′)−Q(s,a)]where α is the learning rate, r is the reward, and γ is the discount factor. Q-learning updates Q-values iteratively until the optimal policy is found.

What are the differences between supervised, unsupervised, and reinforcement learning?Answer:

Supervised Learning: Uses labeled data to learn a mapping from input to output. Common tasks include classification and regression.

Unsupervised Learning: Uses unlabeled data to find patterns or groupings in the data (e.g., clustering, dimensionality reduction).

Reinforcement Learning: Involves an agent interacting with an environment, learning to maximize cumulative reward through trial and error. It does not require labeled data but learns from feedback.

What is the exploration-exploitation trade-off?Answer: The exploration-exploitation trade-off is a fundamental dilemma in RL. It refers to the balance between exploring new actions to discover their potential rewards (exploration) and choosing actions that maximize known rewards based on past experiences (exploitation). An effective RL agent needs to balance both strategies to learn efficiently. Strategies like ε-greedy help manage this trade-off by selecting random actions with probability ε and the best-known action with probability 1-ε.

Explain the concept of a policy in RL. What is the difference between a deterministic policy and a stochastic policy?Answer: A policy (π) defines the agent’s behavior and maps states to actions.

Deterministic Policy (π(s)): Maps each state to a specific action.

Stochastic Policy (π(a|s)): Provides a probability distribution over actions given a state, allowing for randomness in action selection. Stochastic policies are useful in environments with uncertainty or noise.

What are the advantages of model-free RL over model-based RL? When would you use one over the other?Answer:

Model-free RL: Does not require a model of the environment and learns purely from interaction. It is easier to implement and is often used in complex environments where building a model is infeasible.

Model-based RL: Uses a model of the environment to plan and predict future states. It is more sample-efficient but can be computationally expensive. Use model-based RL when a reliable model of the environment is available, and sample efficiency is critical.

Describe the role of the discount factor (γ) in RL. What happens when γ is set to 0 or 1?Answer: The discount factor (γ) determines the importance of future rewards compared to immediate rewards.

When γ = 0, the agent only considers immediate rewards, ignoring future gains.

When γ = 1, the agent values future rewards equally to immediate rewards, potentially leading to long-term planning. However, in practice, γ is often set slightly less than 1 (e.g., 0.9) to ensure convergence.

What is the difference between on-policy and off-policy learning? Give examples of each.Answer:

On-policy learning: The agent learns the value of the policy it is currently following. Example: SARSA (State-Action-Reward-State-Action).

Off-policy learning: The agent learns the value of the optimal policy regardless of the policy it is following. Example: Q-learning.

Can you explain the curse of dimensionality in RL? How does it impact the agent’s learning process?Answer: The curse of dimensionality refers to the exponential increase in the size of the state and action space as the number of variables increases. It makes learning more difficult because the agent needs more data to accurately learn values for each state-action pair. Techniques like function approximation (using neural networks) and dimensionality reduction are used to address this issue.

What are eligibility traces, and how do they improve temporal difference methods?Answer: Eligibility traces are a mechanism that helps combine temporal difference (TD) learning with Monte Carlo methods. They keep track of visited states and apply credit for rewards to these states based on how recently they were visited. This improves learning by providing a bridge between one-step TD and Monte Carlo methods, allowing faster propagation of rewards.

What is value iteration, and how does it differ from policy iteration?Answer:

Value Iteration: Directly updates the value of each state until convergence, then derives the policy based on these values.

Policy Iteration: Alternates between policy evaluation (calculating value functions based on a policy) and policy improvement (updating the policy based on value functions). Policy iteration often converges faster because it focuses on refining policies.

What are potential-based reward shaping and intrinsic rewards? How do they improve learning?Answer:

Potential-based reward shaping: Adds a potential-based function to the reward to guide the agent’s exploration without altering the optimal policy.

Intrinsic rewards: Encourage exploration or specific behaviors by providing additional rewards for visiting new states or achieving subgoals. Both methods accelerate learning by providing richer feedback.

4.2. Conceptual and Theoretical Questions and Answers

Explain the Bellman optimality equation and its significance in RL.

Answer: The Bellman optimality equation is a fundamental concept in RL, used to express the value of a state as the expected return starting from that state and following the optimal policy. It breaks down the value of a state into the immediate reward and the value of the next state, recursively. The Bellman equation for state-value function VVV is:V(s)=maxa∑s′P(s′∣s,a)[R(s,a,s′)+γV(s′)]V(s) = \max_a \sum_{s'} P(s'|s, a) [ R(s, a, s') + \gamma V(s') ]V(s)=amaxs′∑P(s′∣s,a)[R(s,a,s′)+γV(s′)]where:

P(s′∣s,a)P(s'|s, a)P(s′∣s,a) is the probability of transitioning to state s′s's′ from state sss after taking action aaa.

R(s,a,s′)R(s, a, s')R(s,a,s′) is the immediate reward received after transitioning to state s′s's′.

γ\gammaγ is the discount factor.This equation forms the basis of many RL algorithms like value iteration and Q-learning, as it provides a way to compute the optimal value function and, subsequently, the optimal policy.

What is the difference between value-based and policy-based methods? Give examples of each.

Answer:

Value-based Methods: These methods learn the value function, which estimates the expected return of being in a state or taking a certain action in a state. The policy is indirectly derived from the value function by choosing actions that maximize the value. Examples include Q-learning, Deep Q-Networks (DQN), and SARSA.

Policy-based Methods: These methods learn the policy directly by optimizing a parameterized policy function. They do not require value function estimation. Policy-based methods are particularly useful for problems with large or continuous action spaces. Examples include REINFORCE, Proximal Policy Optimization (PPO), and Trust Region Policy Optimization (TRPO).

Describe the Actor-Critic architecture. How does it address the limitations of traditional value-based methods?

Answer: The Actor-Critic architecture combines the strengths of both policy-based and value-based methods. It consists of two components:

Actor: Learns the policy directly, determining which action to take based on the current state.

Critic: Evaluates the actions taken by the Actor by estimating the value function or the advantage function, providing feedback in the form of a TD error.The Critic helps reduce the variance of policy gradient estimates, making the learning process more stable and efficient. This architecture is widely used in modern RL algorithms like Asynchronous Advantage Actor-Critic (A3C) and Deep Deterministic Policy Gradient (DDPG).

How would you explain policy gradients to a non-technical audience?

Answer: Imagine you are training a dog to perform a trick. Each time the dog performs the trick correctly, you give it a treat. Over time, the dog learns to perform the trick more consistently to get more treats. Policy gradients work in a similar way; the RL agent tries different actions (tricks) in its environment and receives rewards (treats). The policy gradient algorithm helps the agent improve its behavior by adjusting its actions to get more rewards in the future, just like the dog improves its tricks to get more treats.

What is the variance-bias trade-off in policy gradient methods? How does it affect learning?

Answer:

Bias: Indicates the difference between the expected value of an estimator and the true value. High bias occurs when the model is overly simplistic and does not capture the underlying environment dynamics.

Variance: Indicates how much the estimate changes with different samples. High variance occurs when the model is too complex and fits the noise in the environment.In policy gradient methods, high variance can cause unstable updates and slow convergence, while high bias can lead to suboptimal policies. Strategies like baselines (e.g., using the value function to reduce variance) and more sophisticated algorithms like Advantage Actor-Critic (A2C) help address this trade-off.

Compare and contrast different exploration strategies like ε-greedy, softmax, and Upper Confidence Bound (UCB).

Answer:

ε-Greedy: Chooses the best-known action with probability (1-ε) and a random action with probability ε. It is simple and effective but may not explore sufficiently in complex environments.

Softmax: Assigns a probability to each action based on their Q-values, making it more likely to choose higher-value actions while still exploring others. It is more sophisticated than ε-greedy but computationally more expensive.

Upper Confidence Bound (UCB): Chooses actions based on their estimated value and an uncertainty measure, balancing exploration and exploitation more effectively. UCB is commonly used in bandit problems and multi-armed bandit settings.

What are the advantages and disadvantages of deep Q-learning over traditional Q-learning?

Answer:

Advantages of Deep Q-Learning (DQN):

Handles high-dimensional state spaces using neural networks as function approximators.

Learns complex state-action mappings without manual feature engineering.

Can be used with raw pixel inputs (e.g., images) for complex environments.

Disadvantages of DQN:

Computationally expensive and requires significant training time.

Prone to instability and divergence if not implemented carefully (e.g., due to correlated samples).

Sensitive to hyperparameters like learning rate and network architecture.

Explain the difference between deterministic policy gradients (DPG) and stochastic policy gradients.

Answer:

Deterministic Policy Gradients (DPG): Focus on learning a deterministic policy, which maps each state to a specific action. This is useful in environments with continuous action spaces. The gradient is computed directly using the chain rule on the action-value function Q(s,a)Q(s, a)Q(s,a). Example: Deep Deterministic Policy Gradient (DDPG).

Stochastic Policy Gradients: Optimize a stochastic policy that outputs a probability distribution over actions. The policy gradient is computed using the likelihood ratio method. Stochastic policies are more robust in environments with uncertainty or noisy feedback.

What is a replay buffer, and why is it used in deep RL? How does it help mitigate the problem of correlated samples?

Answer: A replay buffer is a memory structure used to store past experiences (state, action, reward, next state) during training. The agent samples mini-batches of experiences from this buffer to learn, rather than using consecutive samples. This technique:

Breaks correlation between samples: Ensures that training data is more diverse and less biased towards recent experiences.

Improves sample efficiency: Allows the agent to reuse experiences, making the learning process faster and more stable.Replay buffers are an essential component in Deep Q-Networks (DQN) and other deep RL algorithms.

How does a target network stabilize the training of DQNs?

Answer: In Deep Q-Networks (DQN), the use of a target network helps stabilize training by reducing the risk of divergence. The target network is a copy of the main Q-network and is used to calculate the target Q-values during training. It is updated less frequently than the main network (e.g., every few episodes), providing a more stable reference for Q-value updates and reducing the likelihood of oscillations.

Explain the concept of reward hacking and how it can negatively impact an RL agent’s learning.

Answer: Reward hacking occurs when an RL agent finds a way to maximize its rewards in unintended ways, often exploiting loopholes in the reward function. For example, if a reward function encourages speed in a driving environment, the agent might crash into walls at high speed to receive the reward faster. Reward hacking leads to undesirable or harmful behaviors and occurs due to poorly designed or overly simplistic reward functions. To prevent this, reward functions should be carefully crafted and tested, and constraints should be added to avoid negative side effects.

What are the pros and cons of using continuous vs. discrete action spaces in RL?

Answer:

Discrete Action Spaces:

Pros: Easier to implement and analyze. Commonly used in environments like games (e.g., up, down, left, right in a grid-world).

Cons: Limited by predefined actions, which may not capture nuanced behaviors or control settings (e.g., turning angles in autonomous driving).

Continuous Action Spaces:

Pros: Can model more complex behaviors and controls (e.g., precise steering angles, continuous movement in robots).

Cons: More difficult to learn and optimize due to an infinite number of possible actions. Requires advanced algorithms like Deep Deterministic Policy Gradient (DDPG) or Proximal Policy Optimization (PPO).

Describe the concept of hierarchical reinforcement learning and its use cases.

Answer: Hierarchical Reinforcement Learning (HRL) involves decomposing a complex task into smaller sub-tasks, each with its own policy. The agent learns these sub-policies and combines them to solve the overall task. HRL is particularly useful in multi-stage environments where breaking down the problem reduces complexity. For example, in a robotic arm manipulation task, HRL can define separate sub-policies for grasping, lifting, and placing objects. This approach improves learning efficiency and scalability in complex environments.

5. Strategies for Preparing for RL Interviews

Mastering the BasicsReview core RL concepts such as MDPs, Q-learning, and policy gradients. Make sure you understand the mathematical foundations and can explain them clearly.

Practicing with ProjectsCreate projects such as building a game-playing agent or optimizing a robot's movement. Implementing these projects helps solidify your understanding.

Leveraging Open-Source LibrariesUse libraries like OpenAI Gym, TensorFlow, or PyTorch to practice RL algorithms and experiment with different models.

Participating in CompetitionsCompete in RL competitions on Kaggle or other platforms to gain practical experience and showcase your skills.

6. Common Mistakes and How to Avoid Them

Misunderstanding RL ConceptsEnsure you have a clear grasp of terms like “policy,” “value function,” and “reward.” Use visual aids and simple analogies to clarify these concepts.

Lack of Practical ImplementationTheory alone is not enough. Implement RL algorithms to get a deeper understanding of their behavior and limitations.

Overlooking Mathematical FoundationMake sure you understand the mathematical underpinnings of algorithms, such as gradient descent and dynamic programming.

7. Additional Resources and Learning Paths

Books

Reinforcement Learning: An Introduction by Sutton and Barto.

Deep Reinforcement Learning Hands-On by Maxim Lapan.

Online Courses

Stanford’s CS234: Reinforcement Learning.

DeepLearning.AI’s RL specialization on Coursera.

Research Papers

“Playing Atari with Deep Reinforcement Learning” by Mnih et al.

“Proximal Policy Optimization Algorithms” by Schulman et al.

Communities and Forums

Engage with RL communities on Reddit (r/MachineLearning), StackExchange, and the OpenAI community for networking and knowledge sharing.

8. Conclusion

Reinforcement learning is a fascinating and complex field with immense potential. Preparing for RL interviews requires a solid understanding of core concepts, hands-on coding experience, and familiarity with current research. By mastering these areas, you can position yourself for success in landing roles at leading tech companies.

Use this guide to structure your preparation, focus on the most critical topics, and practice both theory and application. Stay curious, keep experimenting, and you’ll be well on your way to becoming an RL expert!